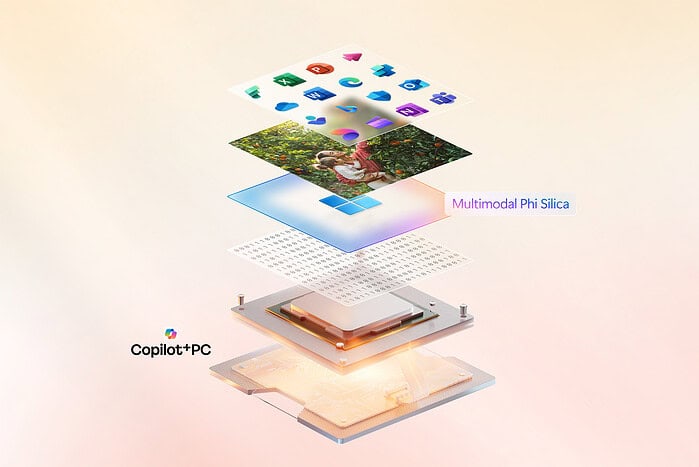

Microsoft Brings Multimodal AI to Phi Silica: Here's What You Need to Know



Read our disclosure page to find out how you can help MSPoweruser sustain the editorial team.

Microsoft has expanded its on-device language model, Phi Silica, with multimodal capabilities that add image understanding to its existing text abilities. The update, available on Copilot+ PCs, lets Phi Silica process both images and text efficiently, with the goal of improving accessibility and productivity experiences.

Rather than deploying a separate vision model, Microsoft added a lightweight 80-million-parameter projector that plugs into the existing Phi Silica system. This design keeps the extra memory and storage footprint small, and the whole pipeline runs on-device on the PC's NPU.
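
To illustrate what a "projector" generally does in multimodal language models, here is a minimal sketch of the common LLaVA-style approach: an image encoder turns a picture into a set of patch embeddings, and a small learned layer maps each one into the language model's embedding space so the model can read them like extra tokens. This is an assumption about the general technique, not Phi Silica's actual code. The single linear layer, the toy dimensions, and every function name below (`linear`, `project_image_features`, `build_input_sequence`, `linear_param_count`) are hypothetical.

```python
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def linear(x: Vector, weight: Matrix, bias: Vector) -> Vector:
    """Return weight @ x + bias, where weight has shape (out_dim, in_dim)."""
    if any(len(row) != len(x) for row in weight):
        raise ValueError("each weight row must match the input dimension")
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def project_image_features(patches: List[Vector], weight: Matrix, bias: Vector) -> List[Vector]:
    """Map every image-encoder patch embedding into the language model's embedding space."""
    return [linear(p, weight, bias) for p in patches]


def build_input_sequence(image_tokens: List[Vector], text_tokens: List[Vector]) -> List[Vector]:
    """Place projected image tokens ahead of the text token embeddings (one common layout)."""
    return image_tokens + text_tokens


def linear_param_count(in_dim: int, out_dim: int) -> int:
    """Number of weights plus biases in a single linear projector layer."""
    return in_dim * out_dim + out_dim


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    rng = random.Random(0)
    d_vision, d_model = 4, 6                        # toy sizes, NOT Phi Silica's real dimensions
    patches = random_matrix(3, d_vision, rng)       # stand-in for image-encoder output
    text = random_matrix(2, d_model, rng)           # stand-in for text token embeddings
    weight = random_matrix(d_model, d_vision, rng)  # in this sketch, the only new weights
    bias = [0.0] * d_model
    sequence = build_input_sequence(project_image_features(patches, weight, bias), text)
    print(len(sequence), len(sequence[0]))          # 5 6
    print(linear_param_count(d_vision, d_model))    # 30
```

In this sketch the language model itself is left untouched and only the projector weights are new, which matches the article's point that no separate vision model has to be shipped. Real projectors are often small multi-layer networks with far larger dimensions.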


A key benefit of this update is improved accessibility. Phi Silica can now generate detailed image descriptions for blind and low-vision users, producing both short and extended alt text directly on the device without relying on cloud services. This improves the screen reader experience across Windows applications.

Technical optimizations, including reuse of Microsoft's Florence image encoder and running the model in quantized form, keep processing fast and latency low. Early evaluations show that the new system generates higher-quality image descriptions than previous methods.
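
The article does not say which quantization scheme Phi Silica uses. As an illustration only, the sketch below shows symmetric per-tensor quantization, one common way to store weights as small integers plus a single floating-point scale. The function names and the choice of scheme are assumptions, and the production bit widths may differ.

```python
from typing import List, Tuple


def quantize_symmetric(values: List[float], num_bits: int = 8) -> Tuple[List[int], float]:
    """Map floats to signed integers in [-qmax, qmax] using one shared scale."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8-bit, 7 for 4-bit
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step, then clamp against floating-point overshoot.
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate floats; each error is at most half a quantization step."""
    return [qi * scale for qi in q]


def weight_storage_mb(num_params: int, bits_per_weight: int) -> float:
    """Decimal megabytes needed for the raw weights alone (ignores scales and metadata)."""
    return num_params * bits_per_weight / 8 / 1_000_000


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.27]
    q, scale = quantize_symmetric(weights)
    print(q)
    print(dequantize(q, scale))
    # Illustrative arithmetic for an 80-million-parameter component:
    for bits in (16, 8, 4):
        print(bits, "bits ->", weight_storage_mb(80_000_000, bits), "MB")
```

The storage arithmetic is the main reason quantization matters on a PC: if an 80-million-parameter projector were stored at 8 bits per weight, its raw weights would take about 80 MB, versus 160 MB at 16 bits. Whether Microsoft quantizes the projector this way is not stated in the article.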

Microsoft’s approach highlights a broader shift toward resource-conscious, privacy-first AI on personal devices, delivering richer, faster, and more secure user experiences without additional hardware demands.
