OpenAI’s o3 and o4-mini Models Can Now Analyze Images Like a Human

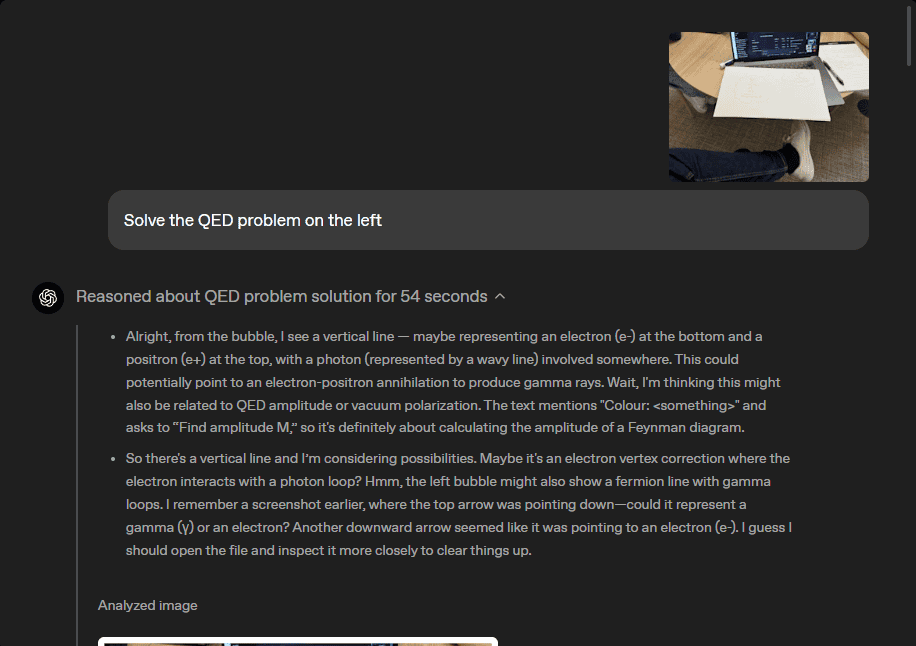

OpenAI has introduced two new models, o3 and o4-mini, that allow ChatGPT to process and understand images in a way similar to human reasoning. These models can interpret user-uploaded images, such as photos, diagrams, or screenshots, and provide detailed analyses. For instance, ChatGPT can now read handwritten notes, solve visual math problems, or identify issues in a screenshot of a software error.

The models achieve this by incorporating visual information into their reasoning process, enabling them to manipulate images, for example by rotating or zooming, to better comprehend the content. This advancement allows for more accurate and thorough responses, even when dealing with imperfect or complex images.
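To make "rotating or zooming" concrete, here is a minimal Python sketch of those two operations on a tiny grayscale image stored as a list of rows. It is purely illustrative: the function names `rotate_90_clockwise` and `zoom` are hypothetical, and nothing here reflects how OpenAI's models actually process images internally.

```python
from typing import List

# Assumption for illustration: an image is a list of rows of grayscale values.
Image = List[List[int]]


def rotate_90_clockwise(image: Image) -> Image:
    """Return a copy of the image rotated 90 degrees clockwise."""
    # Reverse the row order, then transpose rows into columns.
    return [list(row) for row in zip(*image[::-1])]


def zoom(image: Image, top: int, left: int,
         height: int, width: int, factor: int) -> Image:
    """Crop a region and enlarge it by an integer factor.

    Uses nearest-neighbour scaling: every pixel is repeated
    `factor` times horizontally and vertically.
    """
    crop = [row[left:left + width] for row in image[top:top + height]]
    zoomed: Image = []
    for row in crop:
        wide = [pixel for pixel in row for _ in range(factor)]
        zoomed.extend(list(wide) for _ in range(factor))
    return zoomed


image = [
    [1, 2, 3],
    [4, 5, 6],
]
rotated = rotate_90_clockwise(image)
zoomed = zoom(image, top=0, left=1, height=1, width=2, factor=2)
```

In this sketch, `rotated` turns a sideways image upright, and `zoomed` enlarges a small region so fine details (such as small handwriting) occupy more pixels.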

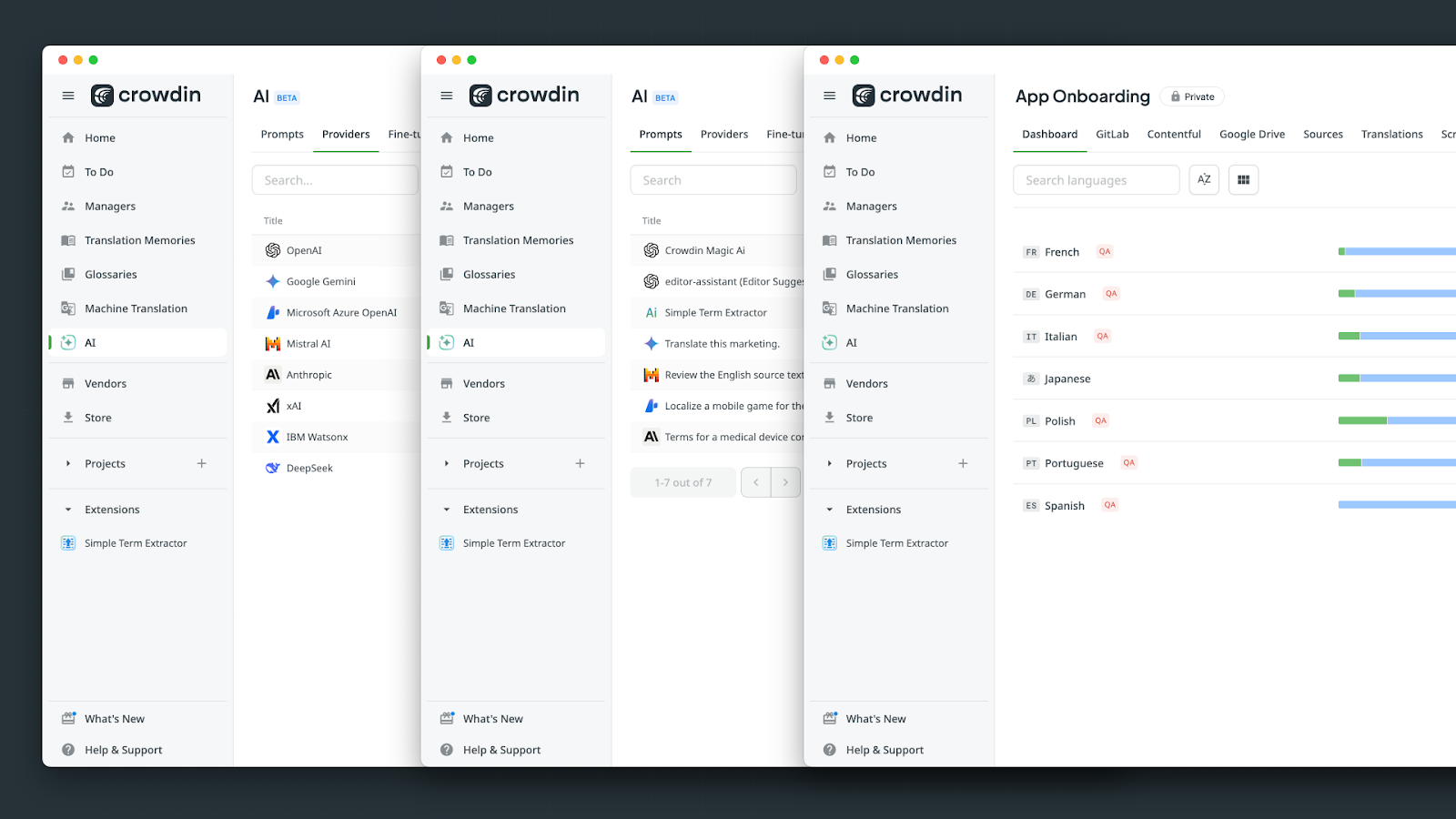

These capabilities are available to OpenAI’s ChatGPT Plus, Pro, and Team users, with plans to expand access in the near future. The integration of visual reasoning marks a significant step forward in making AI interactions more intuitive and versatile.
