Meta identifies domains distributing malware using fake ChatGPT tools

Meta wants to warn everyone about bad actors who use ChatGPT as a lure to disguise their malware. In a recent security report, the Facebook and Instagram parent company said that since March it has flagged more than a thousand domains for distributing ChatGPT-like tools that secretly hide malware. (via Axios)

Malicious actors are known for following trends to create effective bait for unsuspecting victims. According to Meta, ChatGPT is currently the central focus of several such campaigns.

“Malware operators, just like spammers, are very attuned to what’s trendy at any given moment,” said Guy Rosen, Meta’s Chief Information Security Officer. “They latch onto hot button issues, popular topics, to get people’s attention.”

In its report, the company said it had discovered 10 malware families posing as ChatGPT tools. The campaigns rely on browser extensions that act as bait. Although some of these extensions offer ChatGPT-like features, Meta said they could also harvest sensitive data, including credit card information and passwords.

The company said it had already reported the domains hosting these malware-laden ChatGPT-like tools to its industry partners. However, the number of people affected by the campaigns is unknown, since the attackers operate outside Meta-owned platforms. Meanwhile, as Axios noted, the company has released new tools to help its users fight malware. One of them is a guide for business account users that explains how to remove malware from their devices and how to stop attackers from making themselves administrators of those accounts.

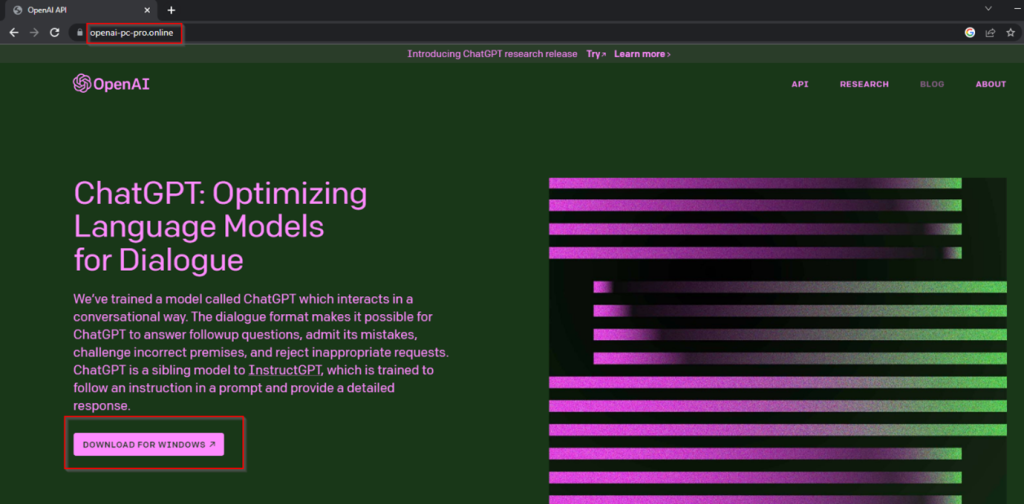

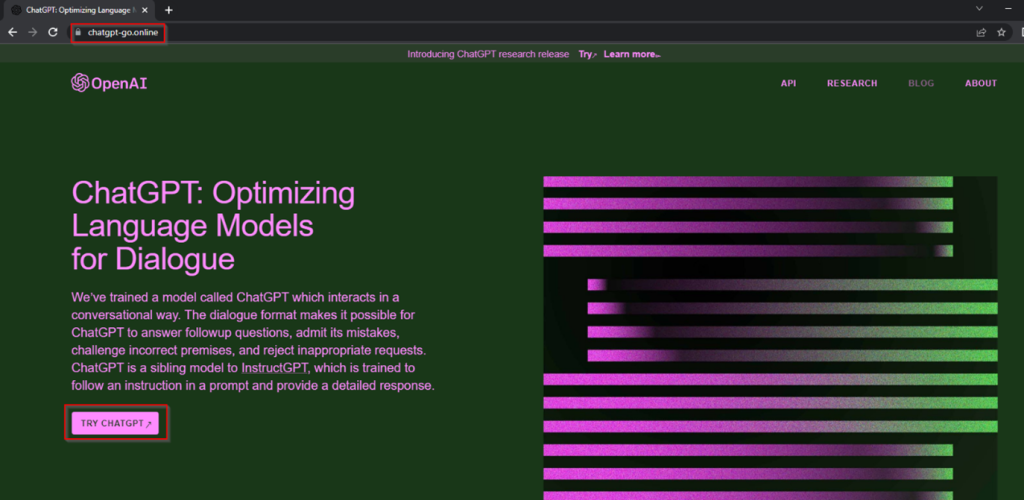

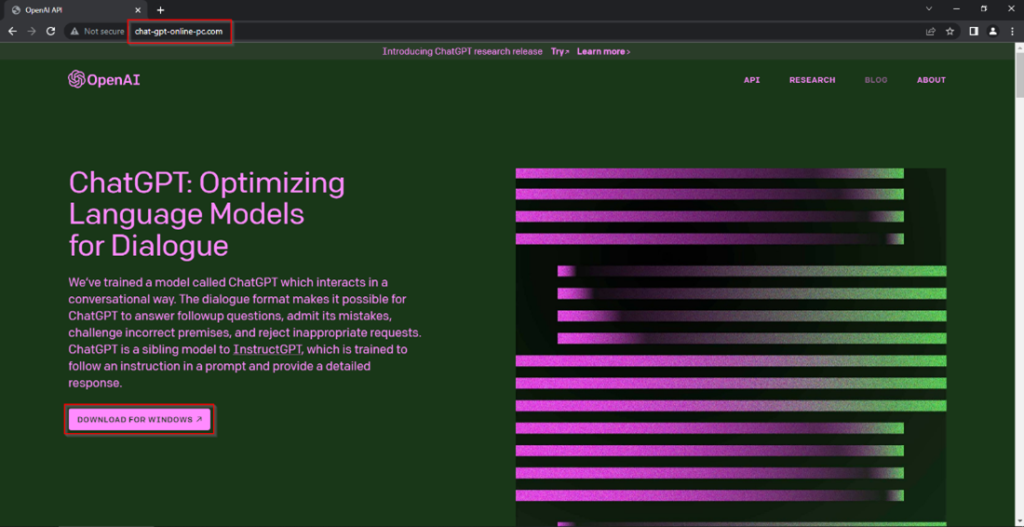

Meta is not the first to raise concerns about malware campaigns using ChatGPT as cover. In February, researchers at Cyble revealed typosquatted domains posing as ChatGPT portals and websites. Their report also described 50 fake and malicious apps that used OpenAI and ChatGPT logos. Cyble further reported that such campaigns circulated on Meta's own platforms, including Facebook. For instance, it found several pages impersonating OpenAI that offered links leading victims to phishing pages and tricked them into downloading malicious files onto their devices.