Google's AI news tool, which "scrapes" info without consent, raises ethical concerns in beta program

Read our disclosure page to find out how you can help MSPoweruser sustain the editorial team.

Key notes

- Google offers an AI tool to generate news content for select publishers in exchange for data and feedback.

- The tool gathers and summarizes content from public sources, raising concerns about plagiarism and its impact on original sources.

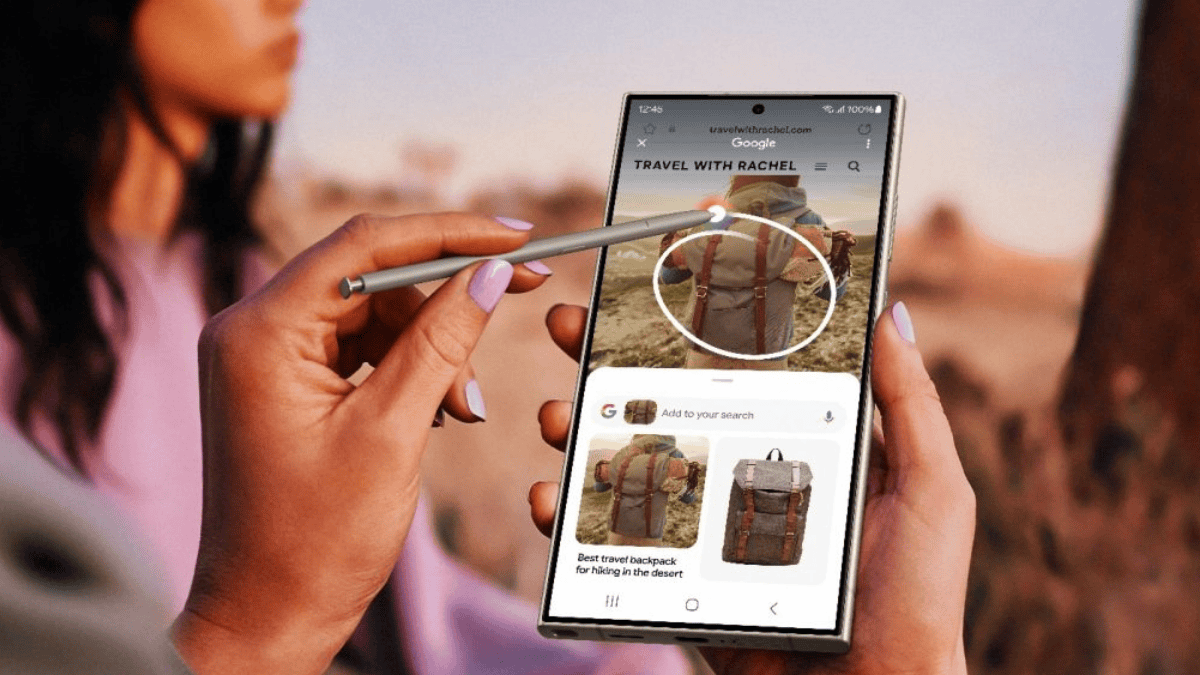

Google is facing controversy over a new program offering an unreleased AI tool for news content creation to select independent publishers.

The program gives publishers free access to the tool in exchange for providing data and feedback. The AI tool gathers and summarizes content from public sources, such as government reports and other news outlets, potentially allowing publishers to produce content relevant to their audiences at no cost.

However, critics have raised concerns about the program's ethical implications. The tool scrapes content from other sources without their consent, which some argue borders on plagiarism, echoing the copyright dispute between The New York Times and OpenAI. Critics also worry that AI-generated articles could draw traffic away from the original sources, harming the wider news industry.

Digital Content Next CEO Jason Kint said the program contradicts Google's stated mission of supporting news and raised potential antitrust questions. While some publishers benefit from the program's financial and technological support, others remain wary of its long-term impact on journalism.

Google maintains that the tool is designed to help small publishers and stresses its focus on factual content and human editing. Still, the program highlights the ongoing debate over the use of AI in news creation and the need for transparency and ethical safeguards in how such tools are developed and deployed.

The program follows recent criticism of Google's Gemini AI, whose image generation feature has been accused of producing inaccurate and biased content. Critics labeled the tool "woke" after it depicted female popes, Black Vikings, and racially diverse Founding Fathers. Gemini also drew criticism for its responses on pedophilia, which framed it as a complex issue rather than simply calling it "gross."

These concerns raise questions about Google’s ability to develop and implement AI tools responsibly, particularly in the context of news creation.

More here.