Wayve's new autonomous AI LINGO-2 can interact with passengers and take orders

Key notes

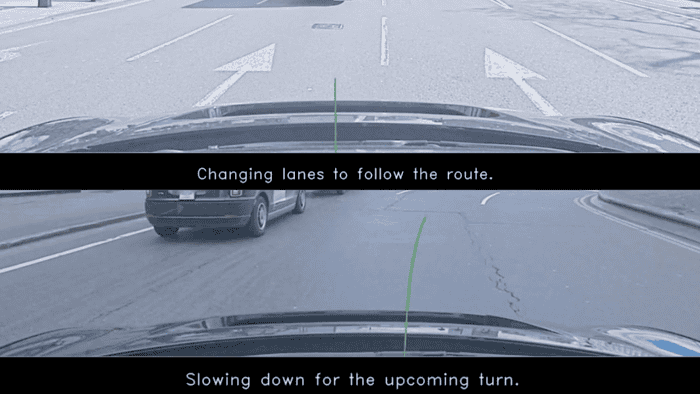

- Wayve’s LINGO-2 is the first self-driving car model to both navigate and explain its actions in real time.

- This vision-language-action model (VLAM) uses cameras and language processing to understand its surroundings.

- Passengers may interact with LINGO-2 by asking questions or providing simple instructions.

Wayve has unveiled LINGO-2, a new vision-language-action model (VLAM) designed for use on public roads. It combines visual perception, language processing, and real-time decision-making, and acts as the brain of a self-driving car: it uses cameras to perceive its surroundings (vision) and language processing to interpret the scene.

Unlike its predecessors, LINGO-2 can explain its actions in real time while controlling the vehicle’s movements.

“LINGO-2 navigates around a bus. We can observe that LINGO-2 can follow the instructions to either hold back and ‘stop behind the bus’ or ‘accelerate and overtake the bus.’”

This feature lets the model narrate its decision-making process, which could increase trust in autonomous vehicles. For instance, LINGO-2 can explain why it is slowing down for pedestrians, giving passengers a clearer picture of its behavior.

“We ask LINGO-2 to describe ‘What is the weather like?’ It can correctly identify that the weather ranges from ‘very cloudy, there is no sign of the sun’ to ‘sunny’ to ‘the weather is clear with a blue sky and scattered clouds.’”

LINGO-2 also opens avenues for interaction between passengers and the vehicle. Passengers may be able to issue simple instructions in natural language, such as requesting a turn, and to ask about the car’s surroundings, including the status of traffic lights or potential hazards.

“When we ask the model, ‘What is the color of the traffic lights?’ it correctly responds, ‘The traffic lights are green.’”

Despite these capabilities, Wayve acknowledges certain limitations: the accuracy of the model’s explanations and its ability to safely execute spoken instructions in varied real-world scenarios both require further refinement.

LINGO-2’s debut could be a significant step for autonomous vehicle technology. It points to a future in which self-driving cars explain their actions clearly and interact with passengers, although challenges remain.

