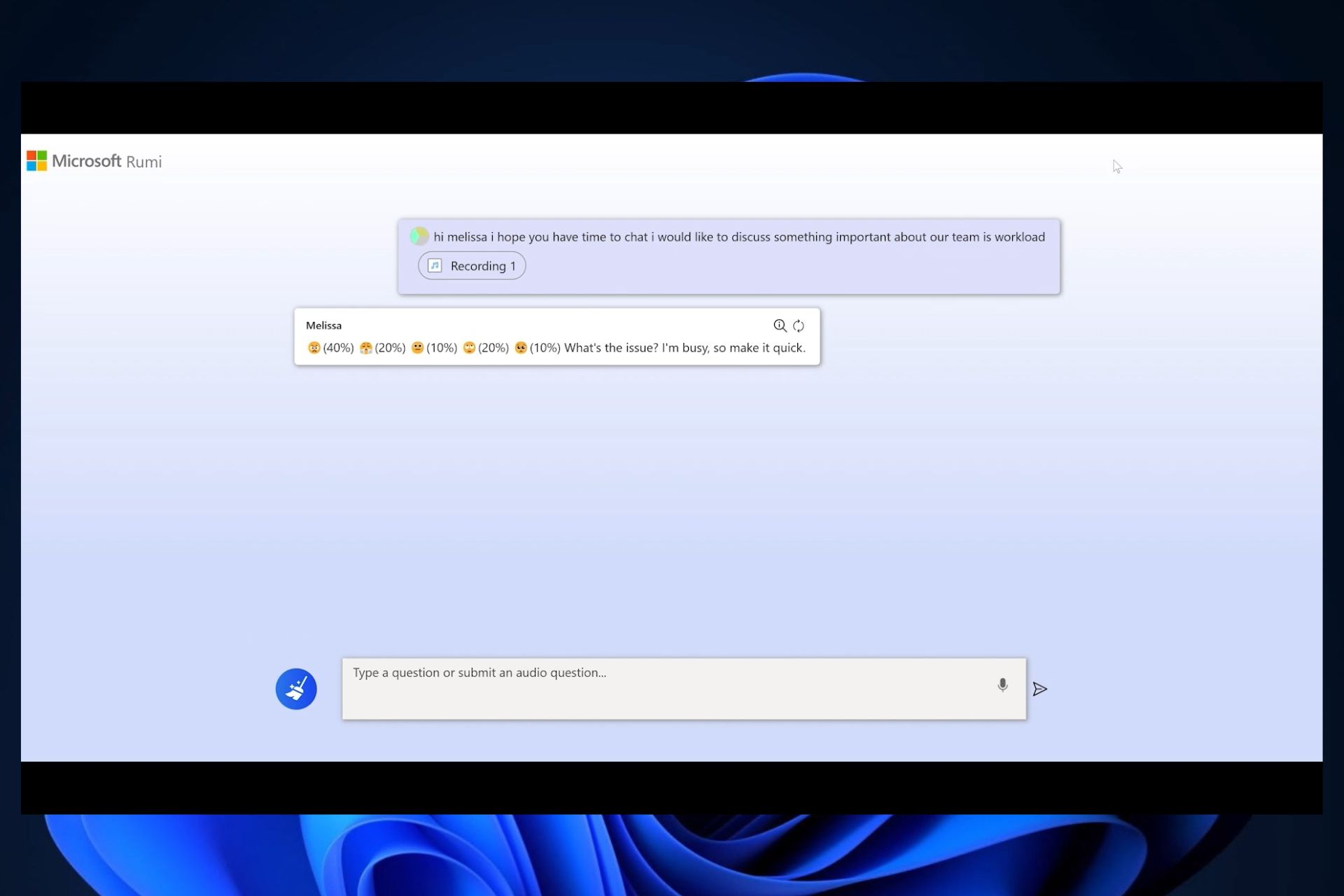

Microsoft Project Rumi can analyze your facial expressions and other cues to gauge your attitude

Microsoft Project Rumi is a Microsoft Research project that lets a large language model (LLM) take paralinguistic input into account when interacting with people. In other words, the AI picks up on your facial expressions, tone of voice, gestures, and eye movements, and uses them to form an idea of your attitude during the interaction.

Microsoft Project Rumi aims to address a current limitation of AI when interacting with people. In a text-only conversation, for example, Bing Chat cannot see your facial expressions or hear your tone of voice, so it cannot shape an answer that also responds to your attitude. It’s true that Bing Chat can get very creative, and that it may pick up on certain words you use and give a sensible answer, more or less. But ultimately it can’t respond to you fully, because it neither sees nor hears you.

With Microsoft Project Rumi, the AI model will use your device’s microphone and camera, with your permission, of course, to actually hear and see you. Based on that richer input, which includes your facial expressions, tone of voice, and so on, the model will tailor its answer accordingly.
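To make the idea concrete, here is a minimal sketch of how non-verbal cues might be folded into a text prompt before it reaches a language model. It is only an illustration under stated assumptions: the `ParalinguisticCues` class, the `build_prompt` function, and the bracketed context format are all hypothetical and are not taken from Microsoft's implementation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ParalinguisticCues:
    """Hypothetical container for non-verbal signals (not Project Rumi's real schema)."""
    facial_expression: str  # e.g. "frustrated", as a camera-based classifier might report
    voice_tone: str         # e.g. "tense", as a microphone-based classifier might report


def build_prompt(user_text: str, cues: Optional[ParalinguisticCues]) -> str:
    """Prepend non-verbal context to the user's words when cues are available."""
    if cues is None:
        # No camera or microphone permission: fall back to a text-only prompt.
        return user_text
    context = (
        f"[The user looks {cues.facial_expression} "
        f"and sounds {cues.voice_tone}.]"
    )
    return f"{context}\n{user_text}"


# Example: the same question, with and without non-verbal context.
plain = build_prompt("Is my order late again?", None)
enriched = build_prompt(
    "Is my order late again?",
    ParalinguisticCues(facial_expression="frustrated", voice_tone="tense"),
)
```

In this sketch the permission check is reduced to passing `None` when no cues were captured, so the model simply receives the plain text in that case.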

Microsoft Project Rumi, like several other Microsoft AI projects, is being presented as a breakthrough in AI technology. Many users complain that AI lacks a human touch, and this technology is meant to bring AI as close to human-like interaction as is possible for now.

What do you think about it? Let us know in the comments section below.
