Google to introduce "near me" and "scene exploration" to multisearch tech

Last month, Google rolled out multisearch in Lens, a feature that lets users search with an image and text at the same time and is aimed primarily at helping shoppers find specific items. Now Google has bigger ambitions for the technology and is adding more advanced features. On Wednesday, Google Senior Vice President Prabhakar Raghavan revealed two upcoming multisearch functions: “near me” and “scene exploration.”

The “near me” function is planned for a global release later this year, in English first and in other languages over time. It works like basic multisearch, in that a search combines an image with words. However, instead of using the words to describe the physical appearance of the item in the image, users add the phrase “near me” to focus the search on location. The results then show nearby businesses that offer the product you are looking for.

“For example, say you see a colorful dish online you’d like to try – but you don’t know what’s in it, or what it’s called,” Raghavan explains. “When you use multisearch to find it near you, Google scans millions of images and reviews posted on web pages, and from our community of Maps contributors, to find results about nearby spots that offer the dish so you can go enjoy it for yourself.”

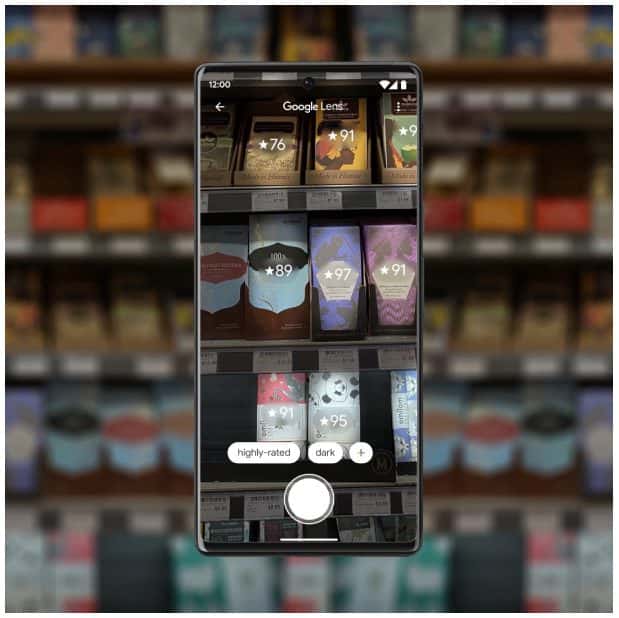

The “scene exploration” function, on the other hand, goes a step further by giving users information about multiple objects within the camera frame at once. According to Raghavan, you will be able to pan the camera in multisearch and immediately receive information about the items captured in the scene.

“Imagine you’re trying to pick out the perfect candy bar for your friend who’s a bit of a chocolate connoisseur,” Raghavan elaborates. “You know they love dark chocolate but dislike nuts, and you want to get them something of quality. With scene exploration, you’ll be able to scan the entire shelf with your phone’s camera and see helpful insights overlaid in front of you. Scene exploration is a powerful breakthrough in our devices’ ability to understand the world the way we do – so you can easily find what you’re looking for – and we look forward to bringing it to multisearch in the future.”

With these new functions extending the multisearch capability of Google Lens, Google can make its image recognition technology more practical for everyday use. Raghavan notes that Lens users currently perform more than 8 billion searches per month. With the new improvements coming, that number could increase dramatically as the technology gains more recognition from the public.
