Google launches 'multisearch' in Lens to let users search with images and text at the same time

Google can answer most of your questions. Finding the right, precise answer can still be a challenge, though, especially when your query is about an image. To address this, Google has introduced “multisearch,” a feature that lets users search with an image and accompanying text at the same time. Google says it will return more detailed image search results that better match what users are looking for. The feature is currently available as a beta in English for US users.

“At Google, we’re always dreaming up new ways to help you uncover the information you’re looking for — no matter how tricky it might be to express what you need,” said Belinda Zeng, Search Product Manager at Google. “That’s why today, we’re introducing an entirely new way to search: using text and images at the same time. With multisearch in Lens, you can go beyond the search box and ask questions about what you see.”

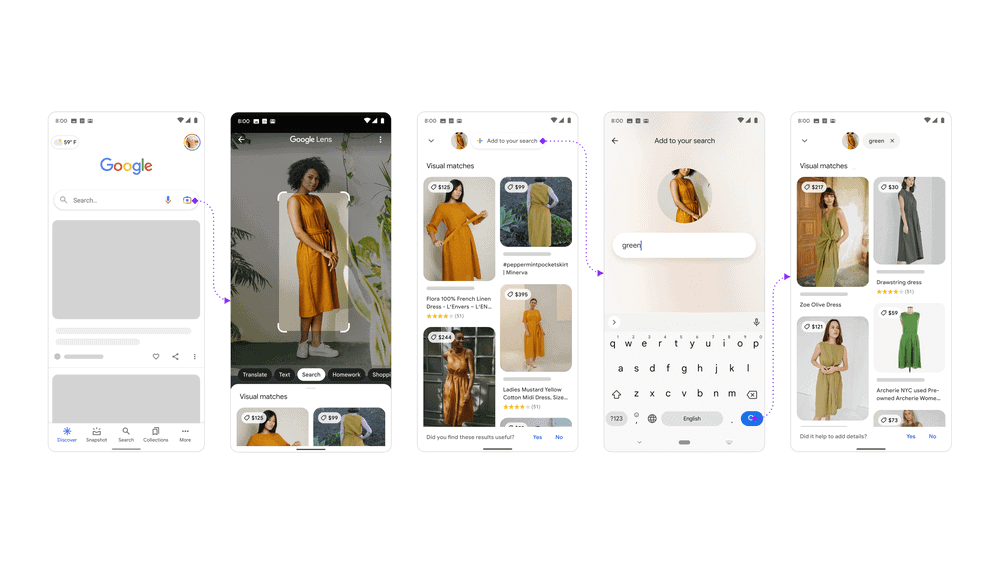

Using the new feature takes only a few steps. It is accessed through the Google app on Android or iOS devices. After launching the app, tap the Lens camera icon, which gives you the option to either take a photo with the camera or use an existing photo stored on the device. Once you have taken or picked the photo you want to use, swipe up to see the initial results based on the visual content of the image. To add text to the query and use the multisearch feature, tap the “+ Add to your search” button, and a search bar will appear. Type the words that better describe the exact item you are looking for.

According to Google, this helps you get better answers about the objects you see and lets you “refine your search by color, brand or a visual attribute.” For instance, it can return better results about the specific color of a dress, the size of a table, the material of a mug, or even care instructions for a particular plant.

“All this is made possible by our latest advancements in artificial intelligence, which is making it easier to understand the world around you in more natural and intuitive ways,” Zeng added. “We’re also exploring ways in which this feature might be enhanced by MUM – our latest AI model in Search – to improve results for all the questions you could imagine asking.”