Detecting AI inventions: OpenAI will label your self-invented AI techniques as malicious


Read our disclosure page to find out how you can help MSPoweruser sustain the editorial team.

Detecting AI inventions might seem an odd goal for an AI company, but OpenAI, the company behind ChatGPT, the world's most popular AI tool, will apparently flag any AI inventions you make on ChatGPT as malicious.

As spotted by a Reddit user, OpenAI appears to be detecting AI inventions that users discover, intentionally or not, while using ChatGPT.

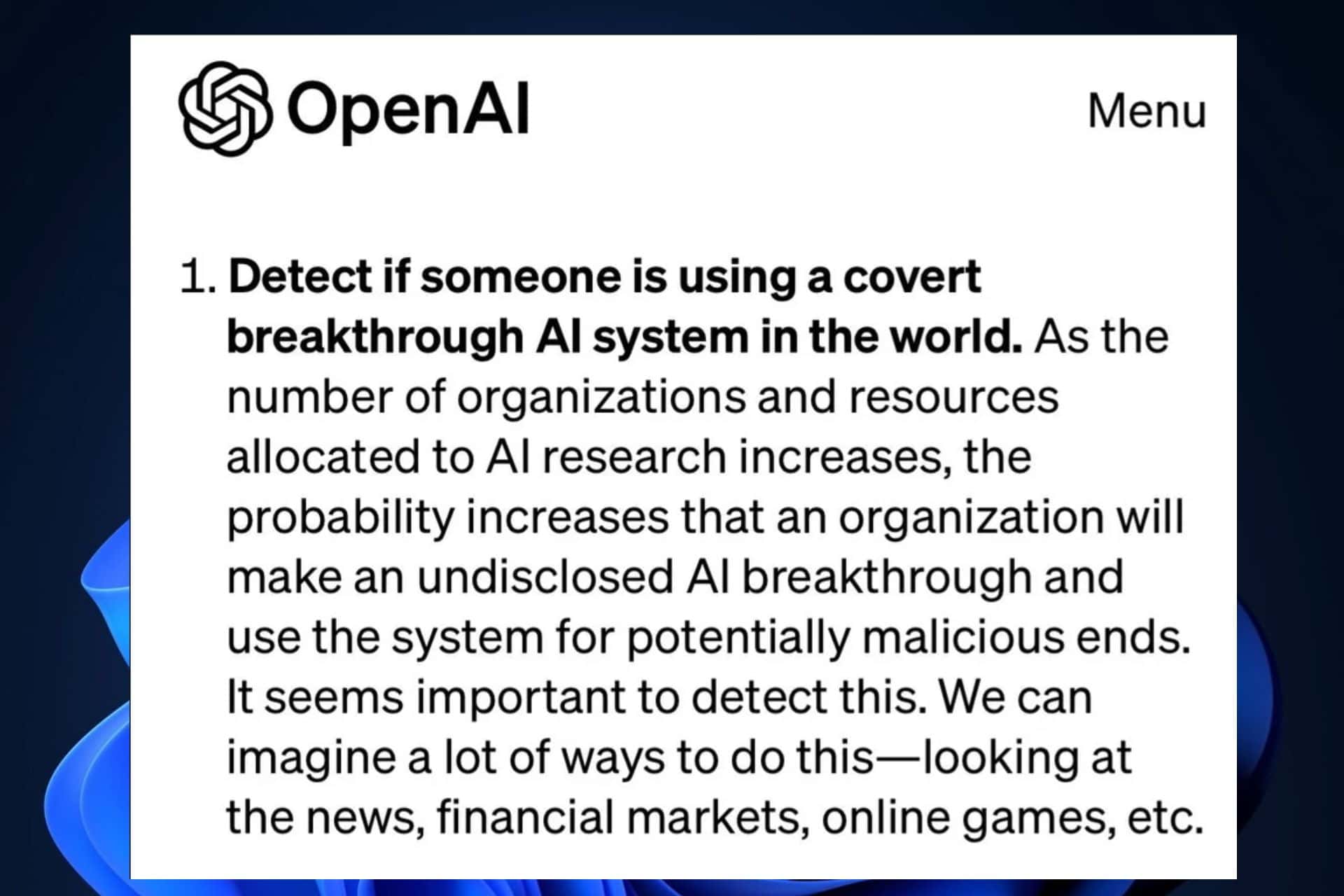

> Detect if someone is using a covert breakthrough AI system in the world. As the number of organizations and resources allocated to AI research increases, the probability increases that an organization will make an undisclosed AI breakthrough and use the system for potentially malicious ends. It seems important to detect this. We can imagine a lot of ways to do this – looking at news, financial markets, online games, etc.

OpenAI is planning to detect such AI inventions all around the globe. However, only covert ones are in scope: the inventions must be undisclosed, or built in a way that keeps them secret.

Truthfully, this could also be a good thing, as phishers and other threat actors might be using undisclosed AI inventions to launch and enable their attacks. Identifying these inventions and understanding how they work could pay off in the end.

What do you think? Is it fair for OpenAI to detect AI inventions?