Claude 3.5 Sonnet's "Computer use" feature honestly sounds really freaky

The feature is now available on the API for developers.

Read our disclosure page to find out how you can help MSPoweruser sustain the editorial team.

Key notes

- Anthropic released Claude 3.5 Sonnet with a “Computer use” feature that lets the AI control a computer by looking at the screen.

- The experimental feature has limitations and risks.

- The Claude 3.5 Haiku model will also be available later this month as a text-only version.

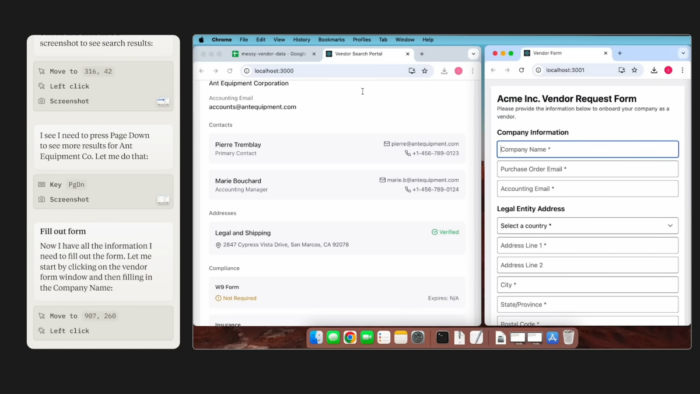

Anthropic has just released an upgraded version of its Claude 3.5 Sonnet AI model. And with that, the Amazon-backed AI company is introducing a new feature in public beta called “Computer use”, which basically lets the AI control your computer by looking at your screen.

This feature is now available on the API for developers, but honestly, it sounds really freaky. In a demo shown on a MacBook, Anthropic shows that you can direct the AI to operate a computer the way a human would. That means you can make it look at the screen, move the cursor, click buttons, and type text.

Microsoft, OpenAI, and Google have yet to give their own AI assistants (like Copilot or Gemini) full control over users’ PCs. Still, not too long ago, Microsoft filed a patent application for a system that lets you control your PC using your tongue.

According to the application, you can select letters by moving your eyes and tapping your teeth, with additional gestures for actions like erasing letters.

Anthropic also warns that the “Computer use” feature is still far from perfect, especially when it’s being used to browse the internet. The AI still struggles with actions like scrolling, dragging, and zooming. The company says that the feature “poses unique risks that are distinct from standard API features or chat interfaces.”

In another announcement, Anthropic also unveiled the Claude 3.5 Haiku model. Billed as the fastest in the whole Claude 3.5 family, Haiku excels in coding, tool use, and reasoning, similar to OpenAI’s o1 model.

It will be available later this month via the Anthropic API, Amazon Bedrock, and Google Cloud’s Vertex AI, initially as a text-only model with plans for image input to follow.
