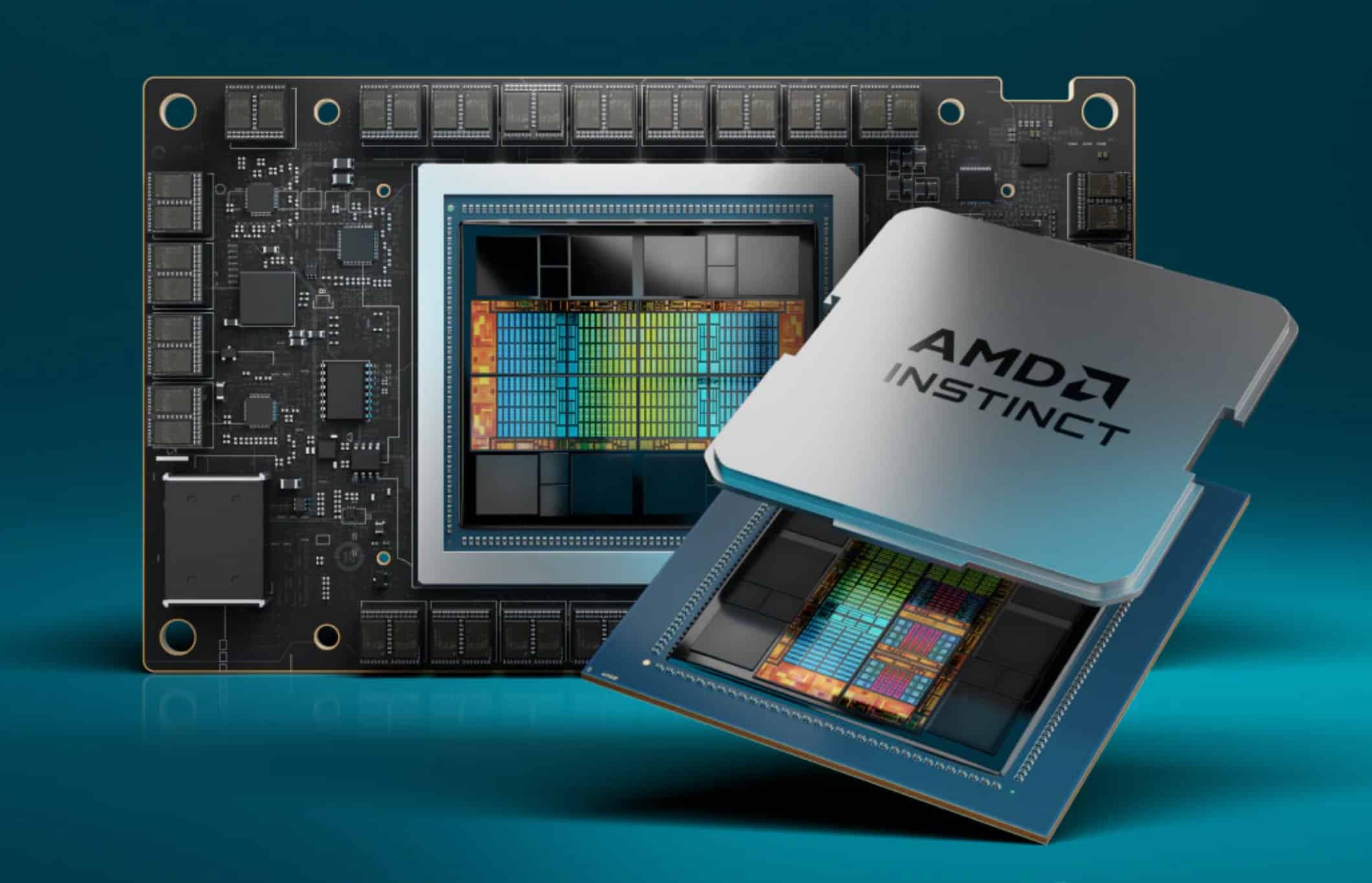

AMD unveils new AI accelerators and APU for cloud and enterprise


AMD announced its latest products and technologies for artificial intelligence (AI) and high-performance computing (HPC) at its Advancing AI event on Tuesday, aiming to challenge the dominance of rivals such as Nvidia Corp. and Intel Corp. in the fast-growing market.

The chipmaker unveiled the AMD Instinct MI300 Series accelerators, which offer the highest memory bandwidth in the industry for generative AI and top-tier performance for large language model (LLM) training and inferencing. The company also introduced the AMD Instinct MI300A accelerated processing unit (APU), a combination of the latest AMD CDNA 3 architecture and “Zen 4” CPUs, designed to deliver breakthrough performance for HPC and AI workloads.

Customer and Partner Adoption

Among the customers leveraging AMD’s latest Instinct accelerator portfolio is Microsoft Corp., which recently announced the new Azure ND MI300x v5 Virtual Machine (VM) series, optimized for AI workloads and powered by AMD Instinct MI300X accelerators. Furthermore, El Capitan, a supercomputer powered by AMD Instinct MI300A APUs and located at Lawrence Livermore National Laboratory, is expected to be the second exascale-class supercomputer powered by AMD, delivering more than two exaflops of double precision performance when fully deployed. Oracle Corp. also plans to add AMD Instinct MI300X-based bare metal instances to the company’s high-performance accelerated computing instances for AI, with MI300X-based instances planned to support OCI Supercluster with ultrafast RDMA networking.

Several major OEMs have also showcased accelerated computing systems in conjunction with the AMD Advancing AI event. Dell Technologies Inc. displayed the Dell PowerEdge XE9680 server featuring eight AMD Instinct MI300 Series accelerators and the new Dell Validated Design for Generative AI with AMD ROCm-powered AI frameworks. Hewlett Packard Enterprise Co. announced the HPE Cray Supercomputing EX255a, the first supercomputing accelerator blade powered by AMD Instinct MI300A APUs, set to become available in early 2024. Lenovo Group Ltd. announced its design support for the new AMD Instinct MI300 Series accelerators with planned availability in the first half of 2024. Super Micro Computer Inc. announced new additions to its H13 generation of accelerated servers powered by 4th Gen AMD EPYC CPUs and AMD Instinct MI300 Series accelerators.

Product Features and Specifications

The AMD Instinct MI300X accelerators are powered by the new AMD CDNA 3 architecture. Compared with the previous-generation AMD Instinct MI250X, they offer nearly 40% more compute units, 1.5x more memory capacity, and 1.7x more peak theoretical memory bandwidth, as well as support for new math formats such as FP8 and sparsity, all geared towards AI and HPC workloads. The AMD Instinct MI300A APUs, the world’s first data center APU for HPC and AI, leverage 3D packaging and the 4th Gen AMD Infinity Architecture to deliver leadership performance on critical workloads sitting at the convergence of HPC and AI.

| AMD Instinct™ | Architecture | GPU CUs | CPU Cores | Memory | Memory Bandwidth (Peak theoretical) | Process Node | 3D Packaging w/ 4th Gen AMD Infinity Architecture |
| --- | --- | --- | --- | --- | --- | --- | --- |
| MI300A | AMD CDNA™ 3 | 228 | 24 “Zen 4” | 128GB HBM3 | 5.3 TB/s | 5nm / 6nm | Yes |
| MI300X | AMD CDNA™ 3 | 304 | N/A | 192GB HBM3 | 5.3 TB/s | 5nm / 6nm | Yes |
| Platform | AMD CDNA™ 3 | 2,432 | N/A | 1.5 TB HBM3 | 5.3 TB/s per OAM | 5nm / 6nm | Yes |
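
The Platform row appears to be a straightforward aggregate of the MI300X row. As a rough check, the sketch below multiplies the per-accelerator figures from the table by eight. The count of eight accelerators per platform is an assumption here (the table does not state it), though it matches the published totals and the eight-accelerator Dell server mentioned earlier. The function name `platform_totals` is purely illustrative.

```python
# Per-accelerator figures for the MI300X, taken from the table above.
MI300X_COMPUTE_UNITS = 304
MI300X_MEMORY_GB = 192

# Assumption: one platform carries eight MI300X accelerators.
# The table does not say this explicitly.
ACCELERATORS_PER_PLATFORM = 8


def platform_totals(count: int = ACCELERATORS_PER_PLATFORM) -> dict:
    """Sum compute units and HBM3 capacity across `count` accelerators."""
    total_gb = count * MI300X_MEMORY_GB
    return {
        "compute_units": count * MI300X_COMPUTE_UNITS,
        "memory_gb": total_gb,
        # Converted with 1 TB = 1024 GB, which reproduces the table's 1.5 TB.
        "memory_tb": total_gb / 1024,
    }


if __name__ == "__main__":
    print(platform_totals())
```

Under that assumption the totals come to 2,432 compute units and 1,536 GB (1.5 TB) of HBM3, in line with the Platform row. Memory bandwidth is listed per OAM module rather than summed, so it is left out of the calculation.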

Software and Ecosystem Updates

AMD also announced the latest AMD ROCm 6 open software platform and the company’s commitment to contribute state-of-the-art libraries to the open-source community, furthering its vision of open-source AI software development. AMD continues to invest in software capabilities through the acquisitions of Nod.AI and Mipsology, as well as through strategic ecosystem partnerships with companies such as Lamini and MosaicML.
