AI Misuse Statistics: 50+ Worrying Stats and Facts

These eye-opening AI misuse statistics show that not all applications of AI are positive.

Artificial intelligence is now a key part of our daily lives. From medical research to student learning tools, it’s already streamlining industries across the board. Let’s take a look at some of its downsides.

Most Shocking AI Misuse Statistics

Whether it’s cheating, infringing on copyright, meddling in politics, or the worst kind of deepfakes – the scale and breadth of AI misuse will shock you:

- 1 out of 10 student assignments contained AI-generated content in 2023.

- 84% of workers may have exposed company data by using AI.

- 90% of artists believe copyright law is lacking for AI.

- 78% of people open AI-written phishing emails.

- Phishing emails have risen 1,265% since ChatGPT launched.

- 75% worry deepfakes could influence elections.

- Searches for NSFW deepfakes have increased 5,800% since 2019.

- 4 million people a month use deepfake ‘Nudify’ apps.

Student Misuse of AI Statistics

AI in education has many legitimate applications, but it would be foolish to think students aren’t using it to do the work for them.

1. 1 out of 10 student assignments contained AI-generated content in 2023.

(Source: Turnitin)

In the year since the education-focused Turnitin plagiarism checker launched its AI detector, approximately 1 in 10 higher-education assignments contained content generated by AI tools like ChatGPT.

Furthermore, out of the 200 million papers analyzed, more than 6 million were at least 80 percent AI-generated.

2. Almost 50% of students surveyed admitted to using AI in some form for studies.

(Source: Tyton Partners)

One 2023 paper found that nearly half of students were using AI, with 12% using it daily.

More worrying still, 75% say they’ll continue to use AI even if their institutions ban it.

3. Students and faculty are split 50/50 on the pros and cons of AI.

(Source: Tyton Partners)

The same research found that in spring, students and faculty were split roughly down the middle on whether AI would have a positive or negative impact on education.

Interestingly, by fall, 61% of faculty were in favor of integrating AI, while 39% still considered it a negative, suggesting a gradual shift in opinion.

4. 68% of middle school and high school teachers have used AI detection tools.

(Source: Center for Democracy & Technology)

In a 2024 report, most middle and high school teachers (68%) said they had used AI detection tools, up from the previous year.

Moreover, nearly two-thirds reported students facing consequences for allegedly using generative AI in their assignments, up from 48% in the 2022-2023 school year.

It seems AI is now fully embedded in middle schools, high schools, and higher education.

AI Misuse Statistics on the Job

AI use is growing across all industries, and students aren’t the only ones cutting corners. The share of workers who fear AI, or use it in risky ways, is striking.

5. More than half of workers use AI weekly.

(Source: Oliver Wyman Forum)

Based on a study of more than 15,000 workers across 16 countries, over 50% say they use AI on a weekly basis for work.

6. 84% of workers may have exposed company data by using AI.

(Source: Oliver Wyman Forum)

From the same study, 84% of those who use AI admit that this could have exposed their company’s proprietary data. This poses new risks when it comes to data security.

7. Despite concerns, 41% of employees surveyed would use AI within finance.

(Source: Oliver Wyman Forum, CNN World)

Risk also extends to finances. Although 61% of employees surveyed are concerned about the trustworthiness of AI results, 40% of those would still use it to make “big financial decisions.”

30% would even share more personal data if that meant better results.

That may not be wise: one finance worker in Hong Kong was duped into transferring $25 million after cybercriminals deepfaked the company’s chief financial officer in a video conference call.

8. 37% of workers have seen inaccurate AI results.

(Source: Oliver Wyman Forum)

Concerns about trustworthiness are warranted, as nearly 40% of US employees say they have seen “errors made by AI while it has been used at work.” Perhaps a bigger concern is how many employees have acted on false information.

Interestingly, the highest percentage of employees recognizing inaccurate AI info is 55% in India. This is followed by 54% in Singapore and 53% in China.

At the other end of the scale, just 31% of German employees have seen errors.

9. 69% of employees fear personal data misuse.

(Source: Forrester Consulting via Workday)

It’s not just employers that face potential risk from workers. A 2023 study commissioned by Workday suggests more than two-thirds of employees are worried that using AI in the workplace could put their own data at risk.

10. 62% of Americans fear AI usage in hiring decisions.

(Source: Ipsos Consumer Tracker, ISE, Workable)

Before employees even make it into the workforce, AI in hiring decisions is a growing concern.

According to Ipsos, 62% of Americans believe AI will be used to decide which job applicants get hired.

ISE’s findings suggest this fear is well founded: 28% of employers rely on AI in the hiring process.

Moreover, a 2023 survey of 3,211 professionals arrived at a similar figure, with 950 (29.6%) admitting to using AI in recruitment.

11. Americans are most concerned about AI in law enforcement.

(Source: Ipsos Consumer Tracker)

67% of polled Americans fear AI will be misused in police and law enforcement. This is followed by concerns about AI in hiring (62%) and “too little federal oversight in the application of AI” (59%).

Copyright AI Misuse Stats

If AI can only rely on existing information, such as art styles or video content, where does that leave the original creator and copyright holder?

12. 90% of artists believe copyright law is lacking for AI.

(Source: Book and Artist)

In a 2023 survey, 9 out of 10 artists said copyright laws are outdated when it comes to AI.

Furthermore, 74.3% think scraping internet content for AI learning is unethical.

Art sales and services make up 32.5% of respondents’ annual income, and 54.6% are concerned that AI will impact their earnings.

13. An ongoing class action lawsuit alleges AI companies infringed artists’ copyrights.

(Source: Data Privacy and Security Insider)

Visual artists Sarah Andersen, Kelly McKernan, and Karla Ortiz are in an ongoing legal battle against Stability AI (the company behind Stable Diffusion), Midjourney, and others.

A judge has ruled that it is plausible the AI models function in a way that infringes on copyrighted material, allowing the plaintiffs’ claims to proceed.

14. Getty Images claims Stability AI illegally copied 12 million images.

(Source: Reuters)

It’s not just individual artists. Photography giant Getty Images is also going after Stability AI.

In 2023, the stock photo provider filed a lawsuit alleging Stability AI copied 12 million of its photos to train its generative AI model.

Getty licenses its photos for a fee, which, according to the lawsuit, the billion-dollar AI company never paid.

The outcome of such cases could produce massive changes to the way AI art and photo generators operate.

15. A set of 10 copyright best practices has been proposed.

(Source: Houston Law Review)

To tackle the issue of AI copyright infringement, Matthew Sag has proposed a set of 10 best practices.

These include programming models to learn abstractions rather than specific details and filtering content that is too similar to existing works. Moreover, records of training data that involve copyrighted material should be kept.

Scamming and Criminal AI Misuse Stats

From catfishing to ransom calls, scammers and criminals misuse AI in increasingly scary ways. These stats and facts paint a future of ever-increasing criminal sophistication:

16. 25% of people have experienced AI voice cloning scams.

(Source: McAfee)

Cybercriminals can use AI to clone people’s voices and then use them in phone scams. In a 2023 survey of 7,000 people, one in four said they had experienced a voice scam firsthand or knew someone who had.

More worrying, 70% of those surveyed said they weren’t confident they could tell a cloned voice from the real thing.

17. AI voice cloning scams steal between $500 and $15,000.

(Source: McAfee)

77% of those victims lost money, and successful AI voice cloning scams aren’t after small change.

The survey notes that 36% of targets lost between $500 and $3,000. Some 7% were conned out of between $5,000 and $15,000.

18. 61% of cybersecurity leaders are concerned about AI-drafted phishing emails.

(Source: Egress)

It’s not just modern and elaborate voice cloning that criminals are misusing. Traditional phishing emails are also an increasing concern.

In 2024, 61% of cybersecurity leaders said the use of chatbots in phishing keeps them awake at night, perhaps because AI can produce accurate text quickly.

Furthermore, 52% think AI could aid supply chain compromises, and 47% worry it may help with account takeovers.

19. AI can help scammers be more convincing.

(Source: Which?)

We’ve all seen the stereotypical email scam of a wealthy prince offering a small fortune if you just send some money to help them unblock a larger transfer. Replete with grammatical mistakes and inconsistencies, these scams are now typically buried in the spam folder.

However, AI chatbots can clean up scam messages written in poor English, making them more convincing.

Although doing so breaks the chatbots’ terms of use, Which? was able to use ChatGPT and Bard to produce legitimate-looking messages posing as PayPal and delivery services.

20. 78% of people open AI-written phishing emails.

(Source: SoSafe)

Further demonstrating how convincing AI-produced scams can be, one study found that recipients opened nearly 80% of AI-written phishing emails.

Although the study found similar results for human-written scams, interaction rates were sometimes higher for the AI-generated emails.

Nearly two-thirds of individuals were deceived into divulging private details in online forms after clicking malicious links.

21. Creating phishing emails is 40% quicker with AI.

(Source: SoSafe)

Research also suggests that AI not only makes scams look more convincing but also produces them faster.

Phishing emails are created 40% faster with AI, so in a numbers game, cybercriminals can scale up their operations and succeed more often.

22. Phishing emails have risen 1,265% since ChatGPT was launched.

(Source: SlashNext)

While correlation doesn’t necessarily imply causation, AI and phishing go hand in hand. Since ChatGPT launched, there has been a staggering 1,265% increase in the number of phishing emails sent.

23. Cybercriminals use their own AI tools like WormGPT and FraudGPT.

(Source: SlashNext – WormGPT, KrebsOnSecurity)

Public AI tools like ChatGPT have their place in the cybercrime world. However, to skirt restrictions, criminals have developed their own tools like WormGPT and FraudGPT.

Evidence suggests WormGPT has been used in business email compromise (BEC) attacks. It can also write malicious code for malware.

Access to WormGPT is sold via a channel on the encrypted messaging app Telegram.

24. More than 200 AI hacking services are available on the Dark Web.

(Source: Indiana University via the WSJ)

Many other malicious large language models exist. Research from Indiana University found more than 200 such services on the dark web, both for sale and free.

The first of its kind emerged just months after ChatGPT itself went live in 2022.

One common technique, known as prompt injection, is used to bypass the restrictions of popular AI chatbots.

25. AI has detected thousands of malicious AI emails.

(Source: WSJ)

In some ironic good news, firms like Abnormal Security are using AI to detect AI-generated malicious emails. The company claims to have detected thousands since 2023 and to have blocked twice as many personalized email attacks over the same period.

26. 39% of Indians found online dating matches were scammers.

(Source: McAfee – India)

Cybercriminals use romance as one way to find victims, and recent research in India revealed that 39% of Indians surveyed had found their online dating matches to be scammers.

From conventional fake profile pictures to AI-generated photos and messages, the study found romance scammers to be rife on dating and social media apps.

27. 77% of surveyed Indians believe they’ve interacted with AI-generated profiles.

(Source: McAfee – India)

The study of 7,000 people revealed that 77% of Indian respondents had come across fake AI dating profiles and photos.

Furthermore, of those who responded to potential love interests, 26% discovered them to be some form of AI bot.

However, the research also suggests a culture that may itself be embracing AI-assisted catfishing.

28. Over 80% of Indians believe AI-generated content garners better responses.

(Source: McAfee – India, Oliver Wyman Forum)

The same research found that many Indians are using AI to boost their own desirability in the online dating realm, but not necessarily for scamming.

65% of Indians have used generative AI to create or augment photos and messages on a dating app.

And it’s working. 81% say AI-generated messages elicit more engagement than their own natural messages.

In fact, 56% planned to use chatbots to craft better messages for their partners on Valentine’s Day in 2024.

Other research suggests 28% of people believe AI can capture the depth of real human emotion.

However, there’s a catch: 60% said they would feel hurt and offended if they received an AI-written message from a Valentine.

29. One ‘Tinder Swindler’ scammed roughly $10 million from his female victims.

(Source: Fortune)

One of the most high-profile dating scammers was Shimon Hayut. Under the alias Simon Leviev, he scammed women out of an estimated $10 million using dating apps, doctored media, and other trickery.

Yet after he featured in the popular Netflix documentary The Tinder Swindler, the tables turned: he was conned out of $7,000 on social media in 2022.

Reports state that someone on Instagram posed as a couple with ties to Meta and managed to get him to transfer money via good old PayPal.

AI Fake News and Deepfakes

Fake news and misinformation online are nothing new. However, with the power of AI, such content is becoming easier to spread, and it is getting harder to tell real, false, and deepfake content apart.

30. Deepfakes increased 10 times in 2023.

(Source: Sumsub)

As AI technology burst into the mainstream, so did deepfakes. Data suggests the number of deepfakes detected rose tenfold in 2023, and the number is still increasing this year.

By industry, cryptocurrency-related deepfakes made up 88% of all detections.

By region, North America experienced a 1,740% increase in deepfake fraud.

31. AI-generated fake news sites increased by more than 1,000% in 2023.

(Source: NewsGuard via the Washington Post)

Since May 2023, the number of websites hosting AI-generated, fake news-style articles has increased by more than 1,000%, according to fact checker NewsGuard.

Based on its criteria, these AI-powered misinformation websites skyrocketed from 49 to 600 over that period.
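As a quick sanity check, growing from 49 to 600 sites works out to an increase of a little over 1,000%. Below is a minimal Python sketch of the standard percentage-change formula, (after - before) / before × 100, applied to those site counts; the helper name `percent_increase` is our own and is not taken from NewsGuard’s methodology.

```python
def percent_increase(before: float, after: float) -> float:
    """Percentage change from `before` to `after` (positive means growth)."""
    if before == 0:
        raise ValueError("percentage change is undefined when the starting value is 0")
    return (after - before) / before * 100


# Site counts as reported in this section: 49 sites growing to 600.
growth = percent_increase(49, 600)
print(f"{growth:,.0f}% increase")  # prints "1,124% increase"
```

In other words, the number of sites grew to more than 12 times its starting count.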

Whether it’s financially or politically motivated, AI is now at the forefront of fake stories, widely shared on social media.

32. Canada is the most worried about AI fake news.

(Source: Ipsos Global Advisor)

In a survey of 21,816 citizens in 29 countries, 65% of Canadians were worried that AI would make fake news worse.

Americans were less worried, at 56%. One reason given for Canada’s concern is a decline in the number of local news outlets, leaving people to turn to lesser-known sources of information.

33. 74% think AI will make it easier to generate realistic fake news and images.

(Source: Ipsos Global Advisor)

Across all countries surveyed, 74% felt AI is making it easier to create realistic fake news and images, and harder to tell them apart from real ones.

Indonesians felt most strongly about the issue (89%), while Germans (64%) were the least concerned.

34. 56% of people can’t tell if an image is real or AI-generated.

(Source: Oliver Wyman Forum)

Research suggests more than half of people can’t distinguish AI-generated images from real ones.

35. An AI image was in Facebook’s top 20 most-viewed posts in Q3 2023.

(Source: Misinfo Review)

Whether we believe them or not, AI images are everywhere. In the third quarter of 2023, one AI image garnered 40 million views and over 1.9 million engagements, putting it among the 20 most-viewed posts for the period.

36. 125 AI image-heavy Facebook pages averaged 146,681 followers each.

(Source: Misinfo Review)

The 125 Facebook pages that each posted at least 50 AI-generated images during Q3 2023 had an average of 146,681 followers.

This wasn’t just harmless art: researchers classified many of the pages as spammers, scammers, and engagement farms.

Altogether, the images were viewed hundreds of millions of times. And this is only a small subset of such pages on Facebook.

37. 60% of consumers have seen a deepfake in the past year.

(Source: Jumio)

According to a survey of over 8,000 adult consumers, 60% had encountered deepfake content in the past year. Another 22% were unsure, and only 15% said they had never seen a deepfake.

Of course, a high-quality deepfake can go unnoticed, so people may have seen one without realizing it.

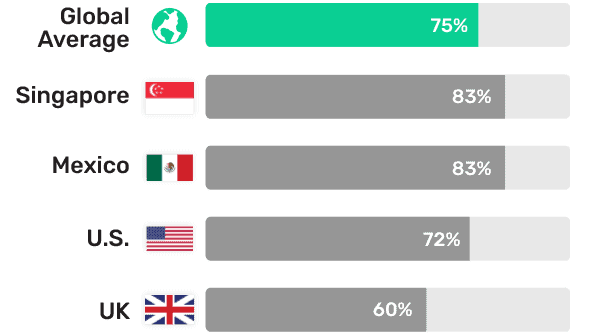

38. 75% worry deepfakes could influence elections.

(Source: Jumio)

In the United States, 72% of respondents feared AI deepfakes could influence upcoming elections.

The biggest worry comes from Singapore and Mexico (83% each), while the UK is the least worried about election interference, at 60%.

UK respondents also felt the least capable of spotting a deepfake of a politician (33%).

Singapore was the most confident at 60%, which may suggest fear correlates with individual awareness of deepfakes.

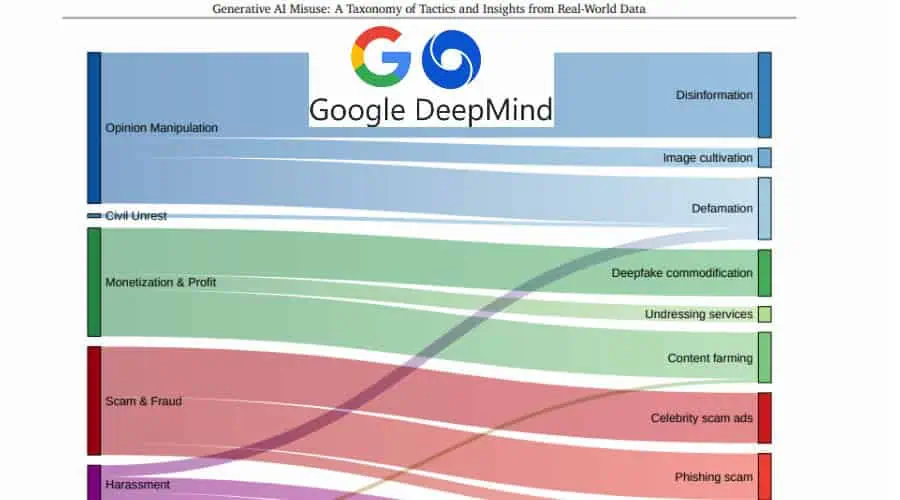

39. Political deepfakes are the most common.

(Source: DeepMind)

The most common misuse of AI deepfakes is in the political sphere according to research from DeepMind and Google’s Jigsaw.

More precisely, 27% of all reported cases tried to distort the public’s perception of political realities.

The top 3 strategies are disinformation, cultivating an image, and defamation.

After opinion manipulation, the second and third most common goals of deepfakes were monetization and profit, followed by scams and fraud.

40. Just 38% of students have learned how to spot AI content.

(Source: Center for Democracy & Technology)

Education is one way to tackle the impact of deepfakes, but schools may be falling short. Just 38% of UK school students say they’ve been taught how to spot AI-generated images, text, and videos.

Meanwhile, 71% of students themselves expressed a desire for guidance from their educators.

Adult, Criminal, and Inappropriate AI Content

The adult industry has always been at the forefront of technology, and AI is just the next step. But what happens when users pay for content they think is real? Or worse, when people deepfake others without consent?

41. Over 143,000 NSFW deepfake videos were uploaded in 2023.

(Source: Channel 4 News via The Guardian)

Crude fake images of celebrities superimposed onto adult stars have been around for decades, but AI has increased their quality and popularity, as well as introducing videos.

As AI technology rapidly evolved in 2022 and 2023, 143,733 new deepfake videos appeared on the web during the first three quarters of 2023.

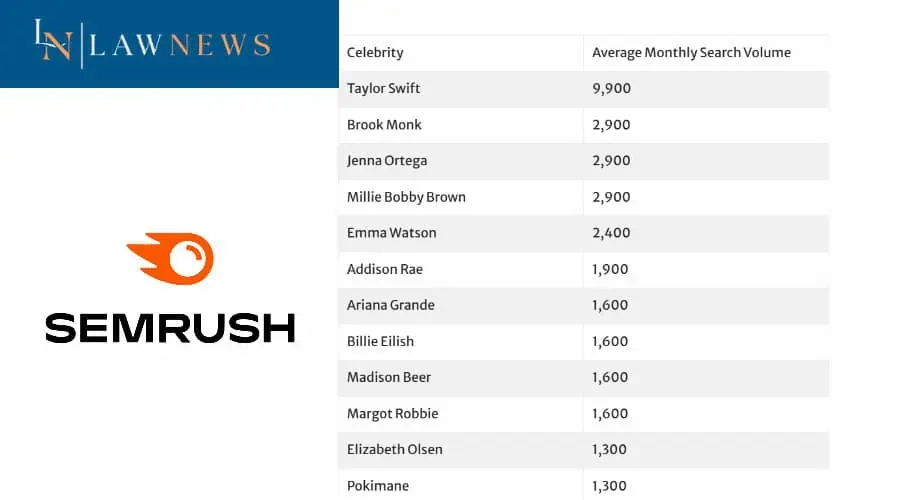

42. Searches for NSFW deepfakes have increased by 5,800% since 2019.

(Source: SEMRush via Law News)

One way to see the rise of adult deepfakes is by monitoring search engine volume. According to an analysis using SEMRush, searches for such terms have increased by 5,800% since 2019, meaning search volume is now roughly 59 times its 2019 level.
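Percentage increases and “times” multipliers are easy to mix up. An increase of X% means the new value is (1 + X/100) times the old one, so a 5,800% rise puts search volume at 59 times its 2019 level. A minimal Python sketch of the conversion in both directions (the function names are our own, purely illustrative):

```python
def multiplier_from_increase(percent: float) -> float:
    """Convert a percentage increase into a 'new value is N times the old' factor."""
    return 1 + percent / 100


def increase_from_multiplier(factor: float) -> float:
    """Convert an 'N times the old value' factor back into a percentage increase."""
    return (factor - 1) * 100


print(multiplier_from_increase(5_800))  # 59.0
print(increase_from_multiplier(10.0))   # 900.0: a tenfold rise is a 900% increase
```

The same conversion explains why “rose 10 times” and “increased by 1,000%” are not quite the same claim.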

43. 4,000 celebrities have been victims of NSFW deepfakes.

(Source: Channel 4 News via The Guardian)

Research on the most popular adult deepfake websites found the likeness of approximately 4,000 celebrities generated into explicit images and videos.

5 sites received over 100 million views over a three-month period in 2023.

Many countries are now proposing laws to make such content illegal.

44. 96% of deepfake imagery is adult content.

(Source: Deeptrace)

As early as 2019, 96% of deepfakes online were adult in nature, and 100% of the people depicted in them were women (mostly celebrities) who hadn’t given consent.

At the other end of the scale, on YouTube, where nudity is prohibited, 61% of deepfake subjects were male and the videos were “commentary-based.”

45. Taylor Swift deepfakes went viral in 2024 with over 27 million views.

(Source: NBC News)

In January 2024, Taylor Swift became the most viewed subject of deepfakes when NSFW AI images and videos spread online, mostly on Twitter/X.

In 19 hours, the primary content received over 260,000 likes before the platform removed the material and temporarily blocked searches for her name.

Some deepfakes also depicted Taylor Swift as a Trump supporter.

46. Jenna Ortega is the 2nd most searched deepfake celebrity in the UK.

(Source: SEMRush via Law News)

Based on UK search data, actress Jenna Ortega is the most searched celebrity for adult deepfakes after Taylor Swift.

She’s tied with influencer Brooke Monk and fellow actress Millie Bobby Brown.

The top 20 list ranges from singer Billie Eilish to streamer Pokimane, and every name on it is a woman.

47. Fake AI models make thousands monthly on OnlyFans and similar platforms.

(Source: Supercreator, Forbes)

OnlyFans and similar platforms are known for their adult content but now AI means real people don’t have to bare it all for the camera.

Personas like TheRRRealist and Aitana López make thousands a month.

While the former is open about being fake, the latter has been coyer, raising ethical questions. And they aren’t the only ones.

Even AI influencers outside the adult space, such as Olivia C (run by a two-person team), make a living through endorsements and ads.

48. 1,500 AI models competed in a beauty pageant.

(Source: Wired)

The World AI Creator Awards (WAICA) launched this summer via AI influencer platform Fanvue. One part of it was an AI beauty pageant, which netted Kenza Layli (or rather her anonymous creator) $10,000.

Over 1,500 AI creations, along with elaborate backstories and lifestyles, entered the contest. The Moroccan Kenza persona had a positive message of empowering women and diversity, but deepfake misuse isn’t always so “uplifting.”

49. 6,000 people protested a widespread deepfake scandal targeting teachers and students.

(Source: BBC, The Guardian, Korea Times, CNN)

A series of school deepfake scandals emerged in South Korea in 2024, in which hundreds of thousands of Telegram users were sharing NSFW deepfakes of female teachers and students.

More than 60 victims were identified whose AI-generated imagery had spread to public social media.

The scandal led to 6,000 people protesting in Seoul. Eventually, 800 crimes were recorded, several resulting in convictions, and new laws were passed.

The cases implicated around 200 schools, with most victims and offenders being teenagers.

50. 4 million people a month use deepfake ‘nudify’ apps on women and children.

(Source: Wired – Nudify)

Beyond celebrities, deepfake AI misuse has much darker implications.

In 2024, an investigation of AI-powered apps that “undress” photos of women and children found 50 bots on Telegram for creating deepfakes of varying sophistication, with around 4 million monthly users combined. Two alone had more than 400,000 users.

51. Over 20,000 AI-generated CSAM images were posted to a single Dark Web forum in a month.

(Source: IWF)

Perhaps the most shocking of these AI misuse statistics comes from a 2023 report by the Internet Watch Foundation (IWF), a safeguarding organization. Its investigators discovered more than 20,000 AI-generated child sexual abuse material (CSAM) images posted to just one forum on the dark web over a month.

The IWF notes that this has increased in 2024 and that such AI images have also become more common on the clear web.

Cartoons, drawings, animations, and pseudo-photographs of this nature are illegal in the UK.

Wrap Up

Despite the adoption of AI across many fields, it’s still very much the Wild West. What does society deem appropriate, and should there be more regulation?

From students generating assignments and risky use in the workplace to realistic scams and illegal deepfakes, AI has many concerning applications.

As explored in these AI misuse statistics, it might be time to rethink where we want this technology to go.

Are you worried about its misuse? Let me know in the comments below!

Sources:

1. Turnitin

2. Center for Democracy & Technology

3. CNN World

4. Forrester Consulting via Workday

5. ISE

6. Workable

7. Book and Artist

8. Data Privacy and Security Insider

9. Reuters

10. McAfee

11. Egress

12. SoSafe

13. SlashNext

14. KrebsOnSecurity

15. Indiana University via the WSJ

16. WSJ

17. McAfee – India

18. Fortune

19. Sumsub

20. NewsGuard via the Washington Post

21. Misinfo Review

22. Jumio

23. DeepMind

24. Center for Democracy & Technology

25. Channel 4 News via The Guardian

26. Deeptrace

27. NBC News

28. NBC News 2

29. Supercreator

30. Forbes

31. Wired

32. BBC

33. The Guardian

34. BBC 2

35. Korea Times

36. CNN

37. Wired – Nudify

38. IWF

Read our disclosure page to find out how you can help MSPoweruser sustain the editorial team.
