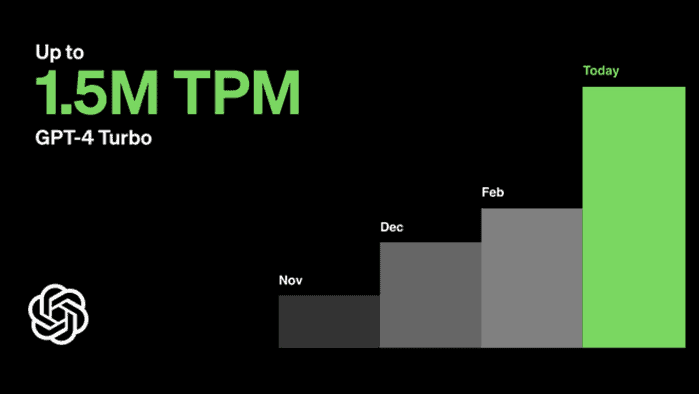

OpenAI doubles GPT-4 Turbo rate limits, now up to 1.5 million tokens per minute

The model was first launched last year.

Key notes

- OpenAI has increased GPT-4 Turbo rate limits twofold universally.

- It now provides a maximum of 1.5 million tokens per minute (TPM).

- Rate limits, imposed at the organization level, are restrictions placed on API access to prevent abuse.

It’s been a while since OpenAI first launched GPT-4 Turbo at its DevDay event in November last year. At that time, the Microsoft-backed company said that the model supports a 128K-token context window, with input tokens 3x cheaper and output tokens 2x cheaper than GPT-4.

Now, it seems like things are changing. The company has increased GPT-4 Turbo rate limits twofold universally, eliminated daily limits entirely, and now provides a maximum of 1.5 million tokens per minute (TPM).

If you’re not familiar, rate limits are restrictions on API access, imposed at the organization level, that cap the number of requests and tokens allowed within a specified timeframe. They prevent malicious overloading of the API, ensure equitable access for all users, and maintain smooth performance during high-demand periods.
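To make the idea of a tokens-per-minute budget concrete, here is a minimal client-side sketch of a sliding-window limiter. It is only an illustration: the `TokenRateLimiter` class, its method names, and its parameters are hypothetical and not part of any OpenAI SDK, and it says nothing about how OpenAI enforces limits on its own servers.

```python
import time
from collections import deque


class TokenRateLimiter:
    """Client-side sliding-window limiter for a tokens-per-minute (TPM) budget.

    Hypothetical helper for illustration; not part of any OpenAI SDK.
    """

    def __init__(self, tokens_per_minute, window_seconds=60.0, clock=time.monotonic):
        self.limit = tokens_per_minute
        self.window = window_seconds
        self.clock = clock      # injectable so the limiter can be tested
        self.events = deque()   # (timestamp, tokens) pairs inside the window
        self.used = 0           # running total of tokens inside the window

    def _expire(self, now):
        # Drop requests that have aged out of the window.
        while self.events and now - self.events[0][0] >= self.window:
            _, tokens = self.events.popleft()
            self.used -= tokens

    def try_acquire(self, tokens):
        """Record the request and return True if it fits in the budget."""
        if tokens > self.limit:
            raise ValueError("request exceeds the per-minute budget on its own")
        now = self.clock()
        self._expire(now)
        if self.used + tokens > self.limit:
            return False
        self.events.append((now, tokens))
        self.used += tokens
        return True

    def seconds_until_available(self, tokens):
        """Return how long to wait before `tokens` would fit (0.0 if it fits now)."""
        now = self.clock()
        self._expire(now)
        if self.used + tokens <= self.limit:
            return 0.0
        freed = 0
        # Walk the oldest requests until enough budget would be released.
        for timestamp, spent in self.events:
            freed += spent
            if self.used - freed + tokens <= self.limit:
                return max(0.0, timestamp + self.window - now)
        return self.window
```

A caller might create `TokenRateLimiter(1_500_000)` to mirror the maximum TPM figure quoted above, check `try_acquire(estimated_tokens)` before each request, and sleep for `seconds_until_available(estimated_tokens)` when it returns `False`. The API still applies its own limits regardless, so a real client would also need to handle HTTP 429 responses.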

Each usage tier comes with its own monthly usage limit. OpenAI offers at least five usage tiers, from the free tier, which is limited to $100 / month, all the way to tier 5 at $10,000 / month for larger organizations.

You can also tap into the power of image analysis with GPT-4 Turbo with Vision through Azure OpenAI. This advanced multimodal AI model combines the capabilities of GPT-4 Turbo with the ability to analyze images, allowing you to ask questions about pictures and receive insightful textual responses.
