OpenAI announces several new fine-tuning API features and expanded Custom Model program

Read our disclosure page to find out how you can help MSPoweruser sustain the editorial team.

Key notes

- OpenAI expands its fine-tuning capabilities with new API features, assisted fine-tuning, and custom-trained models, letting organizations tailor language models to their specific needs.

Last year, OpenAI launched its self-serve fine-tuning API for GPT-3.5. Fine-tuning can help an LLM better understand content and can augment its existing knowledge and capabilities for a specific task. Since launch, thousands of organizations have used the fine-tuning API to generate better code, summarize text in a specific format, and more.

Today, OpenAI announced several new features that give developers more control over fine-tuning:

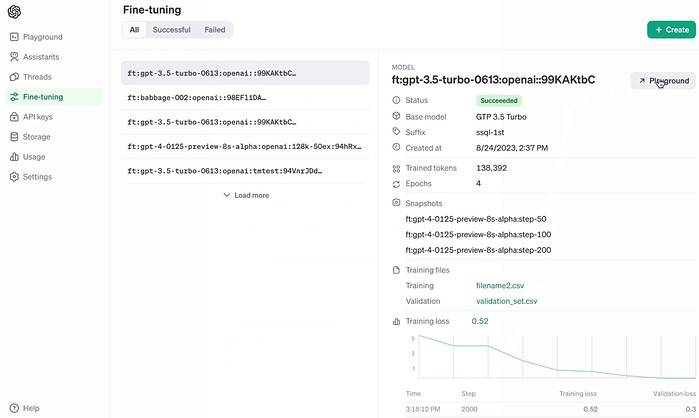

- Automatically saving a full fine-tuned model checkpoint during each training epoch, which reduces the need for subsequent retraining, especially in cases of overfitting

- A new side-by-side Playground UI for comparing model quality and performance, allowing human evaluation of the outputs of multiple models or fine-tune snapshots against a single prompt

- Support for integrations with third-party platforms, letting developers share detailed fine-tuning data with the rest of their stack

- Metrics computed over the entire validation dataset (previously a sampled batch) at the end of each epoch, giving better visibility into model performance (token loss and accuracy) and feedback on how well the model generalizes

- The ability to configure available hyperparameters from the Dashboard (rather than only through the API or SDK)

- Various improvements to the fine-tuning Dashboard, including more detailed training metrics and the ability to rerun jobs from previous configurations

OpenAI also announced an assisted fine-tuning offering as part of its Custom Model program. With assisted fine-tuning, a dedicated group of OpenAI researchers uses techniques beyond the fine-tuning API, such as additional hyperparameters and various parameter-efficient fine-tuning (PEFT) methods, to train and optimize models for a specific domain. The offering targets organizations that need help building efficient training data pipelines and evaluation systems, or that need bespoke parameters and methods to improve model performance in their domain.

Beyond assisted fine-tuning, OpenAI will also work with organizations to train a purpose-built model from scratch that understands their business, industry, or domain.

“With OpenAI, most organizations can see meaningful results quickly with the self-serve fine-tuning API. For any organizations that need to more deeply fine-tune their models or imbue new, domain-specific knowledge into the model, our Custom Model programs can help,” the OpenAI team wrote.
