Microsoft takes legal action against ten people for using DALL-E AI for harmful purposes

The company filed the complaint in a Virginia court

Key notes

- Microsoft is suing ten cybercriminals for exploiting AI services to create harmful content and resell access.

- The group used custom software to bypass safety filters, generating harmful images with stolen API keys.

- After discovering the activity, Microsoft revoked the group’s access.

Microsoft’s Digital Crimes Unit is taking legal action against a foreign-based group that allegedly exploited stolen customer credentials to access and alter AI services, then resold that access to other bad actors.

In a new report, the Redmond tech giant says it has filed a complaint in a Virginia court against ten cybercriminals who “intentionally develop tools” to “create offensive and harmful content.”

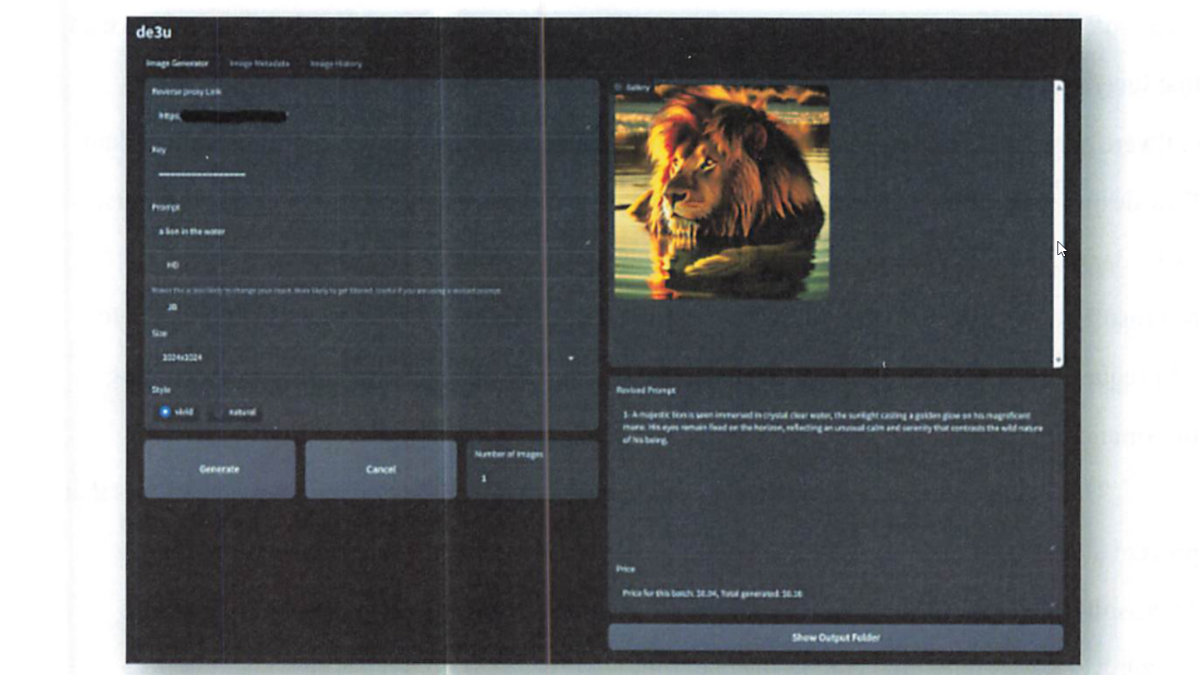

The cybercriminals allegedly used custom software, including a tool called de3u, to reverse-engineer and bypass safety filters, creating thousands of harmful images with DALL-E AI while stripping metadata to evade detection. They’re also accused of running a hacking-as-a-service scheme: stealing API keys from US companies (including Microsoft) and selling that access to malicious users.

Once Microsoft discovered this activity on its Azure OpenAI Service, it revoked the criminals’ access, added new security measures, and strengthened protections against future attacks.

The AI boom is well under way, and with it have come persistent concerns that cybercriminals will exploit the technology to produce harmful and illicit content.

In 2024, Microsoft called on Congress to pass a comprehensive deepfake fraud statute, require tools that label synthetic content, and update laws to tackle AI-generated abuse.

Microsoft is also part of the Coalition for Content Provenance and Authenticity (C2PA), which develops standards for authenticating AI-generated content. Tools that implement the C2PA standard attach provenance metadata to content, which is one reason AI labels (worded differently on each platform) now appear across services such as Instagram and Facebook.

Read our disclosure page to find out how you can help MSPoweruser sustain the editorial team.
