Microsoft Edge to run Phi-3 mini model locally on the device, similar to Chrome

That means you can run the model without an internet connection


Key notes

- Microsoft might let you run the Phi-3 mini model locally in Edge.

- An experimental flag hints at offline natural language processing.

- Phi-3 mini, launched in April, offers powerful, cost-effective AI.

Microsoft may soon let you run the Phi-3 mini model locally on your device in Edge, its popular browser, not long after Google tested a local integration of Gemini Nano in Chrome.

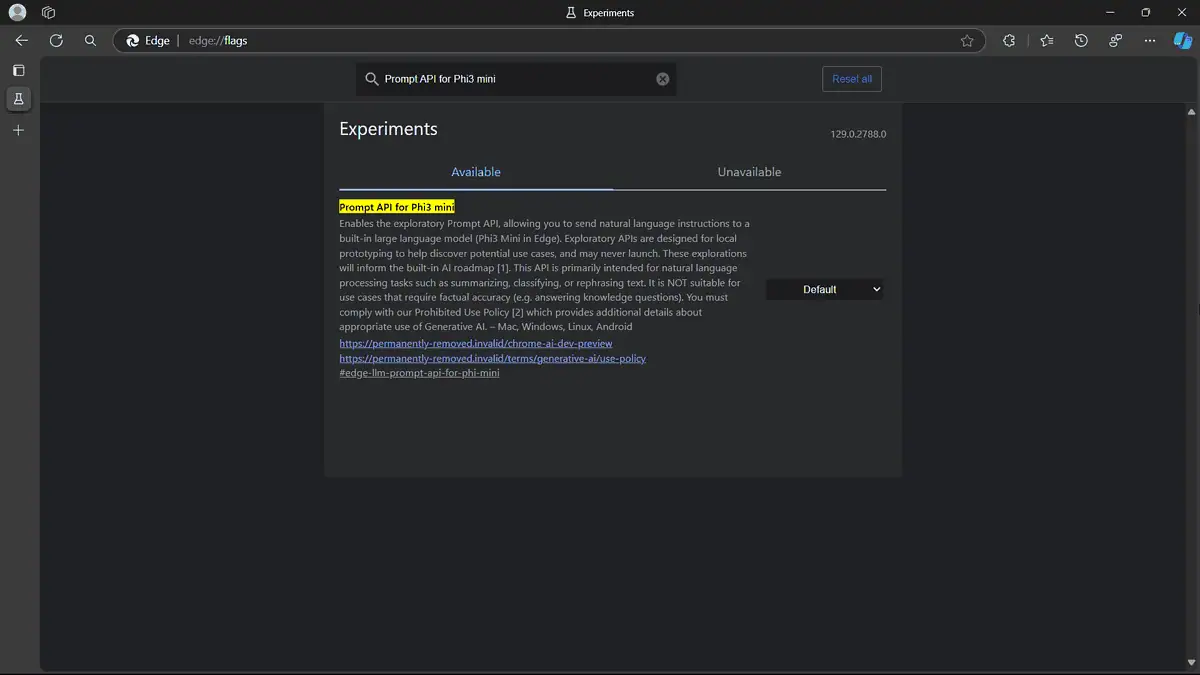

A find by trusted browser enthusiast @Leopeva64 reveals a new flag called “Prompt API for Phi3 mini” that appears to be tied to the feature. According to the flag’s description, the exploratory Prompt API lets you test natural language tasks with the Phi-3 mini model but isn’t meant for fact-checking, and the flag is listed for Mac, Windows, Linux, and Android.

We’ve independently verified it, and it checked out. Here’s what it looks like:

“Exploratory APIs are designed for local prototyping to help discover potential use cases, and may never launch … This API is primarily intended for natural language processing tasks such as summarizing, classifying, or rephrasing text,” the flag reads.

That means you can run the model without an internet connection. You can take the feature out for a spin by downloading Edge Canary and activating the flag, but you may still hit a few hiccups here and there.

The enthusiast also points out that a similar flag, this one for Gemini Nano, is available in Chrome Canary, the popular browser’s experimental channel. It’s crazy fast, and it offers offline AI processing, though it’s not yet available in the public version.

Despite its small size at 3.8B parameters, the Phi-3 mini model holds its own against its competitors. It’s designed to offer powerful AI capabilities while being more cost-effective and suitable for local, offline use.
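To put the 3.8B-parameter figure in perspective, here is a rough back-of-the-envelope sketch of how much space the model's weights alone might take at different numeric precisions. The bytes-per-parameter values are our own illustrative assumptions, not figures from Microsoft or from the flag, and the estimate ignores runtime overhead such as activations and caches.

```python
# Rough estimate of weight storage for a 3.8B-parameter model.
# The parameter count comes from the article; the precisions below
# are assumed for illustration and say nothing about what Edge ships.
PARAMS = 3.8e9

# Assumed bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_size_gb(params: float, bytes_per_param: float) -> float:
    """Return the size of the weights alone in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


# Print one estimate per assumed precision.
for name, width in BYTES_PER_PARAM.items():
    print(f"{name}: ~{weight_size_gb(PARAMS, width):.1f} GB")
```

Under these assumptions, a 4-bit version of the weights would come in at roughly 1.9 GB, which helps explain why a model of this size is a plausible candidate for running on a laptop or phone.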

Microsoft launched the smaller model back in April 2024 as part of the Phi-3 family. The model is available on platforms like Azure, Hugging Face, and Ollama, and builds on the capabilities of its predecessor, Phi-2, with better performance in coding and reasoning.
