Microsoft announces Project Acoustics for wave-based acoustic simulation

Microsoft has announced Project Acoustics, a new in-development solution for wave-based acoustic simulation.

The new software solution “models wave effects like occlusion, obstruction, portaling and reverberation effects in complex scenes without requiring manual zone markup or CPU intensive raytracing.”

Microsoft describes its audio solution as similar in philosophy to pre-baked static lighting on meshes, which pre-calculates where shadows fall and how light sources illuminate a scene.

According to Microsoft, “Ray-based acoustics methods can check for occlusion using a single source-to-listener ray cast, or drive reverb by estimating local scene volume with a few rays. But these techniques can be unreliable because a pebble occludes as much as a boulder. Rays don’t account for the way sound bends around objects, a phenomenon known as diffraction. Project Acoustics’ simulation captures these effects using a wave-based simulation. The acoustics are more predictable, accurate and seamless.”
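The pebble-versus-boulder problem Microsoft describes can be illustrated with a toy 2D example. This is not Project Acoustics code: the circular obstacles, the `single_ray_occlusion` helper and the obstacle sizes are hypothetical, chosen only to show that a single binary source-to-listener ray cast cannot distinguish a small obstacle from a large one.

```python
import math


def segment_hits_circle(p, q, center, radius):
    """Return True if the segment p->q passes within `radius` of `center`."""
    (px, py), (qx, qy), (cx, cy) = p, q, center
    dx, dy = qx - px, qy - py
    seg_len_sq = dx * dx + dy * dy
    # Project the circle centre onto the segment, clamped to its endpoints.
    t = max(0.0, min(1.0, ((cx - px) * dx + (cy - py) * dy) / seg_len_sq))
    nearest_x, nearest_y = px + t * dx, py + t * dy
    return math.hypot(cx - nearest_x, cy - nearest_y) <= radius


def single_ray_occlusion(source, listener, obstacles):
    """Binary occlusion: 1.0 if any obstacle blocks the one ray, else 0.0."""
    blocked = any(segment_hits_circle(source, listener, c, r) for c, r in obstacles)
    return 1.0 if blocked else 0.0


# Hypothetical scene: source and listener 10 m apart on the x-axis.
source, listener = (0.0, 0.0), (10.0, 0.0)
# Hypothetical obstacles as (centre, radius) in metres.
pebble = [((5.0, 0.0), 0.05)]   # 10 cm across
boulder = [((5.0, 0.0), 2.0)]   # 4 m across

print(single_ray_occlusion(source, listener, pebble))
print(single_ray_occlusion(source, listener, boulder))
```

Both calls print `1.0`: the ray reports the pebble as blocking exactly as much as the boulder. For scale, a 100 Hz tone has a wavelength of roughly 3.4 m (343 m/s ÷ 100 Hz), so in reality that sound bends around the pebble almost unaffected. Capturing that diffraction is what a wave-based simulation adds over a single ray cast.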

The audio middleware is already being introduced into the Unity game engine as a drag-and-drop integration and includes a Unity audio engine plugin. Developers can augment the standard Unity audio source controls by attaching a Project Acoustics C# controls component to each audio object.

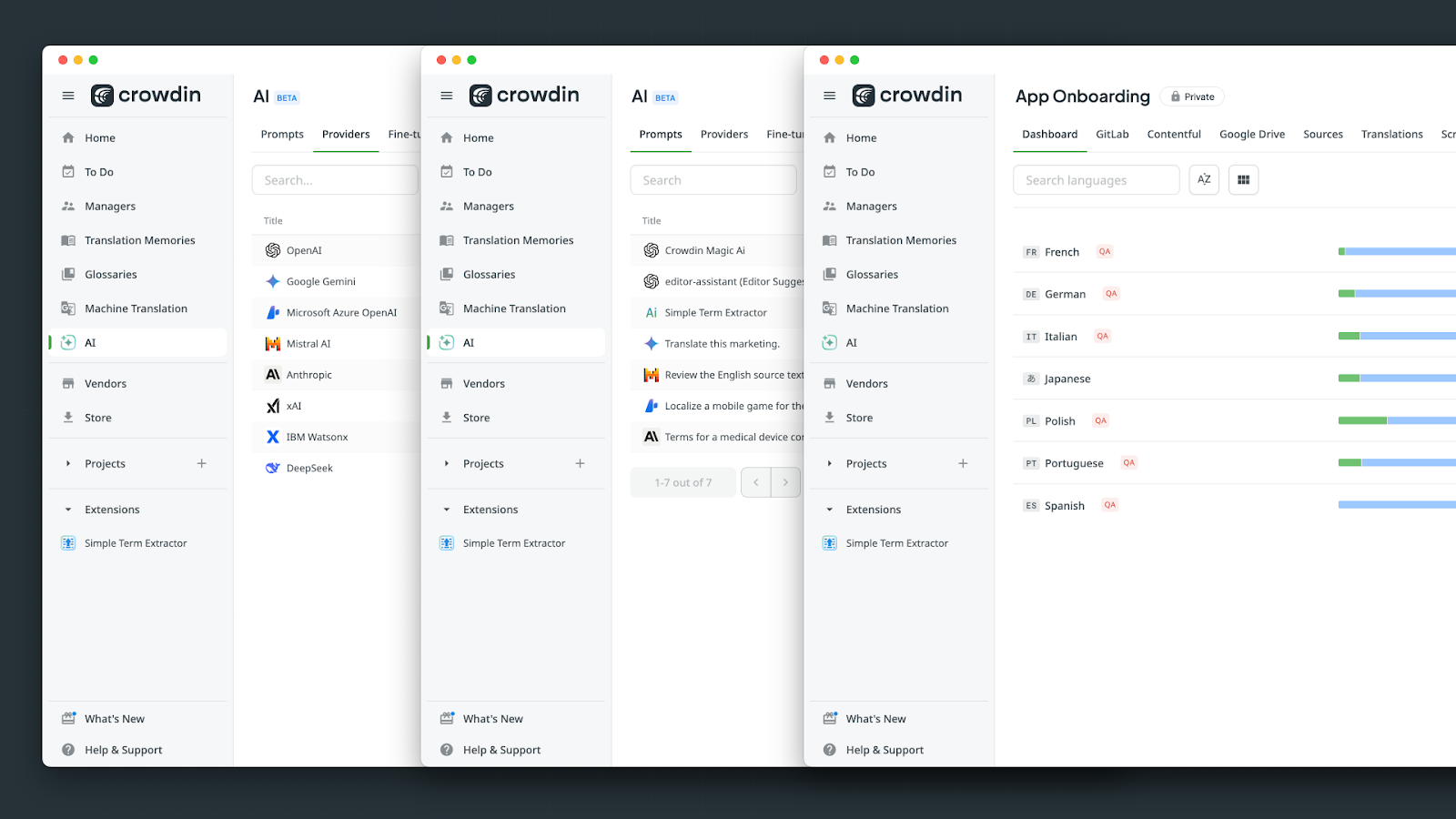

Here is Microsoft’s workflow chart:

Pre-bake: Start by setting up the bake. Select which geometry responds to acoustics (for example, by ignoring light shafts), edit the automatic material assignments, and select navigation areas to guide listener sampling. No manual markup of reverb, portal or room zones is needed.

Bake: An analysis step runs locally, performing voxelization and other geometric analysis of the scene based on the selections above. The results are visualized in the editor so you can verify the scene setup. When you submit the bake, the voxel data is sent to Azure and you get back an acoustics game asset.

Runtime: Load the asset into your level and you're ready to hear the acoustics. Design the acoustics live in the editor using granular per-source controls, which can also be driven from level scripting.

Project Acoustics will be usable on Xbox One, Android, macOS and Windows. Xbox Series X will also benefit from the solution, even more so thanks to its dedicated hardware audio technology.