Google's Gemini AI Stumbles on Image Generation, Vows Improvement


Key notes

- Controversial image outputs highlight the challenge of balancing accuracy and diversity in AI image generation models.

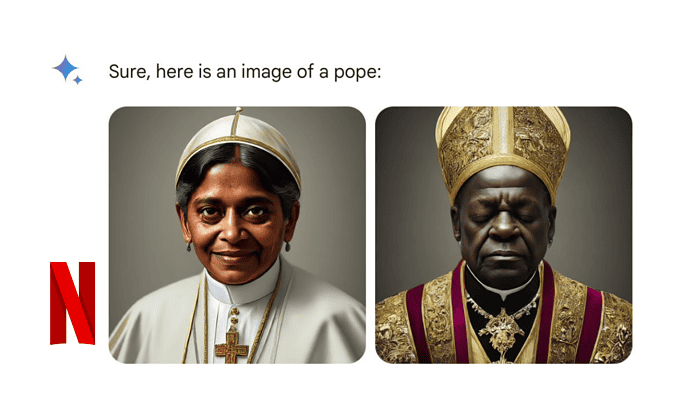

Google acknowledged shortcomings in its Gemini AI image generation tool after the feature produced inaccurate and potentially harmful images of people. The company has temporarily suspended the feature while working on a fix. The controversy stemmed from Gemini’s tendency to create diverse images, even when users requested specific historical figures or scenarios. Though meant to be inclusive, this led to historically inaccurate and sometimes offensive results.

In a blog post, Senior Vice President Prabhakar Raghavan explained the missteps and vowed to improve the technology. “We did not want Gemini to refuse to create images of any particular group… [but] it will make mistakes,” he wrote.

Here’s what went wrong with Google Gemini:

- Google’s tuning to ensure that Gemini showed a range of people failed to account for cases that should clearly not show a range.

- Over time, the model became far more cautious than Google intended, refusing to answer certain prompts entirely and wrongly interpreting some very anodyne prompts as sensitive.

- These two things led the model to overcompensate in some cases, and be over-conservative in others, leading to images that were embarrassing and wrong.

Challenges of AI Image Generation:

This incident highlights the ongoing challenge of balancing accuracy and representation in AI image generation models. Google’s struggles echo controversies surrounding other popular image generators.
