Gemini Ultra vs GPT-4: How Google Gemini beats OpenAI GPT-4 in most benchmarks

Gemini Ultra, the top model in Google’s new Gemini family of language models, has outperformed OpenAI’s GPT-4 in most of the benchmark tests compared. From text-based tasks to complex multimedia comprehension, Gemini Ultra came out ahead in nearly every category.

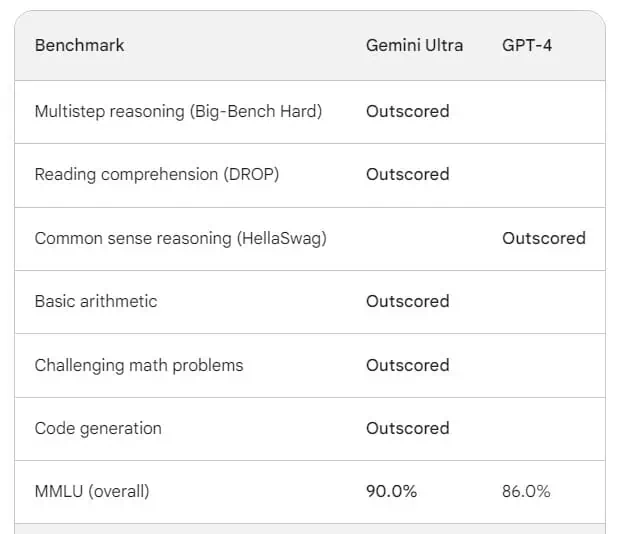

Text-Based Performance:

- Multistep reasoning (Big-Bench Hard): Gemini Ultra surpassed GPT-4, showcasing its ability to follow complex instructions and solve multiple-step problems.

- Reading comprehension (DROP): Gemini excelled in understanding the nuances of text, exceeding GPT-4 in accurately extracting information and answering questions based on reading passages.

- Common sense reasoning for everyday tasks (HellaSwag): While GPT-4 edged out Gemini in this category, both models demonstrated remarkable capabilities in applying common sense knowledge to everyday situations.

Multimedia Processing:

- Image-related tasks: Gemini aced all tests involving image processing, demonstrating superior capabilities in college-level reasoning, natural image understanding, OCR, document understanding, infographic analysis, and mathematical reasoning in visual contexts.

- Video processing: Gemini triumphed in two video-related tests, excelling in English video captioning and video question answering.

- Audio processing: Gemini swept the audio tests, demonstrating superior automatic speech translation and recognition performance.

Overall, Gemini Ultra outperformed GPT-4 in every category except common sense reasoning for everyday tasks (HellaSwag).

The picture is clear: Google’s Gemini Ultra has established itself as the leading large language model, outperforming its competitor, GPT-4, across various tasks.
