This is how Microsoft wants to fight extremist content

The terrorist attack at Al Noor mosque in Christchurch took the lives of 50 innocent people. The attacker, a white supremacist, was able to live stream the entire event on social media, which sparked controversy and raised many questions about the moderation policies of social media networks.

Although its platforms are not as popular as Facebook, Twitter, and YouTube, Microsoft revealed that a small part of its user base had also used them to spread the infamous shooting video. While the company said that it had acted promptly to remove the video, Microsoft stressed that the tech industry would need to come together to find a better solution.

“Ultimately, we need to develop an industrywide approach that will be principled, comprehensive and effective. The best way to pursue this is to take new and concrete steps quickly in ways that build upon what already exists,” Microsoft president Brad Smith wrote in a blog post.

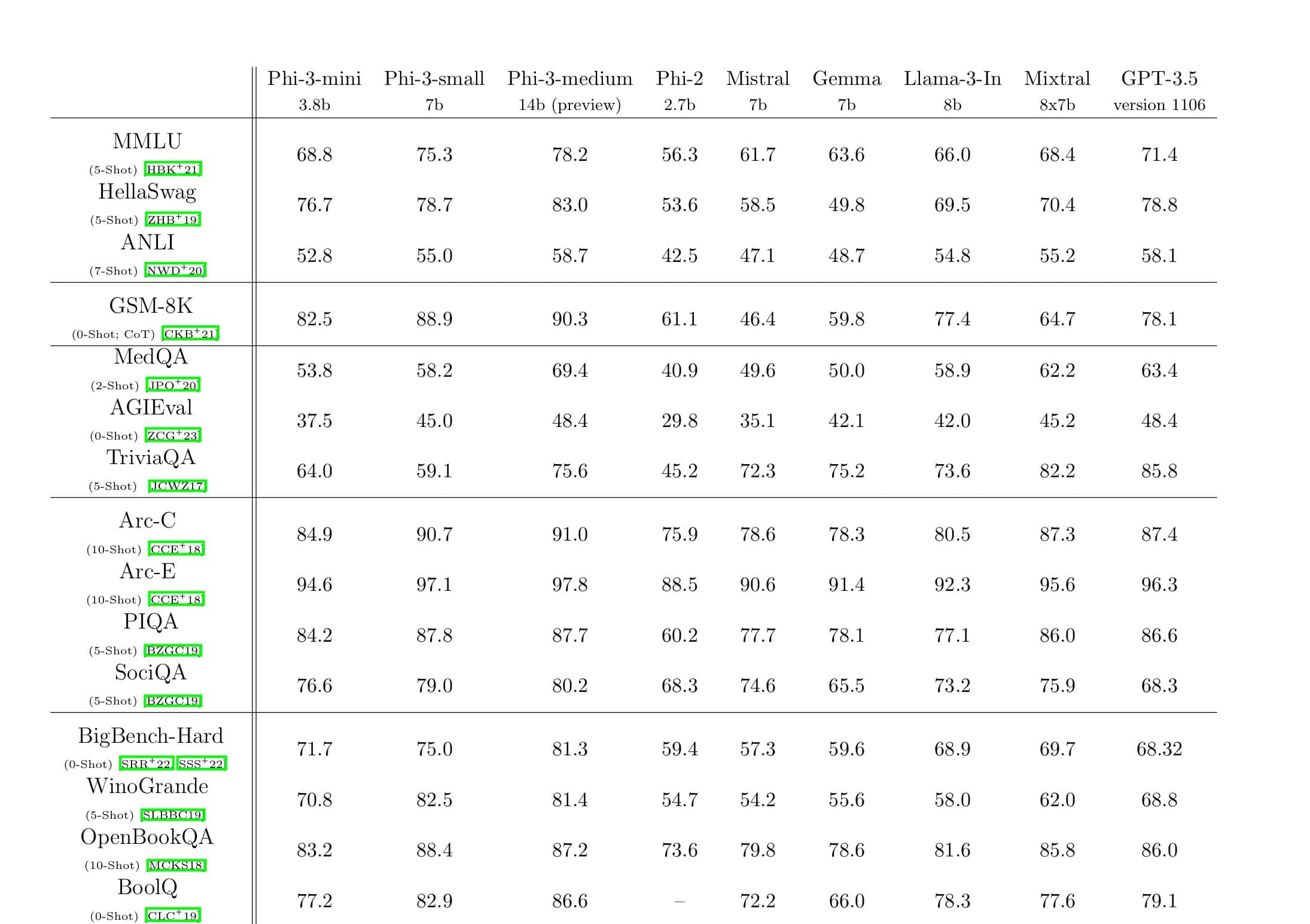

Smith put forward several ideas for stopping the spread of extremist content. He suggests that the tech industry should work together to advance technologies like PhotoDNA, which helps identify known violent content. Companies should also work together to identify and catch edited versions of the same content.

Moreover, Smith wants the sector to create a “major event” protocol, under which technology companies would share information more quickly and directly, which in turn would help each platform and service act faster.

Smith then noted that technology can’t be the solution to everything, and that we need to treat others with dignity and respect each other’s differences.

Microsoft’s statement came days after Facebook admitted that its AI isn’t capable enough to spot extremist content in a live stream, saying there is too little such content to train its AI on.