There's a new approach giving typical cameras 3D capabilities


A team of researchers at Stanford University, in a collaboration between the Laboratory for Integrated Nano-Quantum Systems (LINQS) and ArbabianLab, has devised a way for future cameras to see in 3D (specifically, to see light in three dimensions). The project began with the team's observation that today's light detection and ranging (LiDAR or lidar) systems are inconvenient because of their size.

“Existing lidar systems are big and bulky, but someday, if you want lidar capabilities in millions of autonomous drones or in lightweight robotic vehicles, you’re going to want them to be very small, very energy efficient, and offering high performance,” said Okan Atalar, the first author on the new paper in the journal Nature Communications and a doctoral candidate in electrical engineering at Stanford.

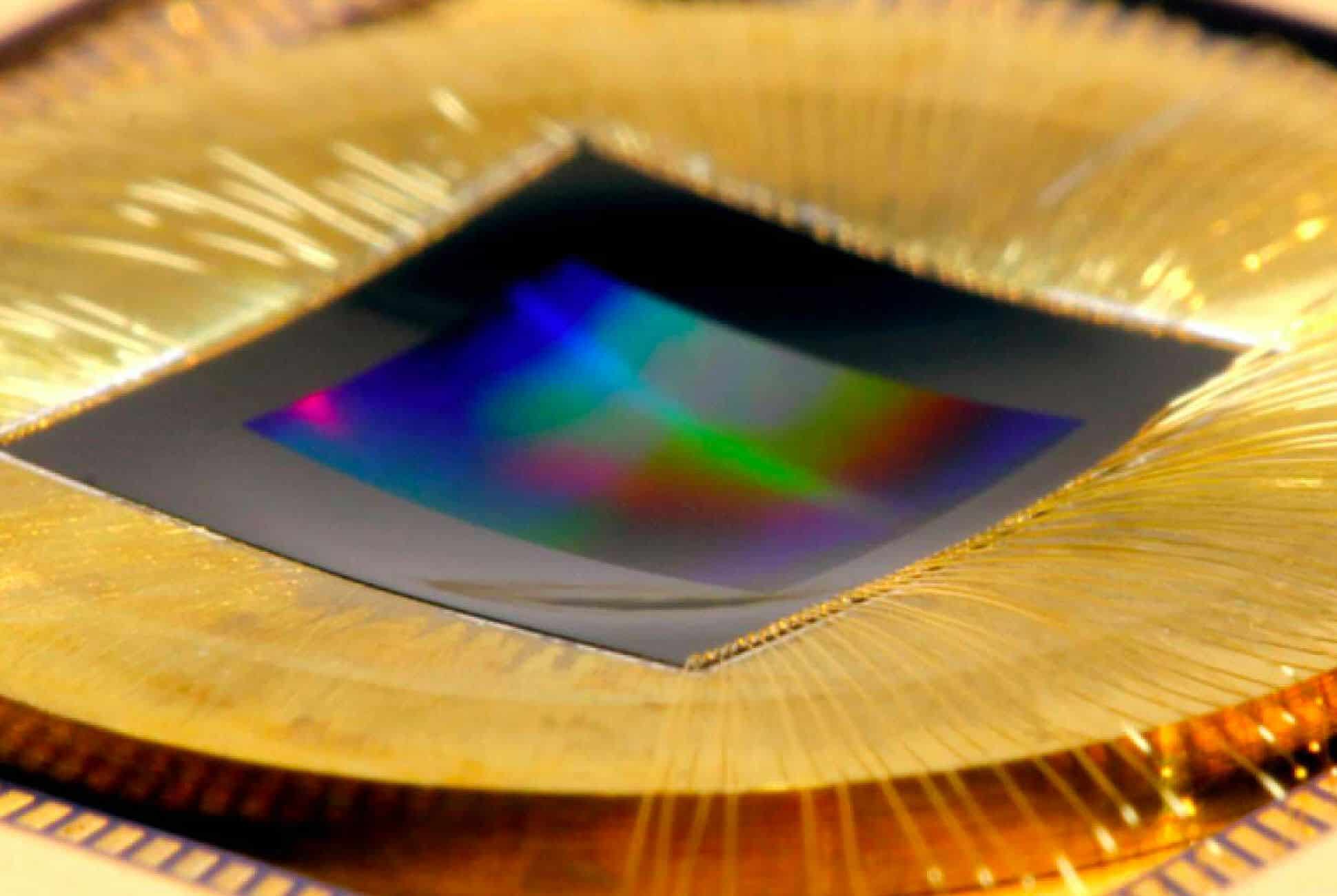

The team then created a compact device designed to be more energy efficient (lidar can consume a lot of power because of the size and number of its components) and a good fit for integration into the cameras of everyday cellphones and digital SLRs. The approach relies on acoustic resonance. It uses a thin wafer of lithium niobate, which the researchers describe as an ideal material because of its electrical, acoustic, and optical properties.

The lithium niobate wafer is coated with two transparent electrodes, forming a simple acoustic modulator. When electricity is applied through the electrodes, the wafer vibrates efficiently at very predictable and controllable frequencies. The vibrating lithium niobate then modulates the light, and a pair of added polarizers turns the light on and off several million times a second.

Modulating light this way is one of the known approaches to adding 3D imaging to standard sensors. As in lidar, it lets the system measure variations in the returning light and calculate distance from them. Existing modulators in other systems can consume a lot of energy, which makes them impractical. With the approach shown by the researchers, it becomes possible to bring 3D imaging to small cameras like the ones on phones and drones. According to the researchers, it could be the foundation of a “standard CMOS lidar” in the future. (CMOS image sensors are almost universally used in smartphones.)
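To make the distance calculation more concrete, here is a minimal Python sketch of the textbook relation used in modulated-light (time-of-flight) ranging: light that travels to a target and back returns with its on/off pattern delayed, and that delay, measured as a phase shift, maps to a distance. This is a generic illustration, not the Stanford team's actual processing. The function names and the 4 MHz modulation frequency are assumptions chosen only to match the article's "several million times a second."

```python
import math

C = 299_792_458.0  # speed of light in m/s


def distance_from_phase(phase_rad: float, mod_freq_hz: float) -> float:
    """Distance to a target given the phase delay of the modulation.

    Standard relation for modulated-light ranging: the light covers the
    distance twice (out and back), so d = c * phase / (4 * pi * f).
    """
    return C * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Largest distance before the phase wraps past 2*pi: c / (2 * f)."""
    return C / (2.0 * mod_freq_hz)


if __name__ == "__main__":
    f_mod = 4e6  # hypothetical modulation frequency (assumption), in Hz
    quarter_cycle = math.pi / 2  # example phase delay of a quarter cycle

    print(f"Unambiguous range: {unambiguous_range(f_mod):.2f} m")
    print(f"Distance at quarter-cycle delay: "
          f"{distance_from_phase(quarter_cycle, f_mod):.2f} m")
```

A lower modulation frequency extends the range that can be measured without ambiguity, while a higher one gives finer depth resolution for the same phase accuracy, which is one reason a predictable, tunable frequency matters.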

“What’s more, the geometry of the wafers and the electrodes defines the frequency of light modulation, so we can fine-tune the frequency,” Atalar added. “Change the geometry and you change the frequency of modulation … While there are other ways to turn the light on and off, this acoustic approach is preferable because it is extremely energy efficient.”

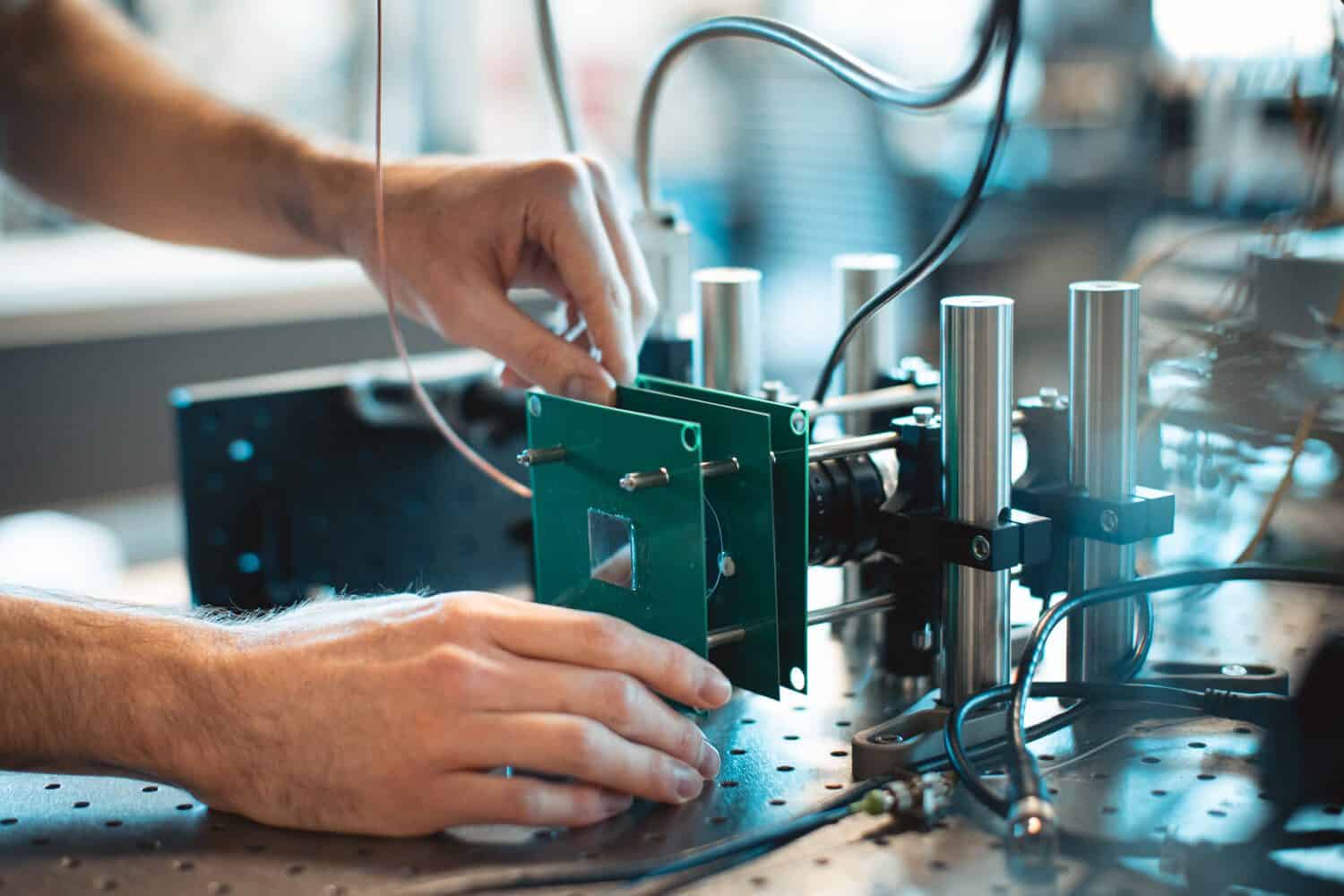

The researchers tested the technology by building a prototype lidar system on a lab bench, using a commercially available digital camera as the receptor. According to the team, the new system was able to produce megapixel-resolution depth maps. They also said that the optical modulator consumed only a small amount of power, and that its consumption has been reduced further, to 10 times lower than what was presented in the paper.

If the technology gets the support it needs, it could open new possibilities for the smartphone market and beyond. It could also revolutionize how we use devices with cameras, including standard professional cameras, drones, tablets, and laptops, by giving them additional capabilities such as capturing more detail in images. With megapixel-resolution lidar, the researchers also say it will be easier for a system to identify targets efficiently at greater range. For instance, in autonomous cars, the improved lidar system could distinguish pedestrians from cyclists at considerable distances, which would help prevent accidents.
