The Free Software Foundation thinks GitHub Copilot should be illegal

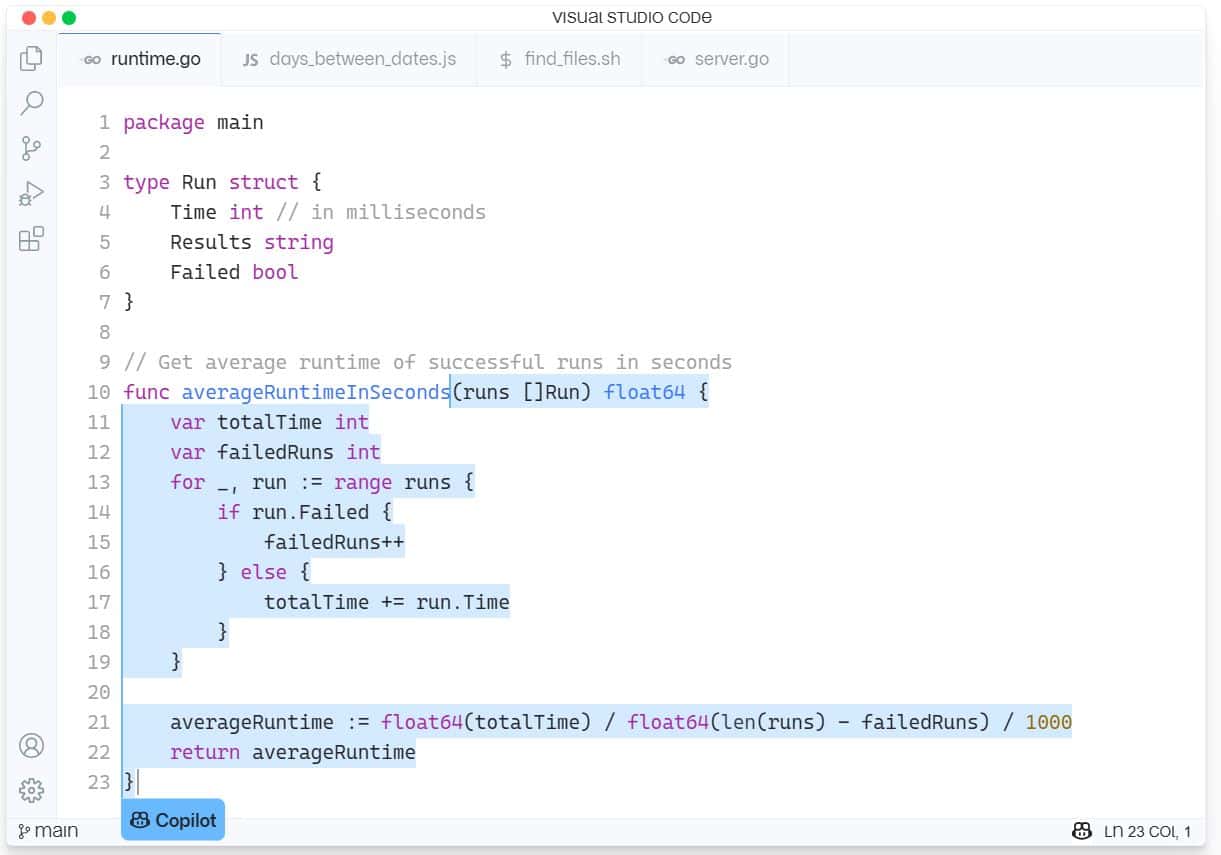

GitHub Copilot is a new AI assistant for software development, available as an extension for Microsoft's Visual Studio Code. It supports a variety of languages and frameworks and makes developers' lives easier by offering suggestions for whole lines or entire functions right inside the editor. GitHub Copilot is powered by OpenAI Codex and was trained on billions of lines of open-source code.

That last point has put a massive bee in the Free Software Foundation's (FSF) bonnet. The FSF calls the tool "unacceptable and unjust, from our perspective."

The free software advocate complains that Copilot requires closed-source software, such as Microsoft's Visual Studio IDE or the Visual Studio Code editor, to run. It also argues that Copilot constitutes a "service as a software substitute," meaning it is a way to gain power over other people's computing.

The FSF also believes that Copilot raises numerous legal questions that have yet to be tested in court.

“Developers want to know if training a neural network on their software can be considered fair use. Others who might want to use Copilot wonder if the code snippets and other elements copied from GitHub-hosted repositories could result in copyright infringement. And even if everything might be legally copacetic, activists wonder if there isn’t something fundamentally unfair about a proprietary software company building a service off their work,” the FSF wrote.

To help answer these questions, the FSF has called for white papers that examine the following issues:

- Is Copilot’s training on public repositories copyright infringement? Fair use?

- How likely is the output of Copilot to generate actionable claims of violations of GPL-licensed works?

- Can developers using Copilot comply with free software licenses like the GPL?

- How can developers ensure that code to which they hold the copyright is protected against violations generated by Copilot?

- If Copilot generates code that gives rise to a violation of a free software licensed work, how can this violation be discovered by the copyright holder?

- Is a trained AI/ML model copyrighted? Who holds the copyright?

- Should organizations like the FSF argue for change in copyright law relevant to these questions?

The FSF will pay $500 for published white papers and may release more funds if further research is warranted.

Those who want to make a submission can send it to [email protected] before August 21. More details about the process are available on FSF.org.

Microsoft has responded to the criticism by saying: "This is a new space, and we are keen to engage in a discussion with developers on these topics and lead the industry in setting appropriate standards for training AI models."

Given that Copilot sometimes lifts whole functions from other open-source apps, do our readers agree with the FSF? Or is the FSF being hypocritical simply because an AI, rather than a human, is repurposing the code? Let us know your view in the comments below.

Via InfoWorld