Tech giants like Microsoft, OpenAI want clearer rules for AI models from the UK

Key notes

- AI giants (including Google and Meta) join the UK’s AI safety tests but want clearer rules.

- Companies agree to fix flaws found, but push for transparency in testing process.

- UK aims to be leader in AI safety, but balances voluntary agreements with future regulations.

- Focus on preventing misuse for cyberattacks and other harmful applications.

Leading artificial intelligence companies are urging the UK government to provide more details about its AI safety testing procedures. This shows the potential limitations of relying solely on voluntary agreements to regulate this rapidly developing technology.

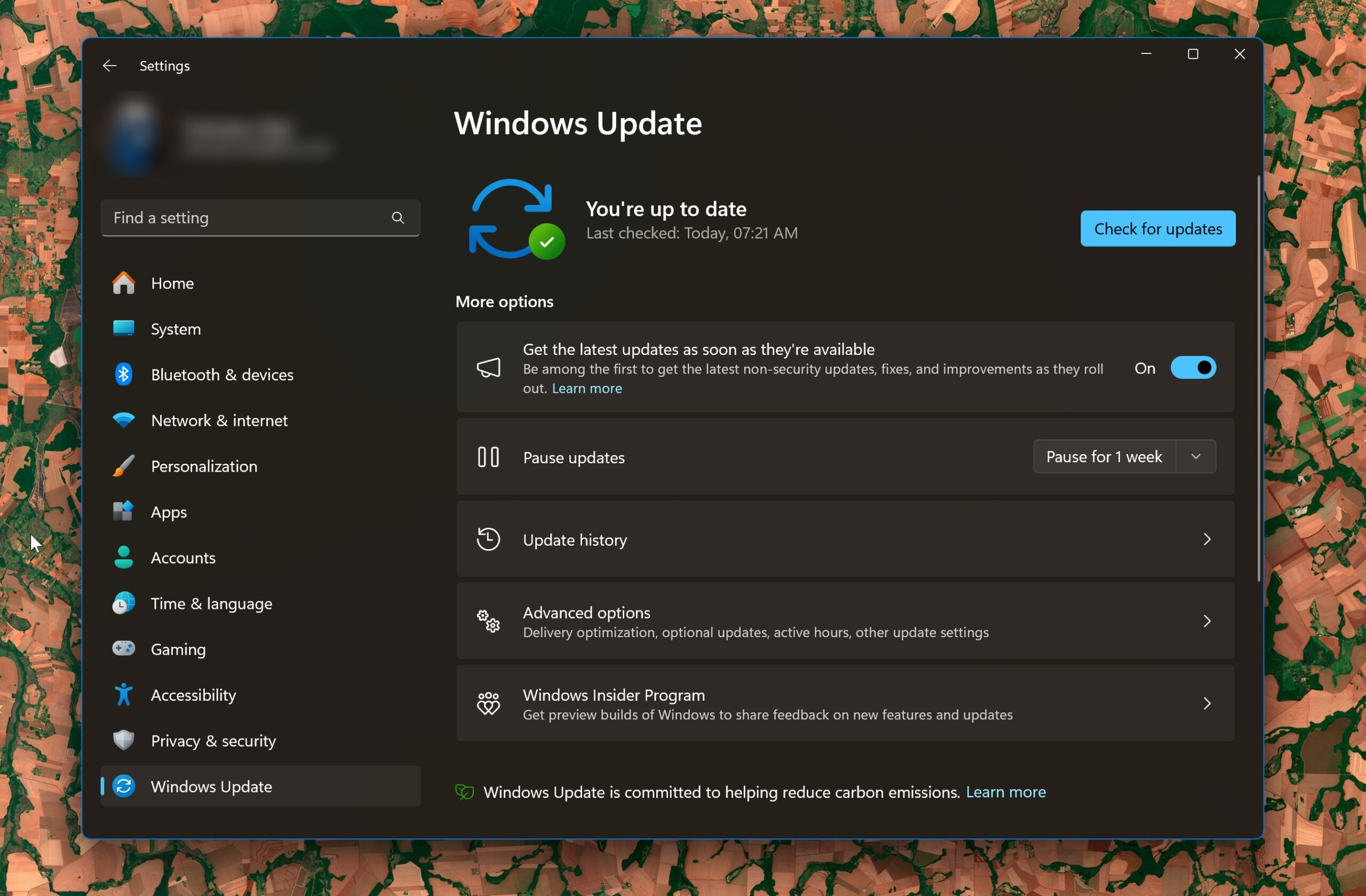

This comes after OpenAI, Google DeepMind, Microsoft, and Meta signed voluntary commitments in November 2023 to open up their latest generative AI models for pre-deployment testing by the newly established AI Safety Institute (AISI). While agreeing to adjust their models based on identified flaws, these companies are now seeking clarity on the specific tests conducted, their duration, and the feedback process in case of risk detection.

Although the companies are not legally bound to act on the test results, the government emphasized that it expects them to address identified risks. This underlines the ongoing debate over whether voluntary agreements can ensure responsible AI development. In response to these concerns, the government outlined plans for “future binding requirements” for leading AI developers to strengthen accountability measures.

The AISI, established by Prime Minister Rishi Sunak, plays a central role in the government’s ambition to position the UK as a leader in tackling potential risks associated with AI advancement. These risks include the misuse of AI for cyberattacks, bioweapon design, and other harmful applications.

According to sources familiar with the process, the AISI has already begun testing existing and unreleased models, including Google’s Gemini Ultra. The institute is focusing on potential misuse risks, particularly in cybersecurity, and is drawing on expertise from the National Cyber Security Centre within GCHQ.

Government contracts reveal investments in capabilities for testing vulnerabilities like “jailbreaking” (prompting AI chatbots to bypass safeguards) and “spear-phishing” (targeted attacks via email). Additionally, efforts are underway to develop “reverse engineering automation” tools for in-depth analysis of models.

“We will share findings with developers as appropriate. However, where risks are found, we would expect them to take any relevant action ahead of launching,” the UK government told the Financial Times.

Google DeepMind acknowledged its collaboration with the AISI, emphasizing its commitment to working together for robust evaluations and establishing best practices for the evolving AI sector. OpenAI and Meta declined to comment.

In simple terms:

Big AI companies like Google and Meta are working with the UK government to test their new AI models for safety before release. The government wants to ensure these models aren’t misused for hacking or creating dangerous weapons.

The companies are happy to help but want more details about the tests, like how long they’ll take and what happens if any problems are found. The government is trying to balance encouraging innovation with keeping people safe.
