OpenAI's ChatGPT patches issue that mistakenly censors "David Mayer" name

There's an online panic about this.


Key notes

- ChatGPT initially blocked the name “David Mayer,” among others, sparking some wild theories.

- OpenAI has since fixed the issue, but the cause remains unclear.

- David Mayer is not the only name ChatGPT was mistakenly censoring.

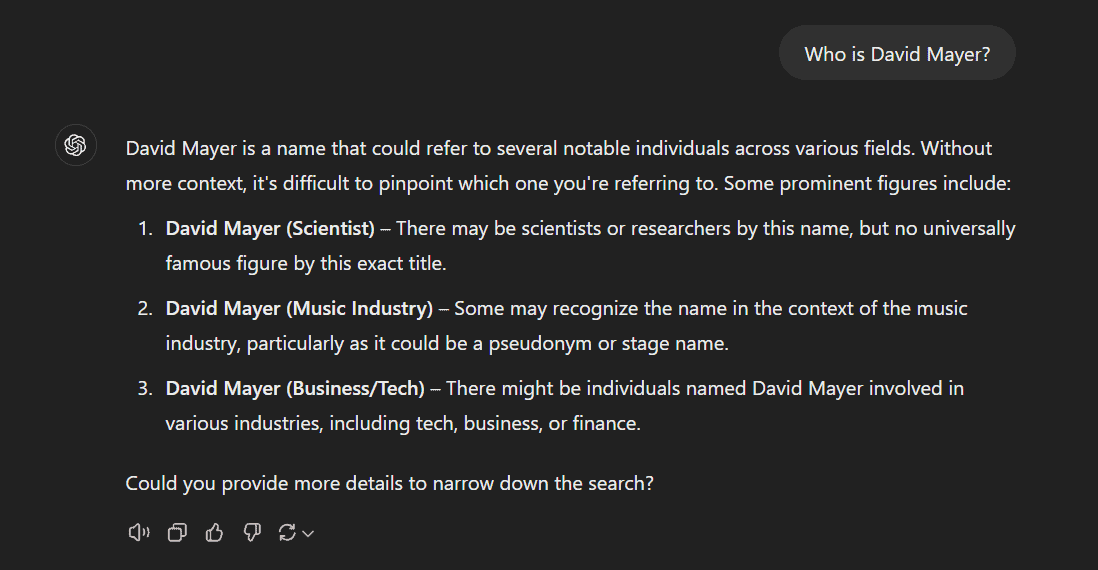

Viral reports online say that OpenAI’s ChatGPT refused to recognize the name “David Mayer.” The AI chatbot greeted some users with an “I’m unable to produce a response” message, prompting a wave of theories.

We put it to the test, and it seems OpenAI has now fixed the issue.

The theories originated with eagle-eyed Reddit users who tried to bypass the block, for example by using different formats or screenshots. They were unsuccessful, which seemed odd, especially with so many conspiracy theories circulating in the AI sphere.

But it turned out that David Mayer isn’t the only name ChatGPT was mistakenly censoring. Another Reddit user found that names like Brian Hood, Jonathan Turley, Jonathan Zittrain, David Faber, and Guido Scorza were also being blocked.

These individuals share a common trait: they were either accused of illegal activity by ChatGPT, have criticized AI, or have exercised their right to be forgotten under GDPR. Oddly enough, we’ve also tried copy-pasting the thread above to ChatGPT, and guess what we’ve stumbled upon?

Whether it’s a case of OpenAI forgetting to patch an issue, or an actual person trying to erase their digital footprint, we still don’t know for sure. It does sound like a more intentional mechanism, like a restriction based on privacy concerns or filtering.

Google’s Gemini, a ChatGPT competitor, has also faced a somewhat similar case. The AI chatbot told a user to “die” and called them “a burden on society” after their conversation about homework took a sudden and disturbing turn.
