Microsoft's new vision-language (VL) system significantly surpasses human performance

2 min. read

Published on

Read our disclosure page to find out how can you help MSPoweruser sustain the editorial team Read more

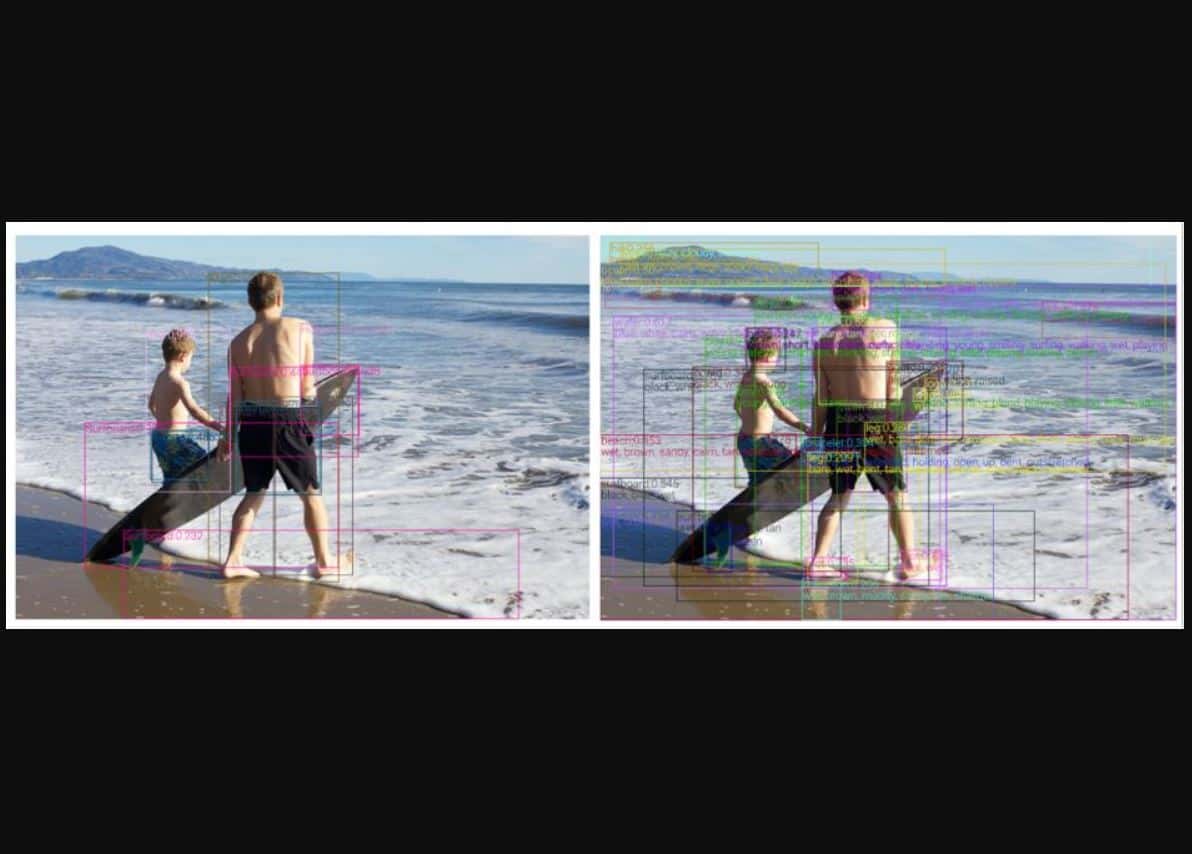

Vision-language (VL) systems allow searching the relevant images for a text query (or vice versa) and describing the content of an image using natural language. In general, a VL system uses an image encoding module and a vision-language fusion module. Microsoft Research recently developed a new object-attribute detection model for image encoding called VinVL (Visual features in Vision-Language).

When VinVL is combined with VL fusion modules such as OSCAR and VIVO, the new Microsoft VL system was able to achieve top position in the most competitive VL leaderboards, including Visual Question Answering (VQA), Microsoft COCO Image Captioning, and Novel Object Captioning (nocaps). Microsoft Research team also highlighted that this new VL system significantly surpasses human performance on the nocaps leaderboard in terms of CIDEr (92.5 vs. 85.3).

VinVL has demonstrated great potential in improving image encoding for VL understanding. Our newly developed image encoding model can benefit a wide range of VL tasks, as illustrated by examples in this paper. Despite the promising results we obtained, such as surpassing human performance on image captioning benchmarks, our model is by no means reaching the human-level intelligence of VL understanding. Interesting directions of future works include: (1) further scale up the object-attribute detection pretraining by leveraging massive image classification/tagging data, and (2) extend the methods of cross-modal VL representation learning to building perception-grounded language models that can ground visual concepts in natural language and vice versa like humans do.

Microsoft VinVL is being integrated into the Azure Cognitive Services, which powers various Microsoft services such as Seeing AI, Image Captioning in Office and LinkedIn, and others. Microsoft Research team will also release the VinVL model and the source code to the public.

Source: Microsoft

User forum

0 messages