Microsoft shows off amazing quality of new "best-in-class depth sensor" for the next generation HoloLens (video)


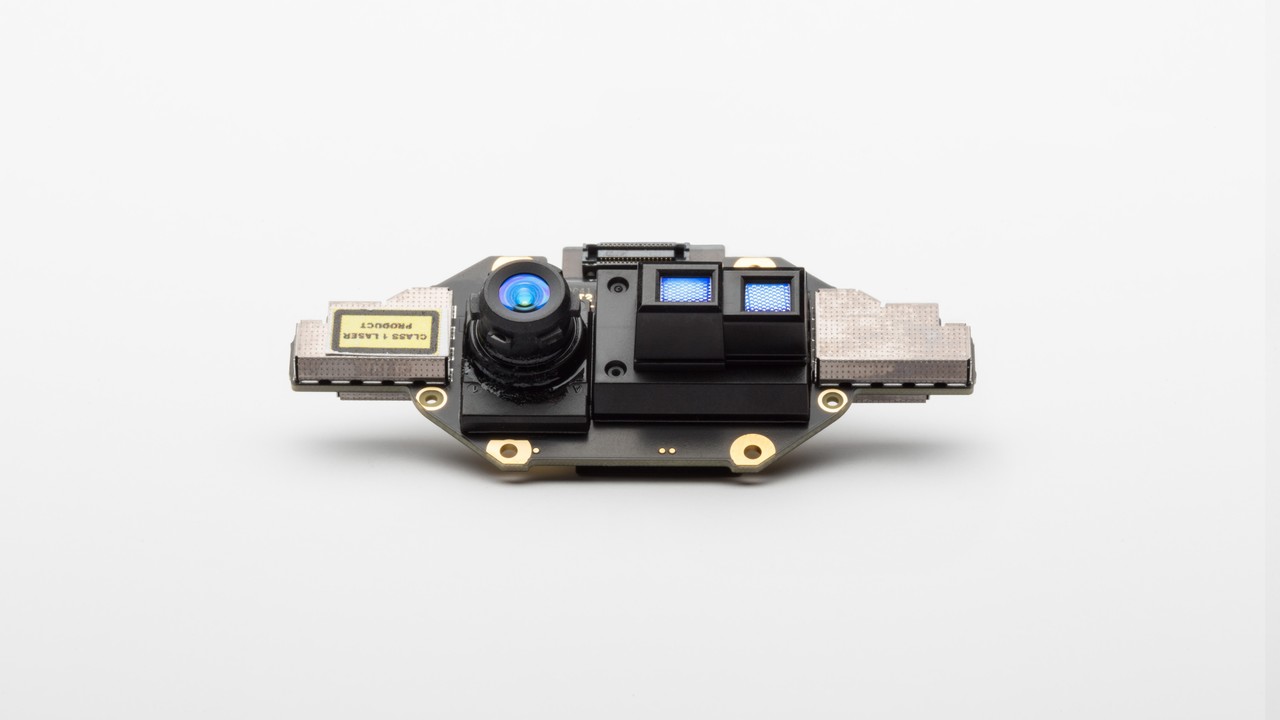

On Day 1 of its Build developer conference, Microsoft showed off Project Kinect for Azure, a package of sensors that includes Microsoft’s next-generation depth camera, with onboard compute designed for AI on the Edge.

This new sensor package will include Microsoft’s breakthrough Time of Flight sensor alongside additional sensors, all in a small, power-efficient form factor. It will make use of Azure AI to significantly improve insights and operations. This can enable fully articulated hand tracking and high-fidelity spatial mapping, allowing for more precise solutions. On LinkedIn, Alex Kipman confirmed that this fourth-generation Kinect sensor will find its way into the next generation of the Microsoft HoloLens.

The sensor features:

- Highest number of pixels (megapixel resolution 1024×1024)

- Highest Figure of Merit (highest modulation frequency and modulation contrast, resulting in low power consumption with overall system power of 225-950 mW)

- Automatic per pixel gain selection enabling large dynamic range allowing near and far objects to be captured cleanly

- Global shutter allowing for improved performance in sunlight

- Multiphase depth calculation method enabling robust accuracy even in the presence of chip, laser, and power supply variation

- Low peak current operation even at high frequency lowers the cost of modules
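For readers unfamiliar with Time of Flight sensing, the references to modulation frequency and multiphase depth calculation above can be illustrated with a minimal sketch of the textbook continuous-wave approach. This is not Microsoft's published method. It assumes a generic four-phase scheme in which each pixel takes four samples of the form `B + A*cos(phi - theta)` at 0, 90, 180 and 270 degrees, and the 100 MHz modulation frequency is a hypothetical value chosen for illustration, not a specification of this sensor. All function names are made up for this example.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def unambiguous_range_m(mod_freq_hz):
    """Largest distance measurable before the phase wraps past 2*pi."""
    return C / (2.0 * mod_freq_hz)


def four_phase_to_phase(a0, a90, a180, a270):
    """Recover the phase shift from four samples.

    Assumes each sample is B + A*cos(phi - theta) for theta in
    {0, 90, 180, 270} degrees, so the differences cancel the offset B.
    """
    return math.atan2(a90 - a270, a0 - a180) % (2.0 * math.pi)


def phase_to_depth_m(phase_rad, mod_freq_hz):
    """Convert a round-trip phase shift into a one-way distance."""
    return C * phase_rad / (4.0 * math.pi * mod_freq_hz)


def simulate_samples(depth_m, mod_freq_hz, amplitude=1.0, offset=2.0):
    """Generate ideal, noise-free samples for a target at depth_m (illustrative)."""
    phi = 4.0 * math.pi * mod_freq_hz * depth_m / C
    return tuple(
        offset + amplitude * math.cos(phi - math.radians(theta))
        for theta in (0, 90, 180, 270)
    )


if __name__ == "__main__":
    freq = 100e6  # hypothetical modulation frequency, not from the article
    samples = simulate_samples(1.0, freq)
    phase = four_phase_to_phase(*samples)
    print("recovered depth (m):", phase_to_depth_m(phase, freq))
    print("unambiguous range (m):", unambiguous_range_m(freq))
```

In this simplified model, a higher modulation frequency maps the same phase error to a smaller distance error, at the cost of a shorter unambiguous range. That is one common reason modulation frequency appears in Time of Flight figures of merit.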

The current version of HoloLens uses the third generation of Kinect depth-sensing technology to place holograms in the real world. The next-generation HoloLens will use Project Kinect for Azure, which integrates with Microsoft’s intelligent cloud and intelligent edge platform.

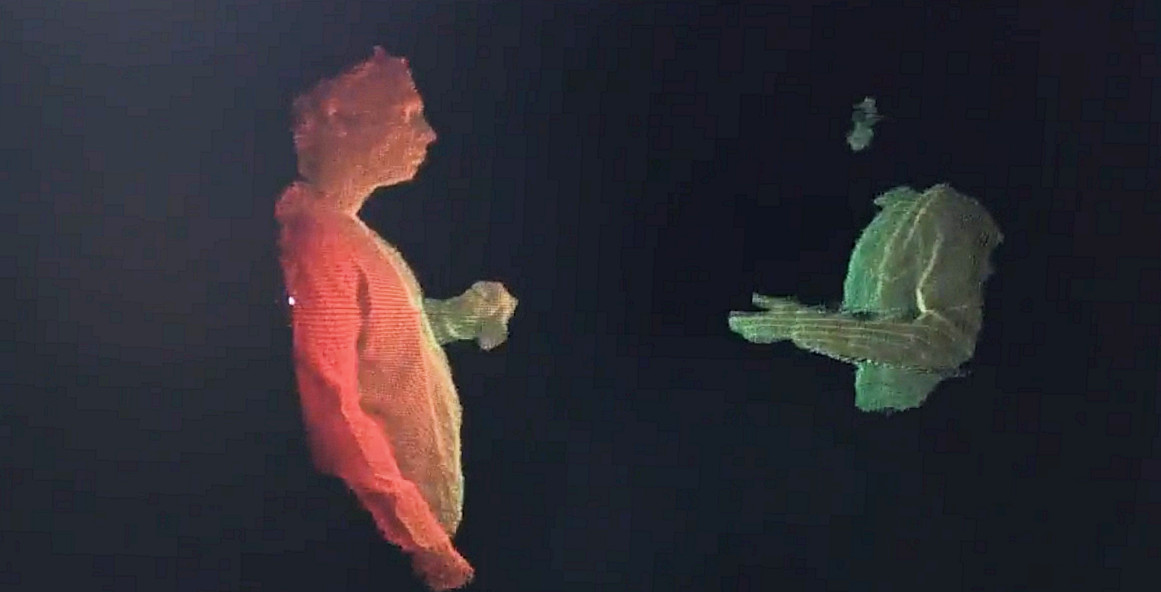

At its Research Faculty Summit 2018, Microsoft showed off the technology in action, demonstrating the dense and stable point cloud generated by the low-power sensor.
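As background on what a point cloud is, a depth image is commonly turned into 3D points by back-projecting each pixel through a pinhole camera model. The sketch below shows that generic technique only. The intrinsics `fx`, `fy`, `cx` and `cy` are hypothetical placeholder values, and nothing here reflects the actual calibration or processing pipeline of Microsoft's sensor.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image (rows of depths in metres) into 3D points.

    fx, fy are focal lengths in pixels and cx, cy the principal point;
    all four are assumed, illustrative camera intrinsics.
    Pixels with non-positive depth are treated as invalid and skipped.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # Tiny 2x2 depth image; 0.0 marks a pixel with no valid measurement.
    depth = [
        [0.0, 2.0],
        [1.0, 0.0],
    ]
    for point in depth_to_points(depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5):
        print(point)
```

A 1024×1024 depth frame would yield up to roughly one million such points per capture, which is why a higher pixel count produces a denser cloud.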

Microsoft has already said it will also use a next-generation Holographic Processing Unit that includes AI capabilities, enabling on-device deep learning. Running deep learning on depth images can dramatically reduce the size of the networks needed for the same quality of outcome. This results in AI algorithms that are much cheaper to deploy and a more intelligent edge.

“Project Kinect for Azure unlocks countless new opportunities to take advantage of Machine Learning, Cognitive Services and IoT Edge. We envision that Project Kinect for Azure will result in new AI solutions from Microsoft and our ecosystem of partners, built on the growing range of sensors integrating with Azure AI services. I cannot wait to see how developers leverage it to create practical, intelligent and fun solutions that were not previously possible across a raft of industries and scenarios,” wrote Alex Kipman in his blog post.

Microsoft has still not revealed when the next generation of HoloLens will be coming, but with the device having passed its second birthday it is believed to be sooner rather than later.

Via WalkingCat
