Microsoft Research finds a way to enable natural interaction between humans and robots

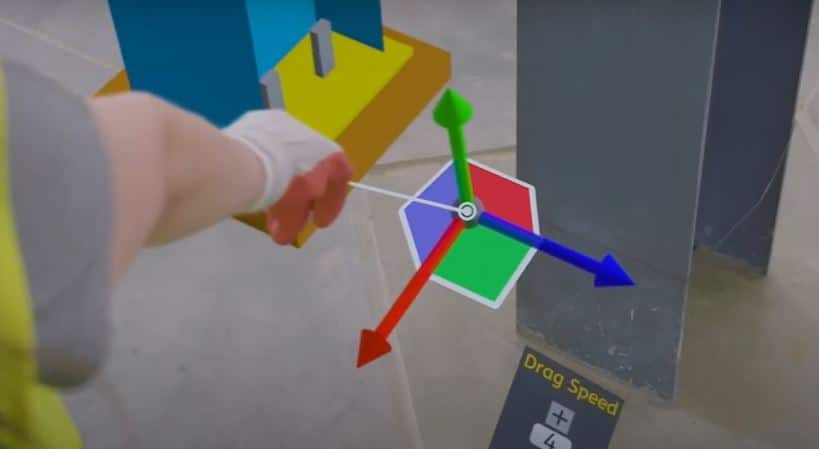

Microsoft Research today revealed a new project that will enable more natural interaction between humans and robots through Mixed Reality. Azure Spatial Anchors already supports co-localizing multiple HoloLens and smartphone devices in the same space using a shared coordinate system. With this project, Microsoft Research has extended Azure Spatial Anchors to support robots equipped with cameras.

This allows humans and robots sharing the same space to interact naturally: humans can see the plan and intention of the robot, while the robot can interpret commands given from the person’s perspective.
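To make the idea of a shared coordinate system concrete, here is a minimal sketch of how a point indicated by a person could be re-expressed in a robot's frame once both devices have located the same anchor. It is not the Azure Spatial Anchors API: the function names are hypothetical, and poses are simplified to 2D `(x, y, theta)` for brevity, whereas real devices work with full 3D poses.

```python
import math

# Assumption: each device reports the anchor's pose in its own frame as
# (x, y, theta), with theta in radians. All names here are illustrative only.

def to_anchor_frame(point, anchor_pose):
    """Map a point from a device's frame into the shared anchor frame."""
    ax, ay, theta = anchor_pose
    dx, dy = point[0] - ax, point[1] - ay
    c, s = math.cos(theta), math.sin(theta)
    # Undo the translation, then apply the inverse rotation R(-theta).
    return (c * dx + s * dy, -s * dx + c * dy)

def from_anchor_frame(point, anchor_pose):
    """Map a point from the shared anchor frame into a device's frame."""
    ax, ay, theta = anchor_pose
    c, s = math.cos(theta), math.sin(theta)
    # Rotate by theta, then translate by the anchor's position.
    return (ax + c * point[0] - s * point[1],
            ay + s * point[0] + c * point[1])

def phone_to_robot(point_in_phone, anchor_in_phone, anchor_in_robot):
    """Re-express a point seen by the phone in the robot's frame."""
    in_anchor = to_anchor_frame(point_in_phone, anchor_in_phone)
    return from_anchor_frame(in_anchor, anchor_in_robot)

# Example: the phone sees the anchor 1 m ahead; the robot sees it 2 m to
# its side, rotated by 90 degrees.
target = phone_to_robot((2.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.0, math.pi / 2))
```

Because both devices express positions relative to the same anchor, neither needs to know the other's pose directly; the anchor acts as the common reference.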

Microsoft has created a ROS wrapper for the Azure Spatial Anchors Linux SDK. It lets robots (and other devices equipped with vision-based sensors and a pose estimation system) create and query Azure Spatial Anchors, so they can co-localize with AR-enabled phones and HoloLens devices. The project is available on GitHub.

Source: Microsoft