Microsoft Orca-Math is a small language model that can outperform GPT-3.5 and Gemini Pro in solving math problems

Key notes

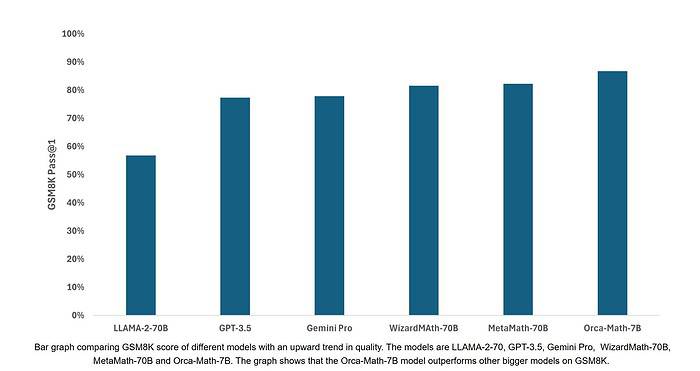

- According to benchmarks, Orca-Math achieved 86.81% on GSM8k pass@1.

- This score beats Meta’s Llama-2-70B, Google’s Gemini Pro, OpenAI’s GPT-3.5 and even math-specific models like MetaMath-70B and WizardMath-70B.

Microsoft Research today announced Orca-Math, a small language model (SLM) that can outperform much larger models like Gemini Pro and GPT-3.5 in solving math problems. Orca-Math shows how specialized SLMs can excel in a specific domain. Note that Microsoft did not build this model from scratch; it was created by fine-tuning the Mistral 7B model.

According to benchmarks, Orca-Math achieved 86.81% on GSM8k pass@1. This score beats Meta’s Llama-2-70B, Google’s Gemini Pro, OpenAI’s GPT-3.5 and even math-specific models like MetaMath-70B and WizardMath-70B. For comparison, Mistral-7B, the base model on which Orca-Math was built, achieved only 37.83% on GSM8k.
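To make the metric concrete, here is a minimal sketch of how a pass@1 score can be computed: the model gets one attempt per problem, and the score is the fraction of problems answered correctly. The function name `pass_at_1` and the toy answers are hypothetical illustrations, not Microsoft's evaluation code, and the exact-match comparison is an assumed simplification.

```python
def pass_at_1(predictions, references):
    """Fraction of problems whose single sampled answer matches the reference.

    Assumes answers are compared as exact strings after trimming whitespace;
    a real evaluation harness may normalize numbers differently.
    """
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        raise ValueError("need at least one problem")
    correct = sum(p.strip() == r.strip() for p, r in zip(predictions, references))
    return correct / len(references)


# Hypothetical toy data: one model answer per problem, plus the expected answer.
preds = ["18", "3", "70000", "540"]
refs = ["18", "3", "70000", "20"]
score = pass_at_1(preds, refs)
print(f"pass@1 = {score:.2%}")

# Gap between the two scores reported in the article, in percentage points.
gain = round(86.81 - 37.83, 2)
print(f"Orca-Math vs. Mistral-7B base: +{gain} points")
```

On the figures quoted above, fine-tuning lifted the score by 48.98 percentage points over the base model.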

Microsoft Research achieved this performance using the following techniques:

- High-Quality Synthetic Data: Orca-Math was trained on a dataset of 200,000 math problems, created using a multi-agent setup (AutoGen). While this dataset is smaller than some other math datasets, its size allowed for faster and more cost-effective training.

- Iterative Learning Process: In addition to traditional supervised fine-tuning, Orca-Math underwent an iterative learning process. It practiced solving problems and continuously improved based on feedback from a “teacher” signal.
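The practice-and-feedback idea in the second point can be sketched roughly as follows. This is only an illustration of one round: the article does not describe the actual mechanism, so the `collect_feedback` function, the toy `student` and `teacher` callables, and the number of attempts per problem are all assumptions, and the step that would update the model from the collected examples is left out.

```python
from typing import Callable, List, Tuple

Problem = Tuple[int, int]  # hypothetical toy problem: add two integers


def collect_feedback(
    problems: List[Problem],
    student: Callable[[Problem, int], int],
    teacher: Callable[[Problem, int], bool],
    samples_per_problem: int = 4,
):
    """Let the student attempt each problem several times and sort the
    attempts by the teacher's verdict (assumed to be a simple accept/reject)."""
    positives, negatives = [], []
    for problem in problems:
        for attempt in range(samples_per_problem):
            solution = student(problem, attempt)
            if teacher(problem, solution):
                positives.append((problem, solution))
            else:
                negatives.append((problem, solution))
    return positives, negatives


def toy_student(problem: Problem, attempt: int) -> int:
    # Stand-in for a model: right on even attempts, off by one on odd ones.
    a, b = problem
    return a + b + (attempt % 2)


def toy_teacher(problem: Problem, solution: int) -> bool:
    # Stand-in for the "teacher" signal: checks the answer exactly.
    a, b = problem
    return solution == a + b


positives, negatives = collect_feedback([(2, 3), (10, 7)], toy_student, toy_teacher)
print(len(positives), "accepted attempts,", len(negatives), "rejected attempts")
```

In a real pipeline the accepted and rejected attempts would feed a further training round, and the loop would repeat with the improved model.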

“Our findings show that smaller models are valuable in specialized settings where they can match the performance of much larger models but with a limited scope. By training Orca-Math on a small dataset of 200,000 math problems, we have achieved performance levels that rival or surpass those of much larger models,” wrote the Microsoft Research team.
