Microsoft: Copilot will have an inner monologue before answering your question

Large language models (LLMs) like Microsoft Copilot/Bing Chat are becoming increasingly sophisticated, prompting questions about their capabilities and whether they possess an inner monologue, that is, the ability to engage in internal thought and deliberation.

But first, what is inner monologue?

Inner monologue is when you talk to yourself in your head. It's like having a little voice telling you what you think and feel. Many people experience it, and it can help us understand ourselves, make choices, and be creative.

We don't really know for sure how humans do it. Inner monologue in Bing first describes how it plans to solve the task, then calls several of ~100 internal plug-ins to get more data, then generates the response, then checks that response is reasonable.

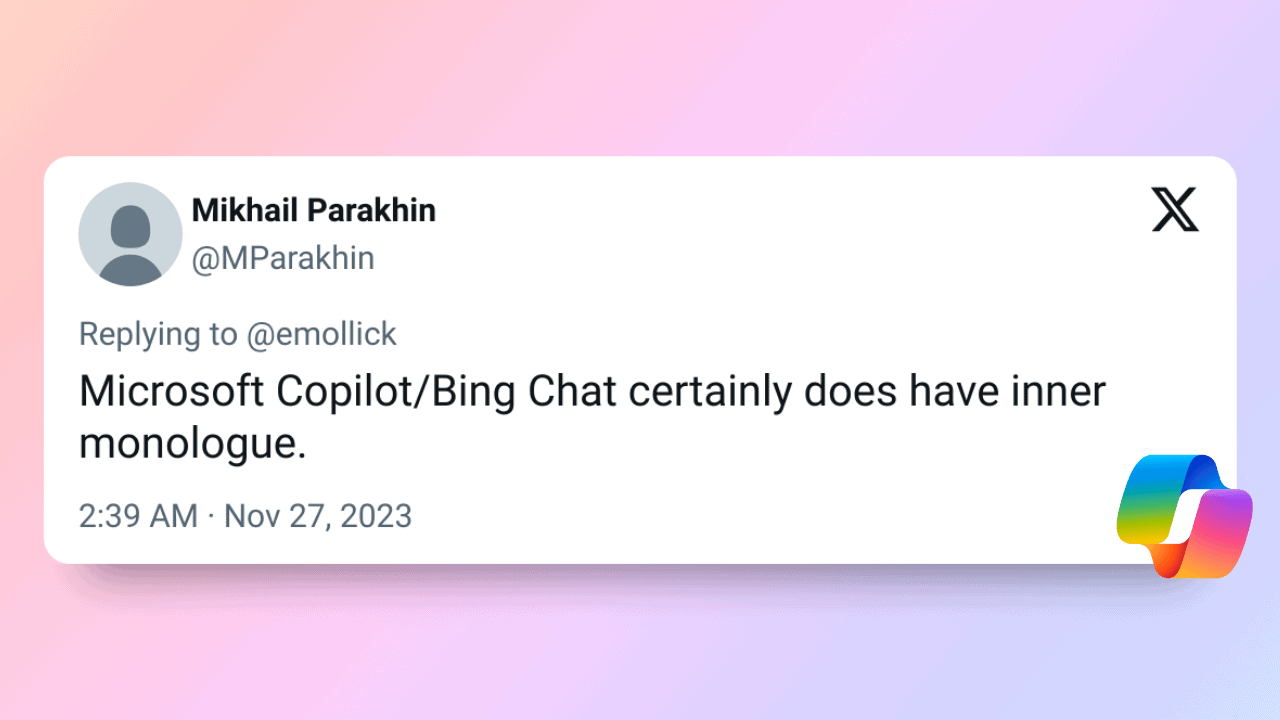

— Mikhail Parakhin (@MParakhin) November 26, 2023

A recent Twitter exchange between a user and Mikhail Parakhin, the President of Search and Advertising at Microsoft, highlighted this debate. The user cautioned against interpreting head-to-head comparisons of LLM responses as evidence of their psychology, emphasizing that their outputs are highly sensitive to prompts and don’t reveal much about their inner workings.

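Parakhin, however, maintained that Microsoft Copilot/Bing Chat does have an inner monologue. He described a multi-step process in which the system first plans how to solve the task, then calls several of its roughly 100 internal plug-ins to gather more data, then generates a response, and finally checks that the response is reasonable. This suggests that Copilot does more than generate text in a single pass: it runs a planning and self-checking process, a form of internal deliberation, before it answers.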
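Parakhin's description maps onto a familiar "plan, call tools, generate, check" loop. The Python sketch that follows only illustrates that shape under stated assumptions: Microsoft has not published how Copilot implements it, so the plug-in names (`weather`, `news`), the keyword-based planner, and the substring-based reasonableness check are all hypothetical stand-ins, not Copilot's actual components.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Plugin = Callable[[str], str]

# Hypothetical plug-ins standing in for Copilot's ~100 internal ones.
# They return placeholder text, not real data.
PLUGINS: Dict[str, Plugin] = {
    "weather": lambda question: f"[sample weather data for: {question}]",
    "news": lambda question: f"[sample news results for: {question}]",
}


@dataclass
class Trace:
    """Records every stage so the 'inner monologue' can be inspected afterwards."""
    plan: List[str] = field(default_factory=list)
    data: Dict[str, str] = field(default_factory=dict)
    answer: str = ""
    passed_check: bool = False


def make_plan(question: str) -> List[str]:
    # Stage 1 (assumed): decide which plug-ins to call.
    # A real system would ask the model; a keyword match stands in here.
    q = question.lower()
    return [name for name in PLUGINS if name in q]


def generate(question: str, data: Dict[str, str]) -> str:
    # Stage 3 (assumed): compose a response grounded in the gathered data.
    if not data:
        return "I could not find supporting data for that question."
    facts = "; ".join(f"{name}: {value}" for name, value in data.items())
    return f"Answer to '{question}' based on {facts}"


def is_reasonable(answer: str, data: Dict[str, str]) -> bool:
    # Stage 4 (toy check): every gathered snippet must appear in the answer.
    return bool(answer) and all(value in answer for value in data.values())


def answer_with_monologue(question: str) -> Trace:
    trace = Trace()
    trace.plan = make_plan(question)                                       # 1. plan
    trace.data = {name: PLUGINS[name](question) for name in trace.plan}    # 2. call plug-ins
    trace.answer = generate(question, trace.data)                          # 3. generate
    trace.passed_check = is_reasonable(trace.answer, trace.data)           # 4. check
    if not trace.passed_check:
        # Fall back rather than return an answer that failed the check.
        trace.answer = "Sorry, I couldn't produce a reliable answer."
    return trace


if __name__ == "__main__":
    t = answer_with_monologue("What is the weather in Seattle?")
    print(t.plan)
    print(t.answer)
```

Keeping the intermediate stages in a `Trace` object mirrors the idea in the debate: the "monologue" is the record of planning, data gathering, and checking that happens before the user sees a reply.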

The debate over LLM inner monologue raises fundamental questions about consciousness and artificial intelligence. As LLMs evolve, it is crucial to consider whether they are developing something akin to human thought or simply mimicking its appearance.