You might have to wait a little longer to get the image upload option for Bing's multimodal feature



OpenAI has officially released GPT-4, and Microsoft revealed that the model was “customized for search.” Part of this revelation is the model’s multimodal capability, which allows it to process images. However, this feature is still limited, even on Bing. What’s more, the option to upload pictures to take advantage of it might still be some way off, as one of Microsoft’s employees hinted.

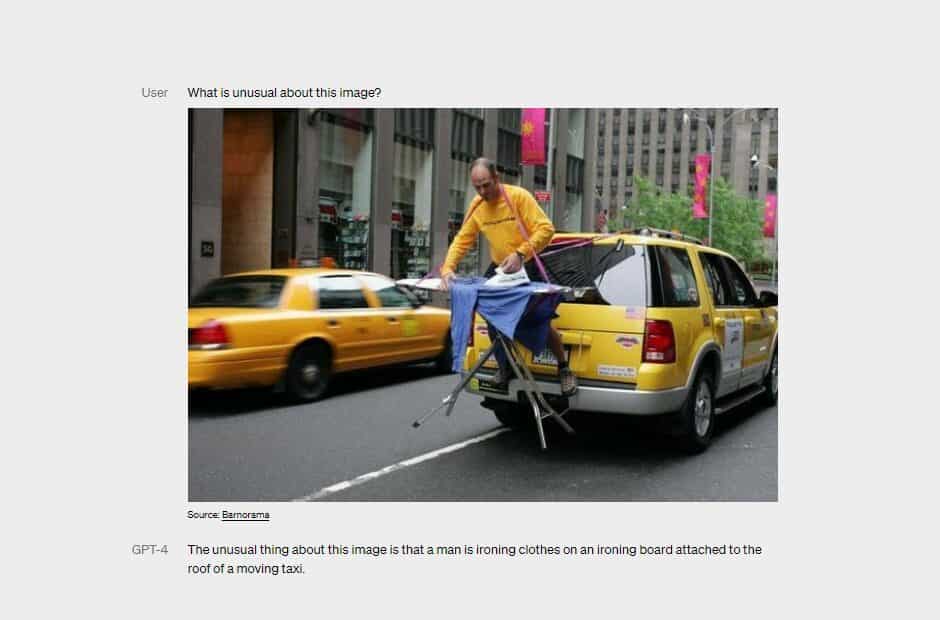

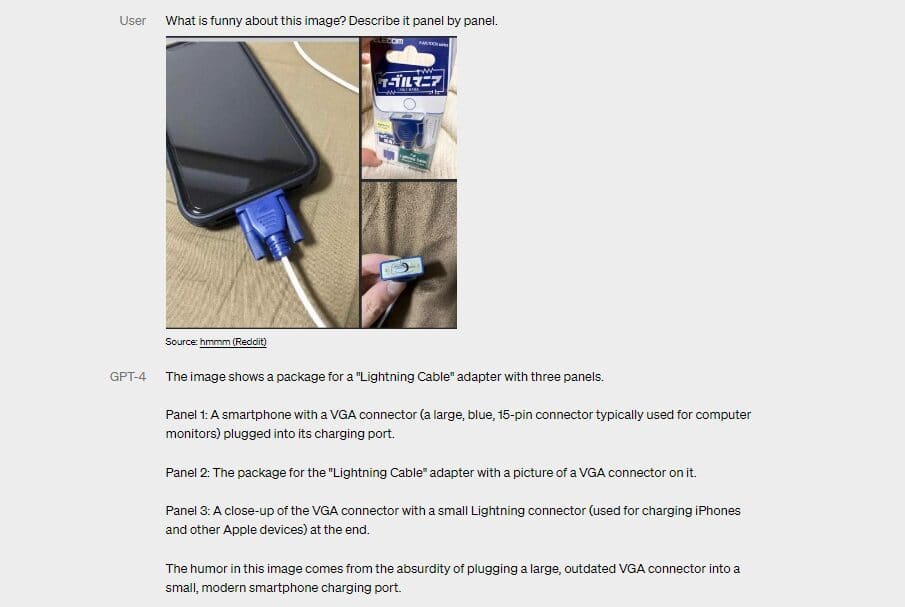

Multimodality is the biggest highlight of OpenAI’s latest model. While GPT-4 is already accessible to ChatGPT Plus subscribers, the image input feature is still being tested and is not yet available to everyone. Nonetheless, OpenAI showcased in a recent blog post how multimodality would work in GPT-4, which can describe and interpret uploaded images.

Wow Bing can now actually describe images, now that it knows that its using GPT-4!

by u/BroskiPlaysYT in bing

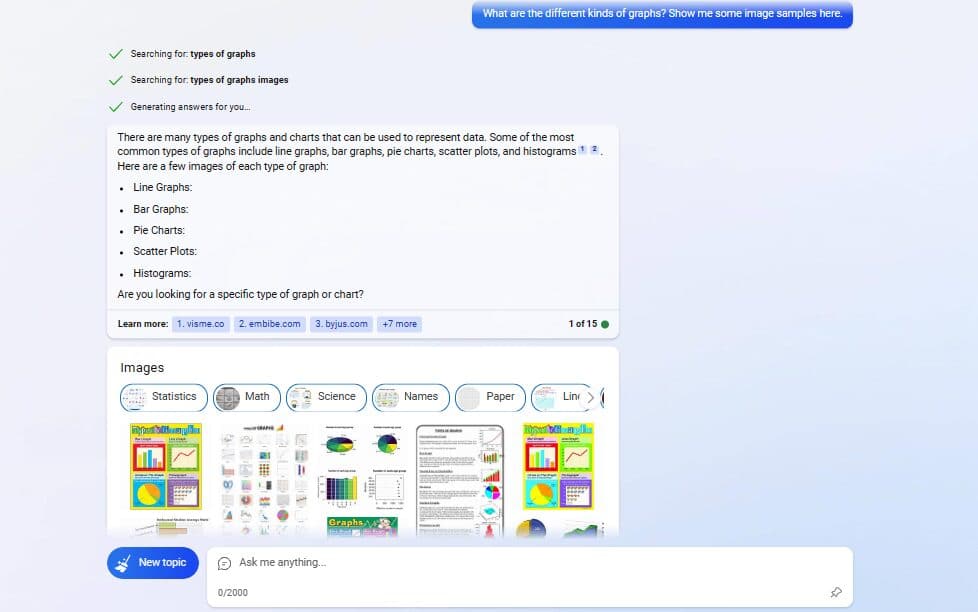

This multimodal capability now appears to be available on the new Bing, though not fully. To start, users can ask Bing Chat to describe images using image links taken from the web, and it will generate a response. Additionally, the chatbot will now include images in its responses, especially if you ask for them.

On the other hand, the option to directly upload a picture for Bing to describe or analyze is still unavailable. A Twitter user recently asked Mikhail Parakhin, Microsoft’s head of Advertising and Web Services, about the arrival of this option, and he suggested that it is not the company’s priority for now.

It is much more expensive, we need to roll out the current functionality wide first. It's all about bringing in more GPUs.

— Mikhail Parakhin (@MParakhin) March 14, 2023

Parakhin noted that making this possible requires “more GPUs” and that it is “much more expensive.” In a recent report, Microsoft revealed that it had already spent hundreds of millions of dollars to build a supercomputer for ChatGPT by linking thousands of Nvidia GPUs on its Azure cloud computing platform. If Parakhin’s words are taken at face value, Microsoft may need to spend more to fully bring the multimodal feature to Bing. Based on what he said, this is possible, just not today.
