Google VLOGGER AI brings photos to life with lifelike talking avatars


Key notes

- Google AI creates lifelike talking avatars from single photos.

- VLOGGER uses diffusion models to animate photos realistically.

- Potential applications include VR avatars and video dubbing.

Google researchers have developed a new AI system called VLOGGER that can generate realistic videos of people speaking, gesturing, and moving from just a single still photo.

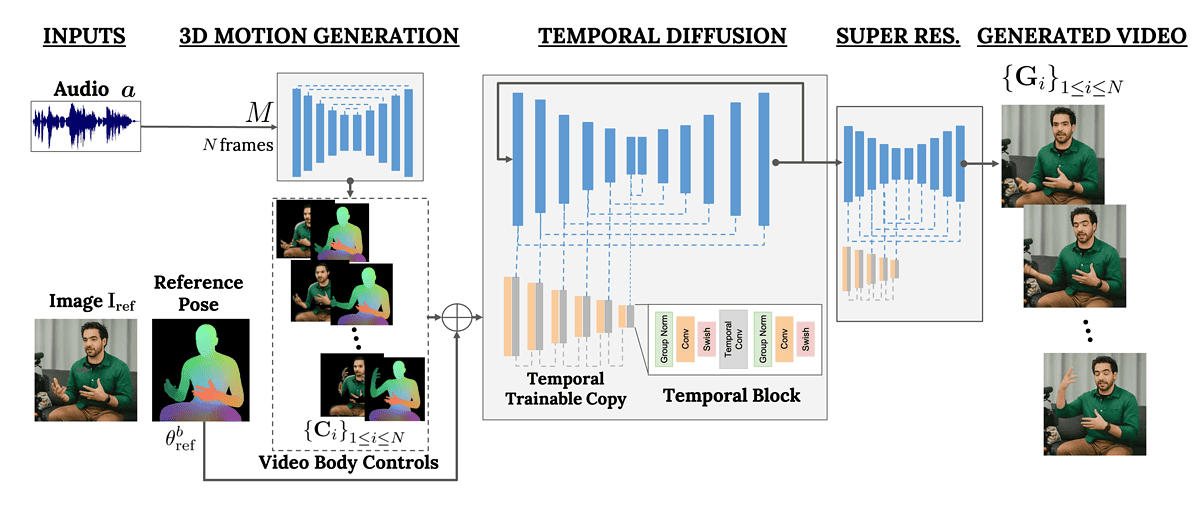

This technology, described in a research paper titled “VLOGGER: Multimodal Diffusion for Embodied Avatar Synthesis,” leverages diffusion models, a class of machine learning models that excel at generating images from text descriptions. By extending this approach to video and training it on a massive dataset, VLOGGER can animate photos in a highly convincing way.

“In contrast to previous work, our method does not require training for each person, does not rely on face detection and cropping, generates the complete image (not just the face or the lips), and considers a broad spectrum of scenarios (e.g. visible torso or diverse subject identities) that are critical to correctly synthesize humans who communicate,” the authors wrote.

VLOGGER’s capabilities include automatically dubbing videos in different languages, editing videos, and even creating full videos from a single image.

Researchers claim VLOGGER outperforms other methods in image quality and realism. As this technology advances, the line between real and artificial videos may blur. VLOGGER offers a glimpse into the future of AI.

However, there are concerns about potential misuse. As VLOGGER's abilities improve, it also becomes easier to create deepfakes (videos that replace one person's likeness with another's), as happened with Taylor Swift.

You can view the examples here.
