Are Driverless Cars Really Accurate? Duke University Researchers Say They Can Be Fooled

Driverless cars promise comfort and safety for drivers and passengers, but a finding from researchers at Duke University may complicate that promise. According to the team, attackers can use a strategy that fools an autonomous vehicle’s sensors (which combine 2D data from cameras with 3D data from LiDAR) into perceiving nearby objects as closer or farther away than they really are. This could cause serious problems and significant damage, especially in military situations where a single vehicle can be a valuable target. The researchers also underscored that hackers could find a way to attack multiple vehicles at once.

“Our goal is to understand the limitations of existing systems so that we can protect against attacks,” said Miroslav Pajic, Dickinson Family Associate Professor of Electrical and Computer Engineering at Duke. “This research shows how adding just a few data points in the 3D point cloud, ahead or behind of where an object actually is, can confuse these systems into making dangerous decisions.”

According to the researchers, the attack begins when a laser gun is fired at a vehicle’s LiDAR sensor, adding false data points that distort the car’s perception. According to Pajic, the system can spot this attack if the data points differ greatly from what the car’s camera sees. However, the Duke research shows that the system can be deceived when the 3D LiDAR data points are precisely placed within a certain area of the camera’s 2D field of view.

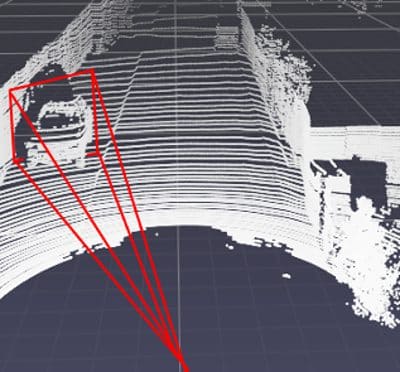

This creates an area that is vulnerable to attack. The area is shaped like a frustum stretching out in front of the camera lens, that is, a 3D pyramid with its tip sliced off.
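To make the geometry concrete, here is a minimal sketch of why points inside the frustum are hard to tell apart from a camera's point of view. It assumes an ideal pinhole camera with no lens distortion. The class `PinholeCamera`, the function `in_frustum`, the camera parameters, and the bounding box are all hypothetical values chosen for illustration and do not come from the Duke study.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Point3D = Tuple[float, float, float]       # (x right, y down, z forward), metres
Box2D = Tuple[float, float, float, float]  # (u_min, v_min, u_max, v_max), pixels


@dataclass
class PinholeCamera:
    """Hypothetical ideal pinhole camera; intrinsics are in pixels."""
    fx: float
    fy: float
    cx: float
    cy: float

    def project(self, point: Point3D) -> Optional[Tuple[float, float]]:
        """Project a 3D point to pixel coordinates, or None if behind the lens."""
        x, y, z = point
        if z <= 0:
            return None
        return (self.fx * x / z + self.cx, self.fy * y / z + self.cy)


def in_frustum(cam: PinholeCamera, box: Box2D, point: Point3D,
               near: float = 1.0, far: float = 100.0) -> bool:
    """True if the point lies between the near and far planes and
    projects inside the 2D bounding box the camera sees."""
    u_min, v_min, u_max, v_max = box
    if not (near <= point[2] <= far):
        return False
    uv = cam.project(point)
    if uv is None:
        return False
    u, v = uv
    return u_min <= u <= u_max and v_min <= v <= v_max


# Assumed setup: the camera detects a vehicle about 20 m ahead inside this box.
cam = PinholeCamera(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
vehicle_box = (590.0, 320.0, 690.0, 400.0)

closer = in_frustum(cam, vehicle_box, (0.0, 0.0, 10.0))    # fake point in front
farther = in_frustum(cam, vehicle_box, (0.0, 0.0, 40.0))   # fake point behind
sideways = in_frustum(cam, vehicle_box, (5.0, 0.0, 20.0))  # fake point off to the side
print(closer, farther, sideways)  # True True False
```

In this toy setup, the fake points placed 10 m and 40 m ahead both project to the same pixel as the real vehicle, so a check that only compares LiDAR points with the camera's 2D view cannot reject them. The point shifted sideways falls outside the box and would stand out.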

“This so-called frustum attack can fool adaptive cruise control into thinking a vehicle is slowing down or speeding up,” Pajic said. “And by the time the system can figure out there’s an issue, there will be no way to avoid hitting the car without aggressive maneuvers that could create even more problems.”

Fortunately, Pajic and his team have proposed a way to reduce the risk: added redundancy, such as stereo cameras with overlapping fields of view. According to the team, these sensors work together to calculate distances properly and to determine the error between the LiDAR data and the camera’s perception.

“Stereo cameras are more likely to be a reliable consistency check, though no software has been sufficiently validated for how to determine if the LIDAR/stereo camera data are consistent or what to do if it is found they are inconsistent,” said Spencer Hallyburton, lead author of the study and a Ph.D. candidate in Pajic’s Cyber-Physical Systems Lab. “Also, perfectly securing the entire vehicle would require multiple sets of stereo cameras around its entire body to provide 100% coverage.”
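As a rough illustration of the kind of consistency check described here, the sketch below uses the standard stereo relation (depth equals focal length times baseline divided by disparity) and compares the result with a LiDAR range. The function names, the 10% tolerance, and the sample numbers are assumptions made for this example. As Hallyburton notes, no validated method for this check exists yet, so this should not be read as the team's approach.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in metres from a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def is_consistent(lidar_range_m: float, stereo_range_m: float,
                  rel_tol: float = 0.1) -> bool:
    """True if the LiDAR range is within rel_tol of the stereo estimate.
    The 10% default tolerance is an arbitrary, hypothetical choice."""
    return abs(lidar_range_m - stereo_range_m) <= rel_tol * stereo_range_m


# Assumed values: 1000 px focal length, 0.5 m baseline, 25 px disparity.
stereo_range = stereo_depth(focal_px=1000.0, baseline_m=0.5, disparity_px=25.0)

honest = is_consistent(lidar_range_m=20.5, stereo_range_m=stereo_range)
spoofed = is_consistent(lidar_range_m=12.0, stereo_range_m=stereo_range)
print(stereo_range, honest, spoofed)  # 20.0 True False
```

Unlike a single camera, the stereo pair produces its own distance estimate, so a spoofed LiDAR range that sits inside the frustum but 8 m too close no longer agrees with it. What the vehicle should do after detecting such a mismatch is left open here, as it is in the quote above.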

Pajic also suggested creating a system that lets cars near each other share data. The research and the team’s suggestions will be presented at the 2022 USENIX Security Symposium, which runs from August 10 to 12.
