Apple's latest parental control feature will block children from sending or receiving sensitive images


Apple is using its on-device neural engine for more than recognising your voice.

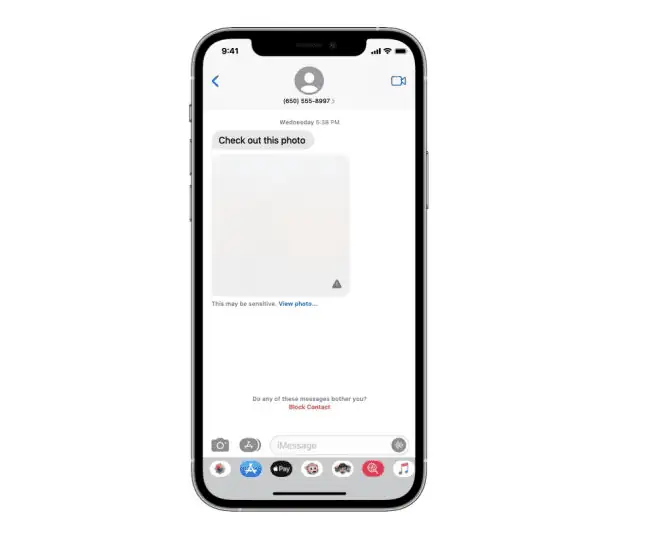

The company has revealed that, as part of its parental control features, iPhones will soon scan images sent or received in Messages to detect nudity, and will block and blur any that are flagged. Children will still have the option of viewing the images, but if they do, their parents will be informed. Similarly, if they try to send a possibly explicit image, they will be warned, and if they go ahead, a copy will be sent to the parent.

Given how often women are on the receiving end of unwanted sexual images, it is likely a feature we all need. Third-party tools are usually unable to access Apple’s iMessage service to address the issue, which makes Apple’s effort a welcome one.

Privacy advocates, however, are concerned that the feature could be expanded to police users in other ways, for example if Apple were to work with oppressive governments to monitor dissidents.

Overall, however, the feature appears to be a real win for parents, and will hopefully keep children innocent for a bit longer.

The feature is coming in an update later this year for accounts set up as families in iCloud, on iOS 15, iPadOS 15 and macOS Monterey.

via Vice
