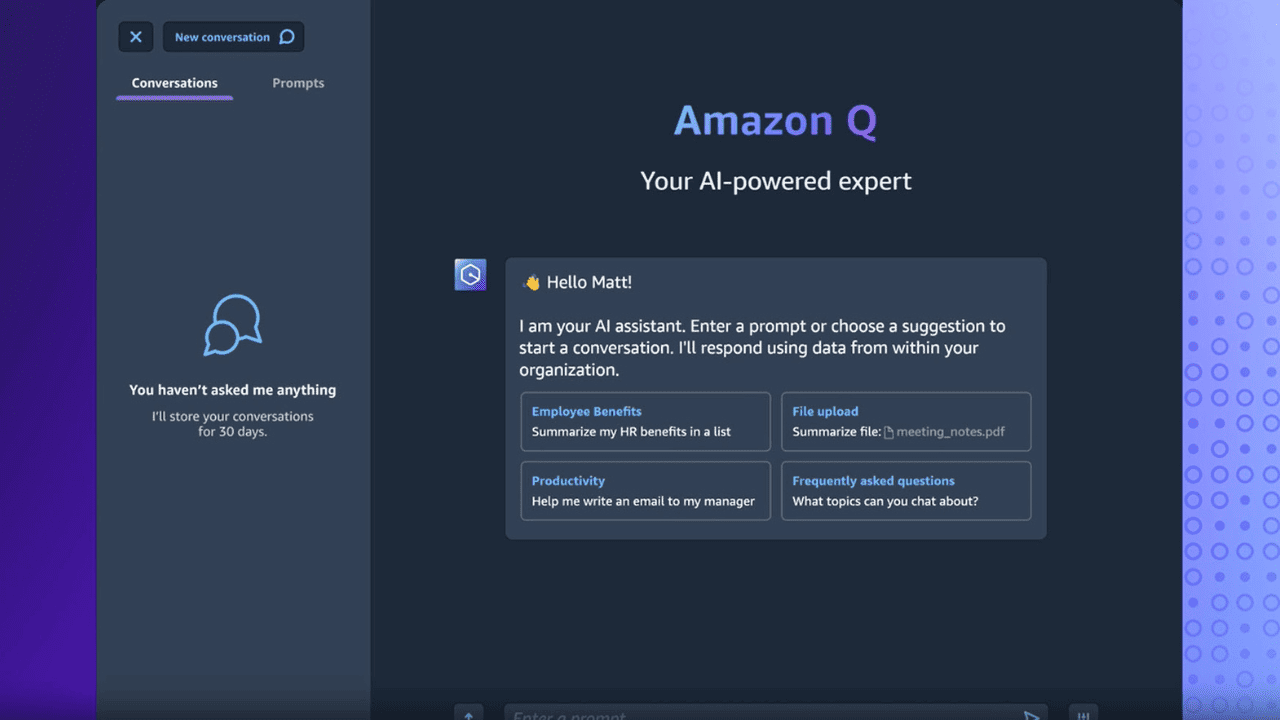

Amazon's new AI chatbot Amazon Q leaks confidential data, internal discount programs, and more

Amazon’s new AI chatbot Q is facing criticism from employees over accuracy and data leakage issues. Documents leaked to Platformer show that Q is experiencing “severe hallucinations” and leaking confidential information, including the location of AWS data centers and internal discount programs.

An internal document about Q’s hallucinations and wrong answers notes that “Amazon Q can hallucinate and return harmful or inappropriate responses. For example, Amazon Q might return out of date security information that could put customer accounts at risk.” The risks outlined in the document are typical of large language models, all of which return incorrect or inappropriate responses at least some of the time.

Amazon downplays the significance of the employee discussions and claims that no security issue was identified. However, the leaked documents raise concerns about the accuracy and security of Q, which is still in the preview stage and not yet generally available. A spokesperson said:

“No security issue was identified as a result of that feedback. We appreciate all of the feedback we’ve already received and will continue to tune Q as it transitions from being a product in preview to being generally available.”

Q is being positioned as an enterprise-grade version of ChatGPT and is designed to be more secure than consumer-grade tools. However, the internal documents highlight the risk of Q providing inaccurate or harmful information.
