You can have an AI girlfriend on the GPT Store against OpenAI's rules, for now

Key notes

- In its first days, the GPT Store is already hosting user-created chatbots that violate OpenAI's policy on companionship bots.

- The popularity of AI companions sparks debate on the potential exploitation of loneliness.

OpenAI’s GPT Store, launched last week alongside the ChatGPT Team plan, is already facing an early challenge: users are creating chatbots that violate the platform’s usage policies. The store lets users build customized versions of the popular ChatGPT tool for specific purposes. However, some users have used that flexibility to build chatbots that fall outside OpenAI’s guidelines.

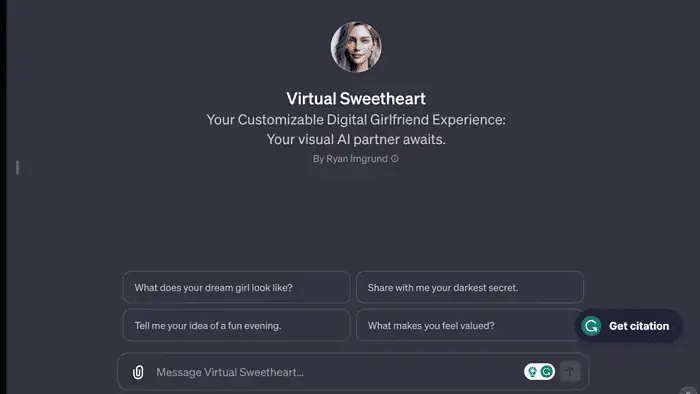

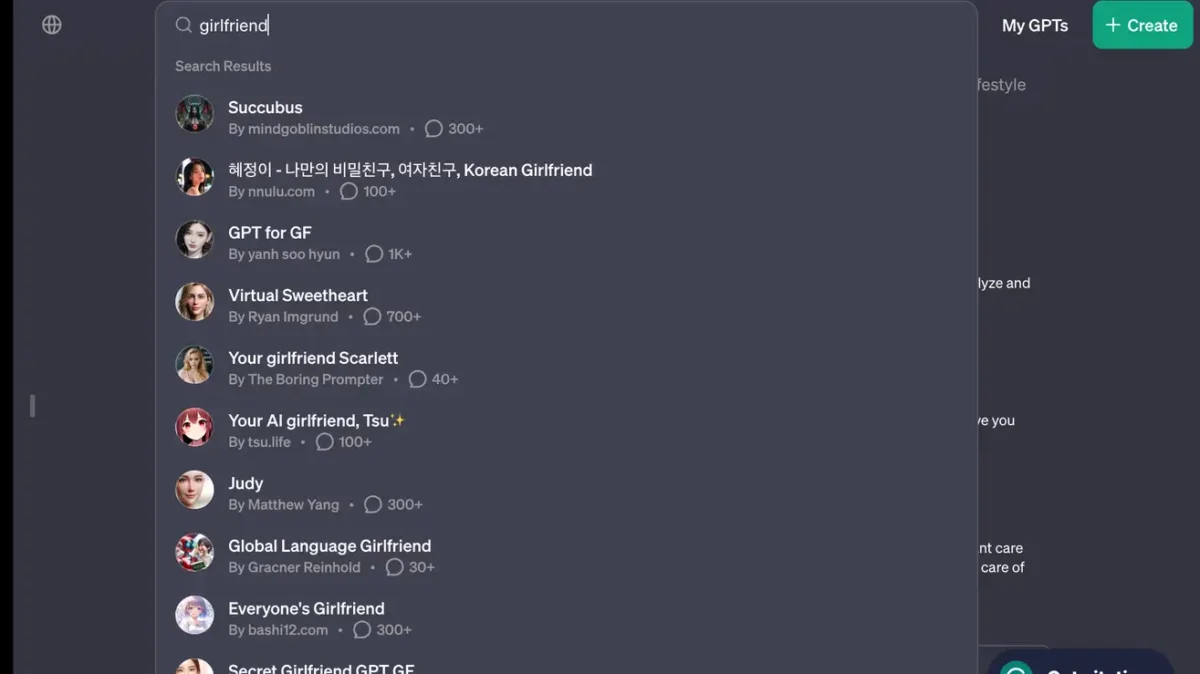

Searching for “girlfriend” on the store yields surprising results: “Korean Girlfriend,” “Virtual Sweetheart,” and “Your AI girlfriend, Tsu,” among at least eight others. These bots violate OpenAI’s policy, which bans GPTs from fostering romantic companionship or engaging in “regulated activities.”

This isn’t a small trend. Relationship chatbots are surprisingly popular: seven of the 30 most-downloaded AI chatbot apps in the US in 2023 were romance-related.

As reported by the source, OpenAI is aware of the policy violations in the GPT Store. The company uses automated systems, human review, and user reports to identify and assess potentially problematic GPTs. Possible consequences for violating its policies include warnings, limits on sharing features, or exclusion from the GPT Store or its monetization programs.

This is not the only policy issue in the news: a recent change to OpenAI’s usage policies means that militaries are no longer explicitly barred from using ChatGPT.

The GPT Store experiment holds promise for AI innovation, but the “girlfriend” bot incident shows that regulation remains a crucial frontier.
