What is GPT-3 and how will it affect your current job?

GPT is short for Generative Pre-trained Transformer, a language model introduced by Alec Radford and colleagues and published in 2018 by OpenAI, the artificial intelligence research laboratory co-founded by Elon Musk. It is a generative language model, a transformer network trained to predict the next word of a passage, and it is able to acquire knowledge of the world and process long-range dependencies by pre-training on diverse sets of written material with long stretches of contiguous text.

GPT-2 (Generative Pretrained Transformer 2) was announced in February 2019 and is an unsupervised transformer language model trained on 8 million documents, a total of 40 GB of text, gathered from web pages linked in Reddit submissions. OpenAI was famously reluctant to release the full model, as it was concerned it could be used to spam social networks with fake news.

In May 2020 OpenAI announced GPT-3 (Generative Pretrained Transformer 3), a model which contains two orders of magnitude more parameters than GPT-2 (175 billion vs 1.5 billion parameters) and which offers a dramatic improvement over GPT-2.

Given any text prompt, GPT-3 will return a text completion, attempting to match the pattern you gave it. You can “program” it by showing it just a few examples of what you’d like it to do, and it will deliver a complete article or story.
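To make the idea of “programming” by example concrete, here is a minimal sketch of how such a prompt might be assembled. The helper name `build_few_shot_prompt`, the "Input:"/"Output:" labels and the translation examples are illustrative assumptions, not part of any OpenAI API; the sketch only builds the text that would be sent to the model and does not call it.

```python
def build_few_shot_prompt(task, examples, query):
    """Assemble a plain-text prompt: a task line, worked examples, then an open slot.

    The "Input:"/"Output:" labels are an arbitrary convention chosen for this
    sketch; any consistent pattern the model can continue would do.
    """
    lines = [task, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # Leave the final "Output:" empty so the model's completion fills it in.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


# Hypothetical examples showing the pattern we want the model to continue.
examples = [
    ("cheese", "fromage"),
    ("house", "maison"),
]
prompt = build_few_shot_prompt("Translate English to French.", examples, "book")
print(prompt)
```

The prompt ends on an unfinished "Output:" line, so a completion model would be expected to continue the pattern with its answer for the last input.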

GPT-3 achieves strong performance on many NLP datasets, including translation, question-answering, and cloze tasks, as well as several tasks that require on-the-fly reasoning or domain adaptation, such as unscrambling words, using a novel word in a sentence, or performing 3-digit arithmetic. GPT-3 can generate samples of news articles which human evaluators have difficulty distinguishing from articles written by humans.

The last application has always worried OpenAI. GPT-3 is currently available through a private beta, with a paid version expected to be available eventually. OpenAI said it will terminate API access for obviously harmful use cases, such as harassment, spam, radicalization, or astroturfing.

While the people most obviously threatened are those who produce written work, such as scriptwriters, AI developers have already found surprising applications, such as using GPT-3 to write code.

Sharif Shameem, for example, wrote a layout generator where you describe in plain text what you want, and the model generates the appropriate code.

This is mind blowing.

With GPT-3, I built a layout generator where you just describe any layout you want, and it generates the JSX code for you.

W H A T pic.twitter.com/w8JkrZO4lk

— Sharif Shameem (@sharifshameem) July 13, 2020

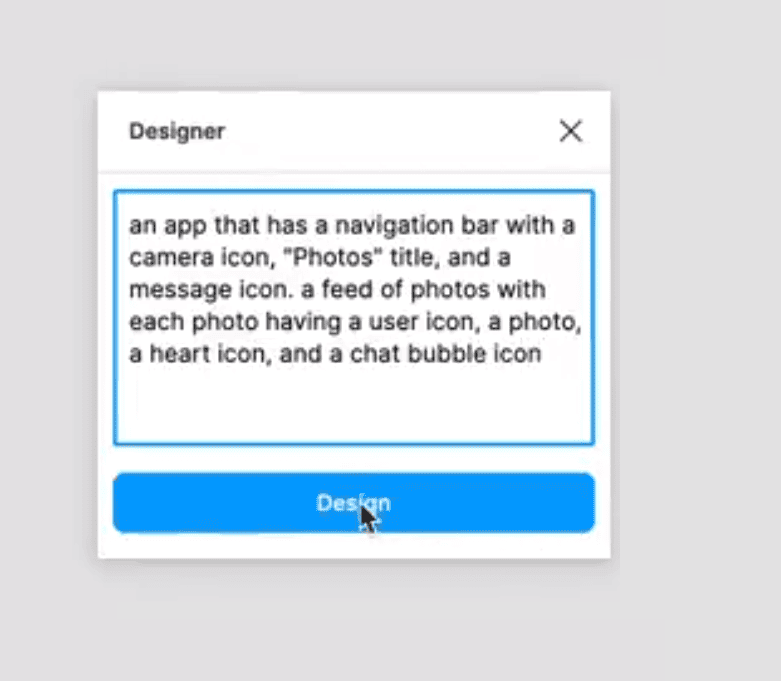

Jordan Singer similarly created a Figma plugin which allows one to create apps using plain text descriptions.

This changes everything.

With GPT-3, I built a Figma plugin to design for you.

I call it "Designer" pic.twitter.com/OzW1sKNLEC

— jordan singer (@jsngr) July 18, 2020

In one early test, it was even asked a medical question and responded by diagnosing asthma and suggesting medication.

So @OpenAI have given me early access to a tool which allows developers to use what is essentially the most powerful text generator ever. I thought I’d test it by asking a medical question. The bold text is the text generated by the AI. Incredible… (1/2) pic.twitter.com/4bGfpI09CL

— Qasim Munye (@qasimmunye) July 2, 2020

Other applications include using it as a search engine or oracle of sorts, and it can even explain and expand on difficult concepts.

I made a fully functioning search engine on top of GPT3.

For any arbitrary query, it returns the exact answer AND the corresponding URL.

Look at the entire video. It's MIND BLOWINGLY good.

cc: @gdb @npew @gwern pic.twitter.com/9ismj62w6l

— Paras Chopra (@paraschopra) July 19, 2020

While it seems this approach might lead directly to a general AI that can understand, reason and converse like a human, OpenAI warns that it may have run into fundamental scaling problems: GPT-3 required several thousand petaflop/s-days of compute, compared with tens of petaflop/s-days for the full GPT-2. It seems that while we are closer, the breakthrough that would make all our jobs obsolete is still some distance away.
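For a sense of scale, one petaflop/s-day is the work done by a machine sustaining 10^15 floating-point operations per second for a full day. The sketch below converts that unit into raw operation counts. The figures 3,000 and 30 are round placeholders standing in for "several thousand" and "tens"; they are assumptions for illustration, not measured values.

```python
SECONDS_PER_DAY = 24 * 60 * 60      # 86,400 seconds
FLOP_PER_PETAFLOP = 10**15          # one petaflop = 10^15 floating-point operations


def petaflop_s_days_to_flop(pf_days):
    """Convert petaflop/s-days into a total count of floating-point operations."""
    return pf_days * FLOP_PER_PETAFLOP * SECONDS_PER_DAY


# Placeholder round numbers (assumptions), chosen only to match the
# "several thousand" vs "tens" wording in the text.
gpt3_pf_days = 3000
gpt2_pf_days = 30

gpt3_flop = petaflop_s_days_to_flop(gpt3_pf_days)
gpt2_flop = petaflop_s_days_to_flop(gpt2_pf_days)
ratio = gpt3_flop / gpt2_flop

print(f"1 petaflop/s-day = {petaflop_s_days_to_flop(1):.3e} FLOP")
print(f"GPT-3 (placeholder): {gpt3_flop:.3e} FLOP")
print(f"GPT-2 (placeholder): {gpt2_flop:.3e} FLOP")
print(f"Ratio: {ratio:.0f}x")
```

With these placeholder inputs the gap in training compute is about two orders of magnitude, the same scale as the gap in parameter counts.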

Read more about GPT-3 at GitHub here.
