User finds "Safety None mode" in Google AI Studio, can handle previously off-limits requests




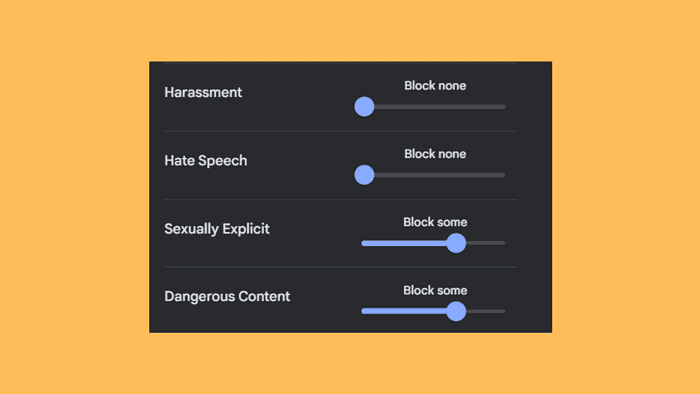

Google AI Studio is shaking things up with a new option called “Safety None mode,” which could be a breath of fresh air for users who feel restricted by other AI models. One user said that, with the mode enabled, Gemini now handles requests that were previously off-limits, making it a more responsive and versatile tool. That could be particularly useful for tasks that call for a wider range of information or perspectives.


The conversation also suggests that Safety None mode makes interactions with Gemini feel more natural. Users report that the AI comes across as more human-like, giving balanced answers and even cracking jokes, which points to more engaging and informative experiences.

However, Safety None doesn’t mean a free-for-all. Vision filters for inappropriate images still appear to be in place, and users may still need to refine their prompts to get the results they want.

Moreover, although the mode loosens restrictions, responsible AI use still matters. As one user’s experience shows, requests for unethical content are still likely to be blocked.

Overall, Safety None mode in Google AI Studio appears to be a step toward less restrictive interactions with Gemini. The jury is still out on its long-term impact, but it offers exciting possibilities for users who value flexibility and access to a wider range of information. Remember, with great power comes great responsibility, so using Safety None ethically remains crucial.
