Taylor Swift not searchable on X (formerly Twitter) due to explicit deepfake image issue

Read our disclosure page to find out how you can help MSPoweruser sustain the editorial team.

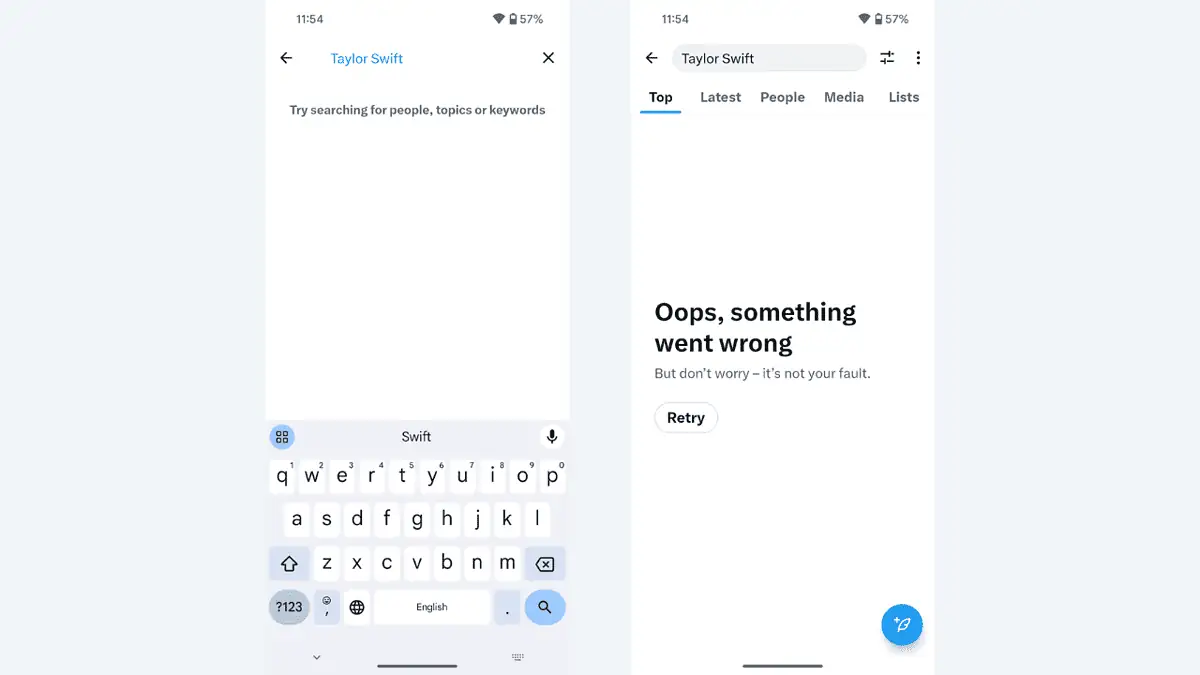

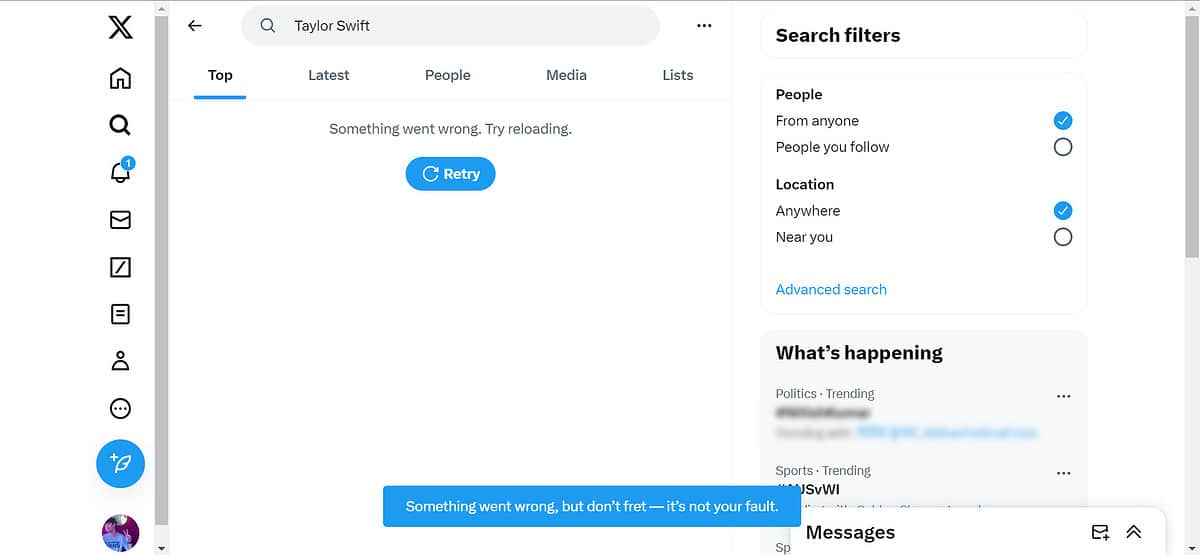

In late January, X (formerly Twitter) faced a challenge when sexually explicit deepfake images targeting Taylor Swift circulated across the internet. In response, X implemented temporary measures to contain the situation, including blocking searches for her name. I tried searching for Taylor Swift on X, on both the PC and the Android app, and got the same outcome each time: no results at all.

AI-generated images depicting Swift in non-consensual scenarios appeared on X, sparking immediate concern from fans and advocates. The circulation of these deepfakes was condemned as online harassment and a violation of the singer’s privacy.

The deepfake incident also drew a response from Satya Nadella, CEO of Microsoft, who emphasized the importance of global collaboration in addressing online harms:

"And there’s a lot to be done and a lot being done there. But it is about global, societal – you know, I’ll say, convergence on certain norms. And we can do – especially when you have law and law enforcement and tech platforms that can come together – I think we can govern a lot more than we think – we give ourselves credit for."

The images were traced back to a Telegram group that shares abusive images of women. Members of the group used Microsoft Designer, a free Microsoft text-to-image generator, to create the images from scratch with generative AI.

Members of the group shared prompts for getting around Microsoft’s safeguards. They found that typing “Taylor ‘singer’ Swift” instead of “Taylor Swift” was more likely to produce images. Although the group’s exact images could not be replicated, the “Taylor ‘singer’ Swift” prompt did work with Microsoft Designer, according to the original report.

The Swift deepfake incident served as a stark reminder of the dark side of the internet and the challenges we face in the digital age.