Opera becomes the first browser to integrate Local LLMs

Key notes

- Users can now directly manage and access powerful AI models on their devices, offering enhanced privacy and speed compared to cloud-based AI.

Opera today announced experimental support for 150 local large language models (LLMs) in its Opera One Developer browser. With this support, users can download, manage, and run AI models directly on their own devices, which can improve privacy and, depending on the hardware, speed compared to cloud-based AI.

Expanded Choice and Privacy with Local LLMs

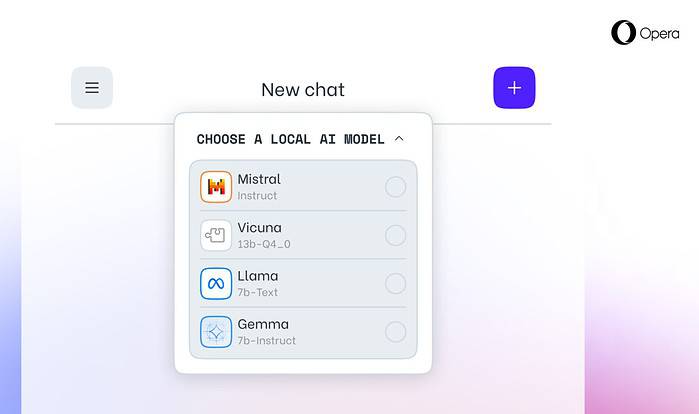

Opera’s integration includes popular LLMs such as Meta’s Llama, Vicuna, Google’s Gemma, Mistral AI’s Mixtral, and many more. Because local LLMs run on the user’s own machine, data does not have to be sent to a server, which protects user privacy. Opera’s AI Feature Drops Program gives developers early access to these cutting-edge features.

How to Access Local LLMs in Opera

To try the feature, users can get Opera One Developer from Opera’s developer site, then select and download their preferred local LLM. Each model needs 2-10 GB of storage, and depending on the hardware, a local LLM can be significantly faster than a cloud-based alternative.

Opera’s Commitment to AI Innovation

“Introducing Local LLMs in this way allows Opera to start exploring ways of building experiences and know-how within the fast-emerging local AI space,” said Krystian Kolondra, EVP Browsers and Gaming at Opera.