OpenAI's GPT-4o mini processes over 200B tokens daily less than a week after launch

GPT-4o mini scores 82% on MMLU, 87% on MGSM, and 87.2% on HumanEval.

Read our disclosure page to find out how you can help MSPoweruser sustain the editorial team.

Key notes

- GPT-4o mini supports a 128K-token context window and up to 16K output tokens, and it now processes more than 200 billion tokens a day.

- It outperforms competitors like Gemini Flash and Claude Haiku in benchmarks.

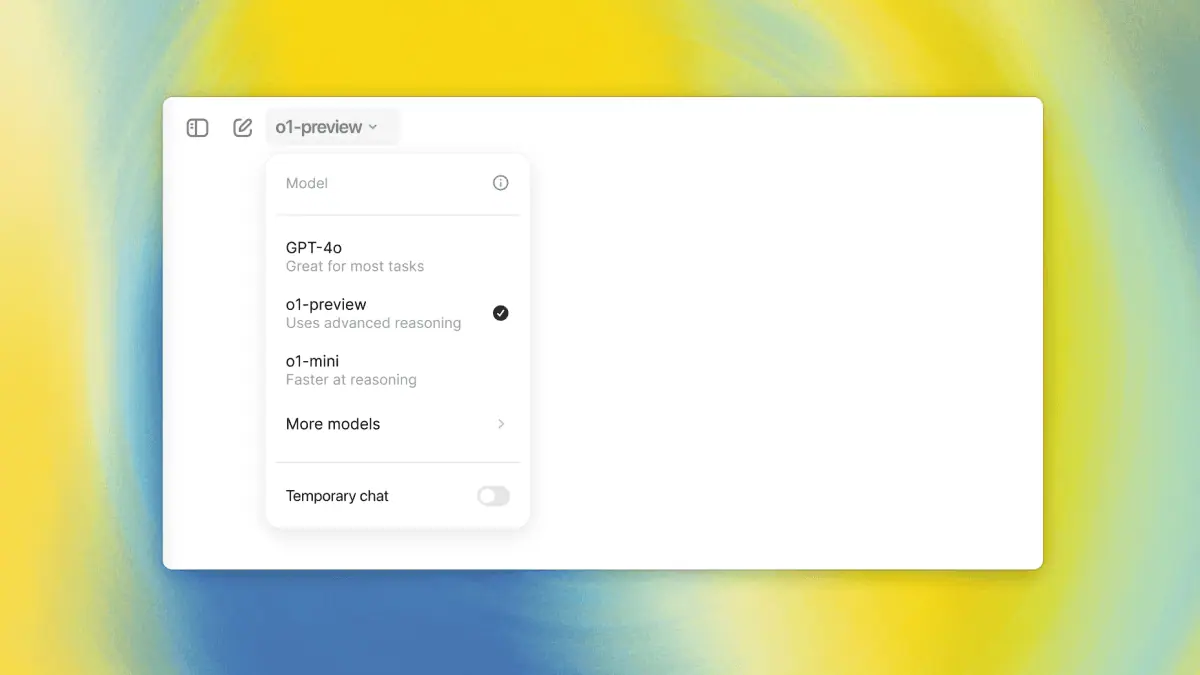

- Now used in ChatGPT Free, Plus, and Team, with support for more input and output types, including audio and video, planned.

OpenAI launched GPT-4o mini, its latest and most capable small model, less than a week ago. At launch, the Microsoft-backed company said the model supports a context window of up to 128K tokens and up to 16K output tokens.

Now, according to an update shared by OpenAI CEO Sam Altman, the small model has passed the milestone of 200 billion tokens processed per day. Some users, however, have noted that the mini model consumes more than 20 times as many tokens for images as GPT-4.

GPT-4o mini is aimed at tasks that need low cost and low latency: applications that chain or parallelize multiple model calls (for example, calling several APIs), pass a large volume of context to the model (for example, a full code base), or respond to users with fast, real-time text (for example, customer support chatbots).

“Today, GPT-4o mini supports text and vision in the API, with support for text, image, video and audio inputs and outputs coming in the future,” OpenAI says.

At launch, OpenAI said GPT-4o mini outperforms competing small models, such as Google’s Gemini Flash and Anthropic’s Claude Haiku, on several benchmarks, scoring 82% on MMLU, 87% on MGSM, and 87.2% on HumanEval.

GPT-4o mini, which has a training data cutoff of October 2023, also replaces GPT-3.5 for ChatGPT Free, Plus, and Team users. Meanwhile, Mistral NeMo, a smaller model Mistral AI built with Nvidia, is also making waves with a 128K-token context window and a 68.0% score on MMLU.
