Mistral's NeMo small model challenges OpenAI's latest GPT-4o mini

OpenAI launched the GPT-4o mini not too long ago

Key notes

- Mistral AI released NeMo, a 12B model with Nvidia’s backing, rivaling OpenAI’s GPT-4o mini.

- NeMo supports 128k tokens and scores 68.0% on MMLU, below GPT-4o mini’s 82%.

- Mistral, funded with $645 million, has partnered with Microsoft to offer models on Azure.

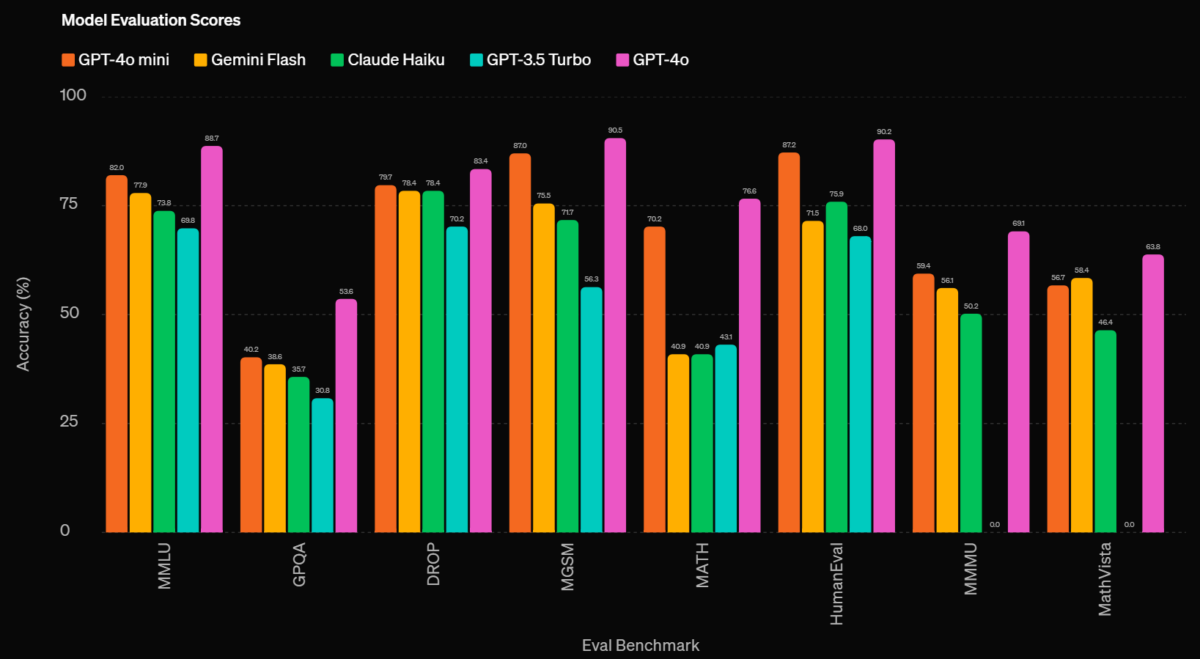

Mistral AI has just announced its latest small model, NeMo, with strong backing from Nvidia. Interestingly, the announcement arrived just as OpenAI unveiled its cost-friendly lightweight model, GPT-4o mini, which posts better benchmark scores than Gemini Flash and Claude Haiku.

This “state-of-the-art” 12B model supports a context length of up to 128k tokens, is designed to deliver strong reasoning, world knowledge, and coding accuracy, and is currently available under the Apache 2.0 license. Both the base and instruction-tuned versions can be accessed on Hugging Face and other platforms.

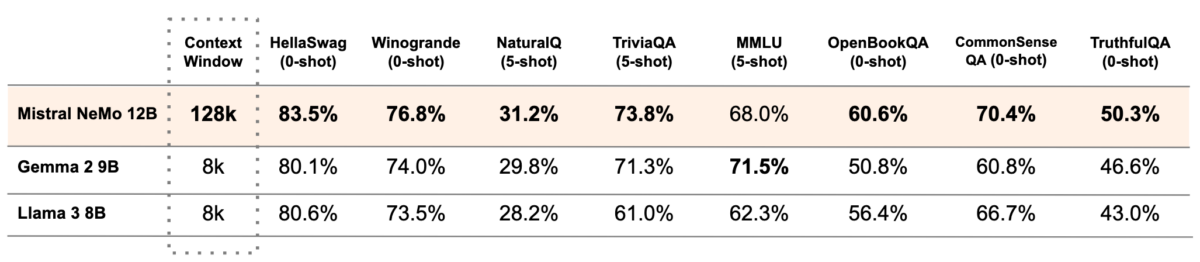

Mistral’s NeMo also posts some impressive benchmark results. On MMLU, which measures a model’s overall knowledge and problem-solving ability, NeMo scores 68.0%. That is close to Google’s Gemma 2, but well short of OpenAI’s GPT-4o mini, which scores 82% on the same benchmark.


The model is also multilingual, with strong performance across a range of languages, and is trained for efficient function calling. It uses the new Tekken tokenizer, which compresses natural-language text and source code more efficiently than the tokenizers used in previous Mistral models, particularly for certain languages.

Mistral is a young company, founded just over a year ago by former Meta and Google DeepMind employees. Having raised roughly $645 million in a funding round in June 2024, it has already been making waves in the AI race.

Earlier this year, Microsoft and Mistral AI also announced a partnership that makes Mistral’s advanced large language models (LLMs) available first on Microsoft’s Azure cloud platform. With that, Mistral joined OpenAI as a provider of commercial models on Azure.

Read our disclosure page to find out how you can help MSPoweruser sustain the editorial team.
