Microsoft's Phi-2 2.7B model outperforms the recently announced Google Gemini Nano-2 3.2B model

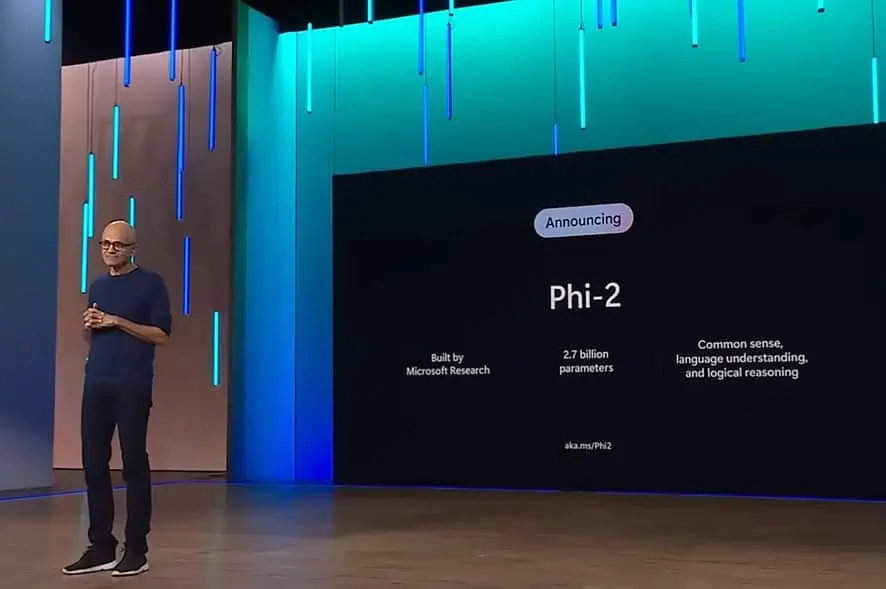

Over the past few months, Microsoft Research has been releasing a suite of small language models (SLMs) called “Phi”. Phi-1, the first release, has 1.3 billion parameters and was specialized for basic Python coding. In September, Microsoft Research released Phi-1.5, also with 1.3 billion parameters but trained with a new data source that included various synthetic NLP texts. Despite its small size, Phi-1.5 delivered nearly state-of-the-art performance compared with other similarly sized models.

Today, Microsoft announced the release of the Phi-2 model with 2.7 billion parameters. Microsoft Research claims that this new SLM delivers state-of-the-art performance among base language models with fewer than 13 billion parameters. On some complex benchmarks, Phi-2 matches or outperforms models up to 25x larger.

Last week, Google announced its Gemini suite of language models. Gemini Nano is Google’s most efficient model, built for on-device tasks, and it can run directly on mobile silicon. Small language models like Gemini Nano enable features such as text summarization, contextual smart replies, and advanced proofreading and grammar correction.

According to Microsoft, the new Phi-2 model matches or outperforms Google Gemini Nano-2 despite being smaller. The benchmark comparison between Gemini Nano-2 and Phi-2 is below.

| Model | Size | BBH | BoolQ | MBPP | MMLU |
|---|---|---|---|---|---|
| Gemini Nano-2 | 3.2B | 42.4 | 79.3 | 27.2 | 55.8 |
| Phi-2 | 2.7B | 59.3 | 83.3 | 59.1 | 56.7 |
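To make the size of each gap concrete, here is a small sketch that computes Phi-2's per-benchmark margin over Gemini Nano-2. It simply hard-codes the scores from the table above as Microsoft reported them; the `SCORES` dictionary and `margins` helper are illustrative names, not part of any Microsoft or Google tooling.

```python
# Scores copied from the Gemini Nano-2 vs. Phi-2 table (Microsoft's reported numbers).
SCORES = {
    "Gemini Nano-2": {"BBH": 42.4, "BoolQ": 79.3, "MBPP": 27.2, "MMLU": 55.8},
    "Phi-2": {"BBH": 59.3, "BoolQ": 83.3, "MBPP": 59.1, "MMLU": 56.7},
}


def margins(a: str, b: str) -> dict[str, float]:
    """Return model a's score minus model b's score for each benchmark, rounded to 0.1."""
    return {bench: round(SCORES[a][bench] - SCORES[b][bench], 1) for bench in SCORES[a]}


if __name__ == "__main__":
    for bench, delta in margins("Phi-2", "Gemini Nano-2").items():
        print(f"{bench}: {delta:+.1f} points")
```

Run as a script, it shows Phi-2 ahead on all four benchmarks, with the widest gap on MBPP (coding) and the narrowest on MMLU.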

In addition to outperforming Gemini Nano-2, Phi-2 also surpasses Mistral 7B and the 7B and 13B Llama-2 models across most of the aggregated benchmark categories. The detailed results are below.

| Model | Size | BBH | Commonsense Reasoning | Language Understanding | Math | Coding |
|---|---|---|---|---|---|---|
| Llama-2 | 7B | 40.0 | 62.2 | 56.7 | 16.5 | 21.0 |
| Llama-2 | 13B | 47.8 | 65.0 | 61.9 | 34.2 | 25.4 |
| Llama-2 | 70B | 66.5 | 69.2 | 67.6 | 64.1 | 38.3 |
| Mistral | 7B | 57.2 | 66.4 | 63.7 | 46.4 | 39.4 |
| Phi-2 | 2.7B | 59.2 | 68.8 | 62.0 | 61.1 | 53.7 |
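The sketch below tallies, for each model in the aggregated table, the categories where Phi-2 at least matches it, along with how many times larger that model is. The data is copied from the table; the names `RESULTS`, `PHI2`, and `wins` are hypothetical helpers for illustration. The largest model, Llama-2 70B, is roughly 26 times the size of Phi-2, which appears to be the comparison behind the "up to 25x larger" claim, though that mapping is an inference rather than something Microsoft states here.

```python
# Aggregated scores copied from the table, in this category order.
CATEGORIES = ("BBH", "Commonsense Reasoning", "Language Understanding", "Math", "Coding")

# (model, size in billions of parameters) -> scores per category
RESULTS = {
    ("Llama-2", 7.0): (40.0, 62.2, 56.7, 16.5, 21.0),
    ("Llama-2", 13.0): (47.8, 65.0, 61.9, 34.2, 25.4),
    ("Llama-2", 70.0): (66.5, 69.2, 67.6, 64.1, 38.3),
    ("Mistral", 7.0): (57.2, 66.4, 63.7, 46.4, 39.4),
}
PHI2 = ("Phi-2", 2.7, (59.2, 68.8, 62.0, 61.1, 53.7))


def wins(phi_scores, other_scores):
    """Categories where Phi-2 matches or beats the other model."""
    return [cat for cat, p, o in zip(CATEGORIES, phi_scores, other_scores) if p >= o]


def summarize():
    name, size, phi_scores = PHI2
    for (model, other_size), scores in RESULTS.items():
        won = wins(phi_scores, scores)
        ratio = other_size / size  # how many times larger the other model is
        print(f"{model} {other_size:g}B ({ratio:.1f}x larger): "
              f"{name} ahead on {len(won)}/{len(CATEGORIES)} ({', '.join(won)})")


if __name__ == "__main__":
    summarize()
```

The tally shows Phi-2 ahead of Llama-2 7B and 13B in every category and ahead of Mistral 7B in all but language understanding, while against Llama-2 70B it leads only on coding.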

While the previous two Phi models were made available on Hugging Face, Phi-2 has been made available in the Azure model catalog. You can learn more about Phi-2 here.