Microsoft's new VASA-1 makes realistic talking faces from images and speech

Talk about bad timing, with elections just around the corner.


Key notes

- VASA, a new AI system, creates realistic talking faces from a single image and audio clip.

- VASA goes beyond lip-syncing, capturing emotions and natural head movements for lifelike results.

- The system offers control over gaze, distance, and emotions in the generated video.

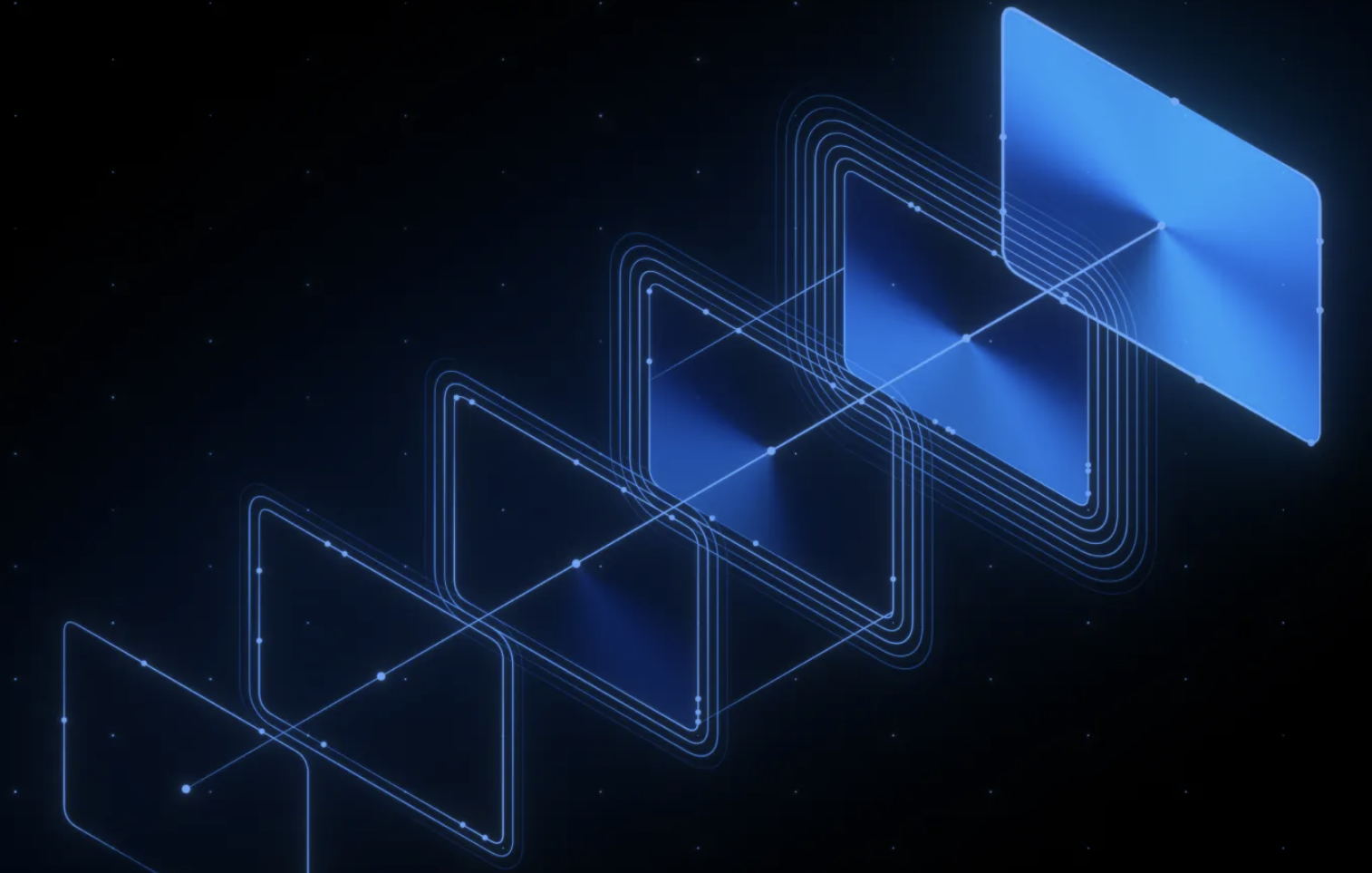

Microsoft researchers have developed VASA, a new framework that can create lifelike talking faces from a single image and an audio clip. VASA-1, the first model built with this framework, produces expressive faces, lip movements precisely synchronized with the audio, and natural head motion. This could make a range of applications more engaging and realistic.

VASA-1 goes beyond simply matching lip movements to audio. It captures a wide range of emotions, subtle facial nuances, and natural head movements, which makes the generated faces more believable. It also gives users control over the generated video: they can specify the character’s gaze direction, perceived distance, and even its emotional state.

Best of all, the system is designed to handle inputs it never saw during training. Even though it wasn’t trained on artistic photographs, singing voices, or non-English speech, VASA-1 can still generate videos from them.

VASA-1 achieves this realism by separating facial features, 3D head position, and facial expressions into distinct components. This “disentanglement” lets each aspect be controlled and edited independently in the generated video.

The researchers behind VASA-1 highlight its real-time efficiency. The system produces 512×512-pixel videos at high frame rates: 45 frames per second in offline mode and 40 frames per second in online generation.
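To put those frame rates in perspective, here is a quick back-of-the-envelope calculation. It uses only the two figures quoted above; the 30 fps playback rate used for comparison is an assumption about typical video, not something the researchers state, and the helper names are purely illustrative.

```python
# Frame rates reported for VASA-1; the 30 fps playback target is an assumption.
OFFLINE_FPS = 45
ONLINE_FPS = 40
ASSUMED_PLAYBACK_FPS = 30.0


def frame_budget_ms(fps: float) -> float:
    """Time spent producing one frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def keeps_up(gen_fps: float, playback_fps: float = ASSUMED_PLAYBACK_FPS) -> bool:
    """True if frames are generated at least as fast as they are played back."""
    return gen_fps >= playback_fps


for mode, fps in (("offline", OFFLINE_FPS), ("online", ONLINE_FPS)):
    print(f"{mode}: {frame_budget_ms(fps):.1f} ms per frame, keeps up: {keeps_up(fps)}")
```

At 45 fps each frame takes roughly 22 ms, and at 40 fps exactly 25 ms, so both modes stay ahead of a 30 fps playback rate, which allows about 33 ms per frame.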

While acknowledging the potential for misuse, the researchers emphasize the positive applications of VASA-1. These include enhancing educational experiences, assisting people with communication challenges, and providing companionship or therapeutic support.

Either way, I still question the timing of this research paper. I believe it could have been held back: many people believe whatever they see on social media, and this technology could be severely misused, especially with elections coming up. I also find it very similar to Google’s VLOGGER.

I know it’s still new, but the eye movement looks weird to me; see here.
