Meta's first SAM object-identifying AI was so successful that the company has now launched SAM 2

It extends segmentation capabilities to videos and is freely accessible under the Apache 2.0 license.

Key notes

- Meta released SAM 2, an upgraded model for real-time video object tracking.

- Meta says it is more accurate than SAM 1 and six times faster at image segmentation, and it segments video in real time at roughly 44 fps.

- SAM 2’s code and dataset are freely available on GitHub.

Over a year after launching the Segment Anything Model (SAM) and its SA-1B dataset of more than 1 billion masks, Meta has followed up with another announcement. The Facebook parent company has introduced SAM 2, a successor model that can identify objects and track them across video in real time.

According to Meta, SAM and SA-1B proved useful in fields such as marine science and even medicine. Building on that success, Meta has extended SAM 2's segmentation capabilities from still images, which the first SAM was limited to, to video, and the code is available on GitHub.

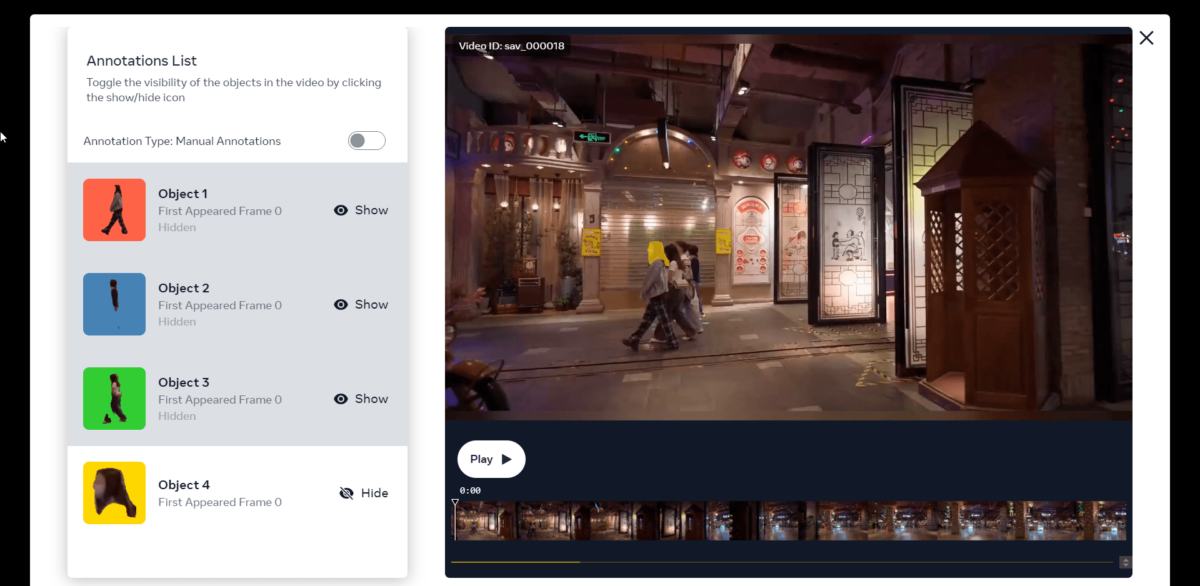

“SAM 2 can segment any object and consistently follow it across all frames of a video in real-time – unlocking new possibilities for video editing and new experiences in mixed reality,” Meta said.

Meta also says that, in image segmentation, the next-generation Segment Anything Model is more accurate than its predecessor and six times faster, and that it performs strongly across video segmentation benchmarks and real-world applications. It requires fewer human interactions to segment video effectively and supports real-time inference at approximately 44 frames per second (fps).
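To give a rough sense of what ~44 fps means in practice, here is a minimal Python sketch that converts a frame rate into a per-frame time budget and checks whether that throughput can keep pace with a video stream. The helper names `frame_budget_ms` and `keeps_up_with` are hypothetical illustrations and are not part of SAM 2's code. The sketch assumes the 44 fps figure represents sustained throughput. Actual speed depends on hardware and model size, which this article does not specify.

```python
def frame_budget_ms(fps: float) -> float:
    """Hypothetical helper: time available per frame, in milliseconds, at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def keeps_up_with(model_fps: float, video_fps: float) -> bool:
    """Hypothetical helper: True if a model processing `model_fps` frames per second
    can keep pace with a video stream playing at `video_fps`."""
    return model_fps >= video_fps


# Assumption: SAM 2's reported ~44 fps is sustained throughput
budget = frame_budget_ms(44)
print(f"{budget:.1f} ms per frame")  # prints "22.7 ms per frame"
print(keeps_up_with(44, 30))         # prints "True" for a typical 30 fps video
print(keeps_up_with(44, 60))         # prints "False" for a 60 fps video
```

At 44 fps, each frame gets about 22.7 ms. In principle, that is enough to keep up with standard 30 fps footage but not with 60 fps footage.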

Released under the permissive Apache 2.0 license, SAM 2’s code, weights, and the new SA-V dataset are freely available.

Its predecessor, the first SAM, could be easily integrated into different systems and worked with many kinds of images out of the box. The SA-1B dataset, with over 1.1 billion masks, was built using a mix of manual and automatic annotation, which made data collection faster and easier.

Meta has also released an online demo where you can try out SAM 2.
