Meet Microsoft DeepSpeed, a new deep learning library that can train massive 100-billion-parameter models

2 min. read

Updated on

Read our disclosure page to find out how can you help MSPoweruser sustain the editorial team Read more

Microsoft Research today announced DeepSpeed, a new deep learning optimization library that can train massive 100-billion-parameter models. In AI, you need to have larger natural language models for better accuracy. But training larger natural language models is time consuming and the costs associated with it are very high. Microsoft claims that the new DeepSpeed deep learning library improves speed, cost, scale and usability.

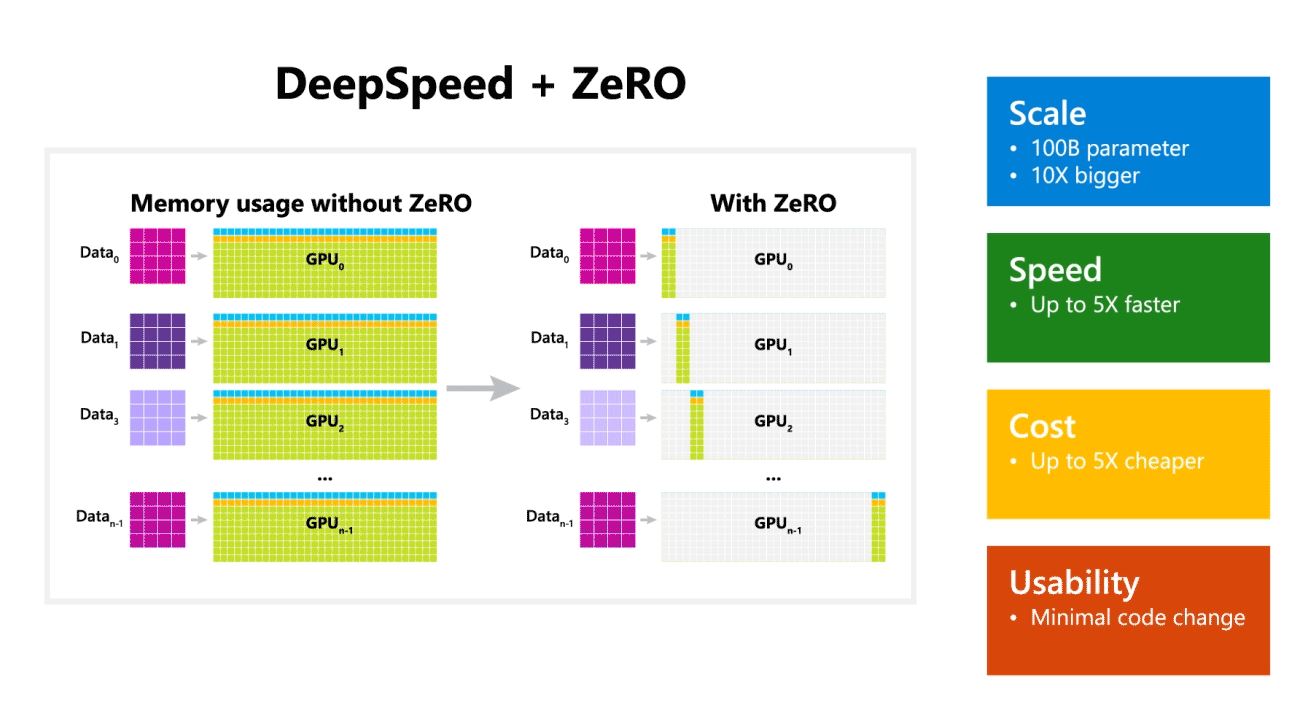

Microsoft also mentioned that DeepSpeed enables language models with up to 100-billion-parameter models and it includes ZeRO (Zero Redundancy Optimizer), a parallelized optimizer that reduces the resources needed for model and data parallelism while increasing the number of parameters that can be trained. Using DeepSpeed and ZeRO, Microsoft Researchers have developed the new Turing Natural Language Generation (Turing-NLG), the largest language model with 17 billion parameters.

Highlights of DeepSpeed:

- Scale: State-of-the-art large models such as OpenAI GPT-2, NVIDIA Megatron-LM, and Google T5 have sizes of 1.5 billion, 8.3 billion, and 11 billion parameters respectively. ZeRO stage one in DeepSpeed provides system support to run models up to 100 billion parameters, 10 times bigger.

- Speed: We observe up to five times higher throughput over state of the art across various hardware. On NVIDIA GPU clusters with low-bandwidth interconnect (without NVIDIA NVLink or Infiniband), we achieve a 3.75x throughput improvement over using Megatron-LM alone for a standard GPT-2 model with 1.5 billion parameters. On NVIDIA DGX-2 clusters with high-bandwidth interconnect, for models of 20 to 80 billion parameters, we are three to five times faster.

- Cost: Improved throughput can be translated to significantly reduced training cost. For example, to train a model with 20 billion parameters, DeepSpeed requires three times fewer resources.

- Usability: Only a few lines of code changes are needed to enable a PyTorch model to use DeepSpeed and ZeRO. Compared to current model parallelism libraries, DeepSpeed does not require a code redesign or model refactoring.

Microsoft is open sourcing both DeepSpeed and ZeRO, you can check it out here on GitHub.

Source: Microsoft

User forum

0 messages