Databricks' $10 million DBRX open-source language models are finally here

For an open-source model that cost $10 million and took two months to train, it's impressive.

Key notes

- Databricks launches DBRX, its set of generative AI models on GitHub and Hugging Face.

- DBRX includes DBRX Base, a pre-trained model for further customization, and DBRX Instruct, fine-tuned for instruction-based tasks.

- It requires powerful hardware to run and, according to Databricks, outperforms other models like GPT-3.5.

Databricks has announced DBRX, a set of generative AI models now available on GitHub and Hugging Face. The models are pre-trained on 12T tokens, use gated linear units (GLU) and grouped-query attention (GQA), and are not multimodal (they can't process images).

DBRX comes in two versions: DBRX Base, a pre-trained model suitable for further customization, and DBRX Instruct, fine-tuned for instruction-based tasks. The 132B-parameter model is open source and works primarily in English, although Databricks says it can also translate into French, German, and Spanish.

Databricks’ VP of generative AI, Naveen Rao, disclosed in a TechCrunch interview that the company spent $10 million and two months training the models. The catch is that running them requires hefty hardware: a minimum of four Nvidia H100 GPUs or equivalent, totaling 320GB of memory, or a third-party cloud service with similar capacity.
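To see why four GPUs is the floor, here is a rough back-of-the-envelope sketch. It assumes the weights are stored in a 16-bit format (2 bytes per parameter) and that each H100 is the 80GB variant, which is what the 320GB total implies. The function names are made up for illustration, and the estimate ignores activations and other runtime overhead.

```python
def weights_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes).

    bytes_per_param=2 is an assumption (16-bit weights), not a figure from Databricks.
    """
    return num_params * bytes_per_param / 1e9


def total_gpu_memory_gb(num_gpus: int, gb_per_gpu: int = 80) -> int:
    """Combined memory across GPUs, assuming 80GB per H100."""
    return num_gpus * gb_per_gpu


DBRX_PARAMS = 132e9  # 132B parameters, as stated in the article

weights_gb = weights_memory_gb(DBRX_PARAMS)   # memory for the weights alone
cluster_gb = total_gpu_memory_gb(4)           # four H100s
headroom_gb = cluster_gb - weights_gb         # what is left for everything else

print(f"Weights: {weights_gb:.0f} GB")
print(f"4x H100: {cluster_gb} GB")
print(f"Headroom: {headroom_gb:.0f} GB")
```

Under these assumptions the weights alone take about 264GB, which would not fit on three 80GB cards (240GB) but leaves some room on four.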

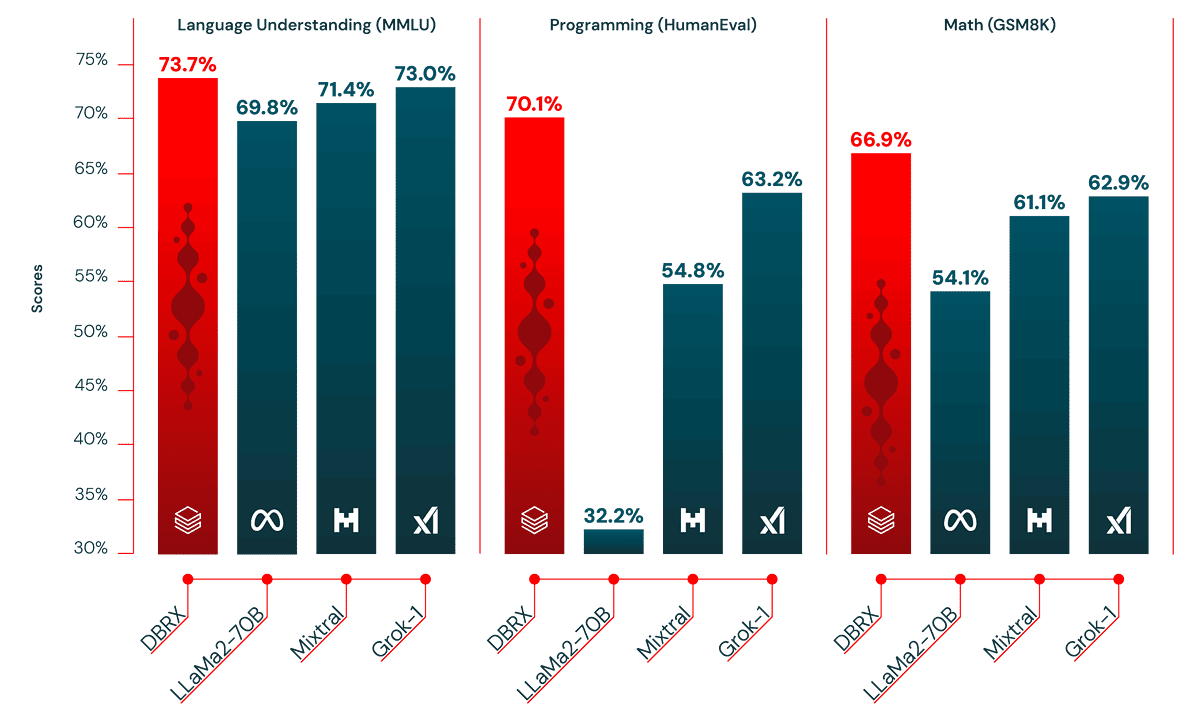

According to its press release, Databricks claims that DBRX outperforms existing open-source models like LLaMA2-70B, Mixtral, and Grok-1, as well as the proprietary GPT-3.5, on certain tasks such as math and logic. The models are licensed under the Databricks Open Model License, and you can deploy them directly to Databricks Model Serving or use them for fine-tuning and batch inference.

You can try Databricks’ DBRX open-source models here.
