22 Shocking AI Electricity Usage Statistics for 2025 and Beyond

AI is taking the world by storm, transforming industries in ways never thought possible. But all that computing comes at a price, so I crunched the numbers to find the most shocking AI electricity usage statistics.

Training and developing AI models takes an enormous amount of data and processing power, and the number of daily AI requests keeps climbing. All of this requires huge amounts of electricity. Can we adapt to meet such demand?

Let’s see!

Key AI Electricity Usage Statistics

AI power consumption is growing fast. These key AI electricity stats show where we are now and the problems the world could face in the future.

- In 2022, data centers, AI, and cryptocurrency together accounted for about 2% of global electricity use, more than most individual countries consume.

- By 2026, the IEA estimates that data centers, AI, and cryptocurrency could together use as much electricity as Japan.

- Running AI models and servers requires two to three times the power of conventional applications.

- AI will be a strong contributor to the projected 80% rise in US data center demand by 2030.

- ChatGPT handles 200 million requests each day, using more than half a million kilowatt-hours of electricity.

- Training the GPT-3 model used as much electricity as 130 US homes consume in a year.

- Elon Musk believes there won’t be enough electricity to power all the AI chips by 2025.

Global AI Electricity Usage Statistics

These AI electricity usage stats give a global overview of the current levels of AI power consumption.

1. In 2022, data centers, AI, and cryptocurrency together accounted for about 2% of global electricity use, more than most individual countries consume.

(Source: Vox)

The International Energy Agency (IEA) warned that in 2022 alone, data centers, AI, and cryptocurrency consumed almost 2% of the world's electricity. Beyond electricity, the infrastructure behind this technology requires vast data centers, plastics, metals, wiring, water, and labor, all of which raise further environmental concerns.

2. Running AI models and servers requires two to three times the power of conventional applications.

(Source: S&P Global)

AI systems run on powerful servers in data centers, which consume large amounts of electricity to power high-speed processing, cooling, and security systems.

A traditional data center rack draws between 30 and 40 kW of power. Add AI processors such as NVIDIA's Hopper-generation H100 into the mix, and that power draw rises two- to threefold.
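
To put those figures in perspective, here's a rough back-of-the-envelope sketch that applies the two-to-three-times multiplier to the 30 to 40 kW rack range and converts the result into yearly energy. It assumes, purely for illustration, that a rack runs at its full rated draw around the clock (8,760 hours a year), which real racks rarely do, so treat the energy figures as an upper bound.

```python
HOURS_PER_YEAR = 8_760  # 365 days x 24 hours; assumes continuous full-load operation


def ai_rack_power_kw(traditional_kw: float, multiplier: float) -> float:
    """Scale a traditional rack's power draw by the AI multiplier."""
    return traditional_kw * multiplier


def annual_energy_mwh(power_kw: float, hours: float = HOURS_PER_YEAR) -> float:
    """Convert a constant power draw in kW into yearly energy in MWh."""
    return power_kw * hours / 1_000


low = ai_rack_power_kw(30, 2)   # lower bound: 30 kW rack, 2x multiplier
high = ai_rack_power_kw(40, 3)  # upper bound: 40 kW rack, 3x multiplier

print(f"AI rack draw: {low:.0f}-{high:.0f} kW")
print(f"Yearly energy at full load: {annual_energy_mwh(low):,.0f}-{annual_energy_mwh(high):,.0f} MWh")
```

This prints a range of 60 to 120 kW per AI rack, or roughly 526 to 1,051 MWh a year if each rack ran flat out. Real-world averages will be lower, since racks seldom run at full load all year.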

3. AI GPUs draw 650 watts of power on average.

(Source: Barron’s)

AI workloads run on GPUs, which consume significantly more energy than CPUs. Across AI GPUs in general, not just NVIDIA's, the average draw is currently 650 watts, considerably more than most traditional graphics cards use.

4. ChatGPT handles 200 million requests each day, using more than half a million kilowatt-hours of electricity.

(Source: The New Yorker)

ChatGPT is only one AI system, but as the most popular, it consumes a staggering amount of electricity. Handling an average of 200 million requests a day takes more than half a million kilowatt-hours of electricity daily. By comparison, the average US home uses just 29 kilowatt-hours a day.
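
Dividing those numbers makes them easier to grasp. This quick sketch uses only the figures quoted above (200 million requests, half a million kWh a day, 29 kWh per US home per day). Because the New Yorker figure is "more than" half a million kWh, the results should be read as lower bounds.

```python
DAILY_REQUESTS = 200_000_000   # ChatGPT requests per day (The New Yorker)
DAILY_ENERGY_KWH = 500_000     # lower bound: "more than half a million" kWh per day
US_HOME_KWH_PER_DAY = 29       # average US household use per day

# Energy per request, converted from kWh to watt-hours
wh_per_request = DAILY_ENERGY_KWH * 1_000 / DAILY_REQUESTS

# Number of average US homes whose combined daily use matches ChatGPT's daily use
equivalent_homes = DAILY_ENERGY_KWH / US_HOME_KWH_PER_DAY

print(f"~{wh_per_request:.1f} Wh per request")
print(f"~{equivalent_homes:,.0f} US homes' worth of daily electricity")
```

That works out to about 2.5 Wh per request and the daily electricity of roughly 17,000 average US homes.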

5. Training the GPT-3 model used as much electricity as 130 US homes consume in a year.

(Sources: The Verge, Statista)

Even before accounting for the latest models, training GPT-3 consumed about 1,300 MWh of electricity, as much as 130 US homes (or 200 German homes) use in a year. Training a large language model takes far more power than typical data center workloads, and consumption only rises as new models emerge, grow, and require more data.
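
As a sanity check, the two US figures can be divided to see what per-home consumption the comparison implies. This sketch uses only the 1,300 MWh and 130-home figures quoted above.

```python
TRAINING_MWH = 1_300   # reported energy used to train GPT-3
US_HOMES = 130         # number of US homes cited for comparison

# Implied yearly consumption of one US home, in kWh
kwh_per_home_year = TRAINING_MWH * 1_000 / US_HOMES
# The same figure per day, for comparison with the 29 kWh/day average cited in stat 4
kwh_per_home_day = kwh_per_home_year / 365

print(f"{kwh_per_home_year:,.0f} kWh per home per year (~{kwh_per_home_day:.1f} kWh/day)")
```

The comparison assumes about 10,000 kWh per home per year, or roughly 27 kWh a day, which is in the same ballpark as the 29 kWh daily average cited for ChatGPT above.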

6. GPT-3 requires as much processing power as the 20 most powerful supercomputers in the world combined.

(Source: Forbes)

Looking at processing power rather than electricity, Forbes reports that GPT-3 requires 800 petaflops, the same amount of processing power as the 20 most powerful supercomputers in the world combined.

7. 15% of Google’s data center energy usage is consumed by machine learning.

(Source: Yale)

Google is one of the few AI leaders that are open about their electricity usage. As of 2024, the search giant says machine learning accounts for 15% of its data centers' energy use.

AI Energy Market Stats

It's not just AI's own consumption of electricity and other energy that's worth considering. AI is also increasingly deployed within the energy sector itself.

8. AI in energy was valued at $4 billion in 2021.

(Source: Allied Market Research)

AI in the energy market was valued at $4.0 billion globally in 2021 and is forecast to reach $19.8 billion by 2031. Growth drivers include smart grids, machine learning, and efforts to curb climate change and cut emissions.
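
Going from $4.0 billion to $19.8 billion in ten years implies a steep compound annual growth rate (CAGR). This sketch computes that rate from the two quoted figures only; the report's own stated CAGR may differ slightly because of rounding or a different base year.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by growth from start to end over `years`."""
    return (end_value / start_value) ** (1 / years) - 1


# AI-in-energy market: $4.0B in 2021 -> $19.8B forecast for 2031 (Allied Market Research)
rate = cagr(4.0, 19.8, 2031 - 2021)
print(f"Implied CAGR: {rate:.1%}")
```

The result is roughly 17.3% growth per year, meaning the market would need to grow by about a sixth every year for a decade to hit the forecast.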

9. North America has a 37.08% share of the global AI energy market.

(Source: Statista)

The latest available data from 2019 shows that North America is the global leader in the artificial intelligence energy market, holding a 37.08% share. By the end of 2024, this is expected to be valued at $7.78 billion.

10. AI has more than 50 different applications in the energy industry.

(Source: Latitude Media)

A recent estimate revealed some 100 energy-related vendors offering AI solutions, with more than 50 possible applications, including grid maintenance and load forecasting.

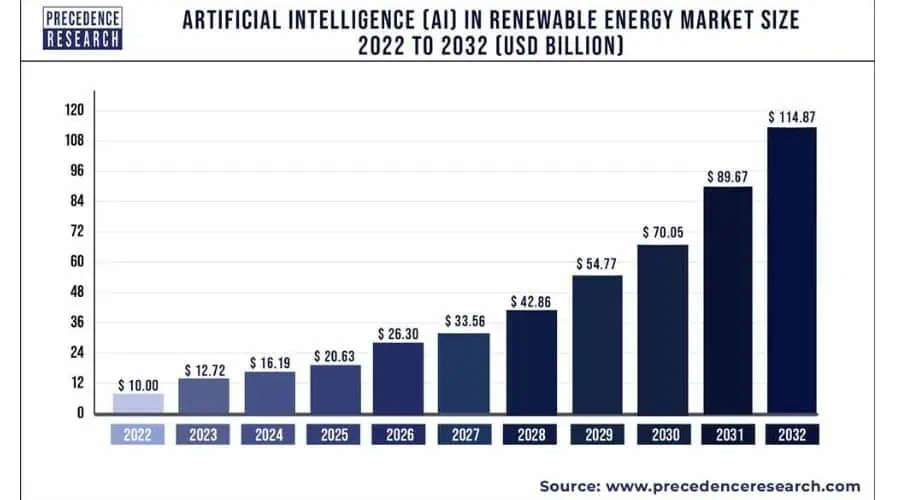

11. AI in the renewable energy market is valued at over $16 billion.

(Source: Precedence Research)

Not just an electricity hog, AI also has growing applications in renewable energy. The global AI in renewable energy market is currently valued at $16.19 billion and is expected to exceed $114 billion by 2032. Asia-Pacific leads the way with a $4.4 billion market, projected to reach $44.4 billion by 2032.

AI Electricity Usage Projections

It’s one thing to understand the power consumption of AI today, but these AI electricity usage statistics explore the growth that’s just around the corner.

12. By 2026, the IEA estimates that data centers, AI, and cryptocurrency could together use as much electricity as Japan.

(Sources: Vox, Data Commons)

Japan has a population of roughly 125 million people and ranks among the world's top 10 electricity-consuming nations. The IEA projects that electricity use by data centers, AI, and cryptocurrency could match the consumption of the entire country as early as 2026.

13. AI data centers could add 323 terawatt-hours of electricity in the US by 2030.

(Sources: CNBC, Wells Fargo)

As AI is more widely adopted and its applications become more complex, its energy consumption is set to rise to meet growing demand.

Wells Fargo predicts that AI data centers could add 323 terawatt-hours of electricity demand in the US by 2030. That's a whopping seven times New York City's current electricity consumption.
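
The "seven times" comparison can be turned around to show what annual consumption it assumes for New York City. This one-line calculation uses only the two figures quoted above.

```python
AI_ADDED_TWH = 323      # Wells Fargo: added US demand from AI data centers by 2030
MULTIPLE_OF_NYC = 7     # "seven times" New York City's current consumption

# Back out the New York City consumption the comparison assumes
implied_nyc_twh = AI_ADDED_TWH / MULTIPLE_OF_NYC
print(f"Implied NYC consumption: ~{implied_nyc_twh:.0f} TWh per year")
```

In other words, the comparison treats New York City as using about 46 TWh of electricity a year.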

14. NVIDIA is expected to ship 1.5 million AI servers a year by 2027.

(Source: Scientific American)

It's not all about gaming for NVIDIA. The graphics card and chip maker is at the forefront of AI servers and is expected to be shipping 1.5 million of these specialized systems a year by 2027. Together, those servers would use 85.4 terawatt-hours of electricity a year, more than many small nations consume.
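
Dividing the 85.4 TWh projection by the number of servers gives a feel for how much power each one draws. This sketch assumes, for illustration, that every server runs continuously for all 8,760 hours of the year; if the projection assumes less uptime, the implied per-server draw would be higher.

```python
SERVERS_PER_YEAR = 1_500_000   # projected annual NVIDIA AI server shipments by 2027
ANNUAL_TWH = 85.4              # projected yearly electricity use of those servers
HOURS_PER_YEAR = 8_760         # assumption: servers run around the clock

# TWh -> Wh, then divide by total server-hours to get average draw per server in watts
avg_watts_per_server = ANNUAL_TWH * 1e12 / (SERVERS_PER_YEAR * HOURS_PER_YEAR)
print(f"Implied average draw: ~{avg_watts_per_server / 1_000:.1f} kW per server")
```

Under that assumption, each AI server averages about 6.5 kW, roughly the draw of several household electric ovens running at once.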

15. AMD could soon rival NVIDIA in the AI GPU market.

(Source: Investors.com)

NVIDIA currently dominates the AI GPU market with its full-stack A100 solution for AI computing. However, analysts believe AMD could reach a 20% share of the AI GPU market, not far below its share of the overall GPU market.

AMD's AI chips offer competitive performance at a more affordable price.

16. AI GPUs will draw 1,000 watts on average by 2026.

(Source: Barron’s)

As AI GPUs develop, the average one is expected to draw 1,000 watts by 2026, a notable increase from the 650 watts drawn today.
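
Power draw in watts only becomes an electricity bill once it's multiplied by time. This sketch compares a single GPU's yearly energy at today's 650 W average and the projected 1,000 W average, assuming (for illustration) that it runs at that average draw all year.

```python
HOURS_PER_YEAR = 8_760  # assumption: the GPU runs at this average draw all year


def yearly_kwh(watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy in kWh used by a device drawing `watts` continuously for `hours`."""
    return watts * hours / 1_000


today = yearly_kwh(650)      # average AI GPU today (Barron's)
by_2026 = yearly_kwh(1_000)  # projected average by 2026
increase = by_2026 / today - 1

print(f"Per GPU per year: {today:,.0f} kWh -> {by_2026:,.0f} kWh (+{increase:.0%})")
```

Per GPU, that's a jump from about 5,694 kWh to 8,760 kWh a year, an increase of roughly 54%, before multiplying by the thousands of GPUs in a single data center.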

17. AI will be a strong contributor to the projected 80% rise in US data center demand by 2030.

(Source: S&P Global)

Navitas Semiconductor predicts that US data center demand will rise 80% between 2023 and 2030, with most of the increase attributed to the power needed to train AI models, which, as noted above, require two to three times as much power as conventional applications.
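
An 80% rise over seven years sounds gradual, but it's easier to judge as a yearly growth rate. This sketch solves for the constant annual rate that compounds to an 80% increase between 2023 and 2030, assuming smooth year-over-year growth (real demand will be lumpier).

```python
GROWTH_MULTIPLE = 1.80   # an 80% rise means demand ends at 1.8x its 2023 level
YEARS = 2030 - 2023

# Annual rate r that satisfies (1 + r) ** YEARS == GROWTH_MULTIPLE
annual_rate = GROWTH_MULTIPLE ** (1 / YEARS) - 1
print(f"Implied growth: ~{annual_rate:.1%} per year")
```

That's roughly 8.8% more data center demand every year, sustained for seven straight years.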

18. Google Search would double its energy usage by switching to AI-only servers.

(Source: Cell)

A recent academic study notes that Google is increasingly using AI servers for search. If the company switched its entire search business to AI, it would double its electricity consumption, an increase roughly equal to the electricity used by the entire country of Ireland.

19. Natural gas companies expect AI electricity demand to cause a business boom.

(Source: CNBC)

With some estimates suggesting electricity demand will rise 20% by 2030 thanks to AI, natural gas producers believe they can fill the gap where renewables fall short. AI could increase natural gas consumption by as much as 10 billion cubic feet per day.

20. Elon Musk believes there won’t be enough electricity to power all the AI chips by 2025.

(Source: Barron’s)

Even with natural gas and other sources, the question remains: does the world have the energy capacity to keep up with the growth of AI electricity usage? SpaceX and Tesla chief Elon Musk believes that as soon as 2025 there won't be enough electricity to run the chips that power AI.

21. Renewable energy could be the answer.

(Source: Barron’s)

Virginia-based global energy company AES is spearheading the transition to clean energy. It predicts data centers will account for 7.5% of total US electricity consumption by 2030, and it argues that powering AI with renewables is the safest way to meet that demand cleanly.

22. AI-run smart homes could reduce household CO2 emissions by up to 40%.

(Sources: BI Team, GESI)

Another sign that the rise of AI isn't all bad for the planet is its ability to make smart homes more efficient. Research suggests smart heating systems can cut gas consumption by around 5%, while automating home energy systems could reduce the average home's CO2 emissions by up to 40%.

Summary

There is no question that AI is here to stay. However, if AI adoption outpaces growth in electricity generation, supply may struggle to keep up with demand, potentially leading to power shortages or higher energy costs.

As these AI electricity usage statistics reveal, efforts are being made to improve the energy efficiency of AI systems, and some AI applications could actually benefit the planet by supporting renewables and making homes more energy efficient.

Yet as AI continues to evolve and expand, the demand for electricity is still likely to remain high.

Sources:

1. Vox

2. S&P Global

3. Barron's

4. The Verge

5. Statista

6. Forbes

7. Yale

8. Statista

9. Latitude Media

10. Data Commons

11. Investors.com

12. Cell

13. BI Team

14. GESI

Read our disclosure page to find out how you can help MSPoweruser sustain the editorial team.
