You won't easily jailbreak Anthropic's Claude AI models anymore

You can also challenge it, if you want

Key notes

- Anthropic’s Constitutional Classifiers filter most jailbreak attempts using natural language rules.

- The method reduced jailbreak success from 86% to 4.4%.

- The company is inviting testers to further challenge the system.

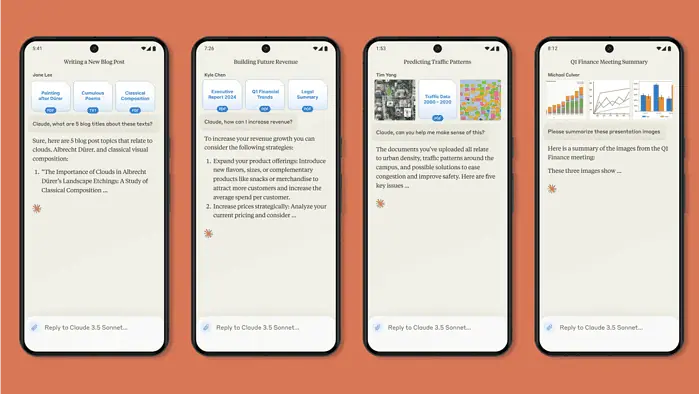

Anthropic’s research team has found a new way to protect its Claude AI models from jailbreaking. The method is called Constitutional Classifiers, and the company tested it in a two-month bug-bounty program in which participants spent an estimated 3,000-plus hours trying to break it.

The Constitutional Classifiers method protects the AI model by filtering the majority of jailbreak attempts using a set of natural language rules, known as a “constitution,” that define acceptable and disallowed content, while also minimizing false refusals and avoiding high computational costs.
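To make the idea of filtering against a set of rules concrete, here is a minimal sketch. It is not Anthropic’s implementation, which the article does not describe: the keyword check is a toy stand-in for a real classifier, the choice to screen both the prompt and the reply is an assumption, and every name in it (`DISALLOWED_TOPICS`, `violates_constitution`, `guarded_generate`, `echo_model`) is hypothetical.

```python
from typing import Callable

# Hypothetical stand-in for a "constitution": topics the rules disallow.
# A real system would use a trained classifier, not keyword matching.
DISALLOWED_TOPICS = ("nerve agent synthesis", "bioweapon production")
REFUSAL = "Sorry, I can't help with that."


def violates_constitution(text: str) -> bool:
    """Toy classifier: flag text that mentions a disallowed topic."""
    lowered = text.lower()
    return any(topic in lowered for topic in DISALLOWED_TOPICS)


def guarded_generate(prompt: str, model: Callable[[str], str]) -> str:
    """Screen the prompt, call the model, then screen the reply."""
    if violates_constitution(prompt):
        return REFUSAL
    reply = model(prompt)
    if violates_constitution(reply):
        return REFUSAL
    return reply


def echo_model(prompt: str) -> str:
    """Placeholder model (an assumption) that just echoes the prompt."""
    return f"You asked: {prompt}"


if __name__ == "__main__":
    print(guarded_generate("How do household cleaners work?", echo_model))
    print(guarded_generate("Explain nerve agent synthesis", echo_model))
```

A benign prompt passes through unchanged, while a prompt that touches a disallowed topic gets the refusal message. A filter that is too broad would also block harmless requests, which is the false-refusal problem the method tries to minimize.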

Anthropic mentions that “183 active participants spent an estimated >3,000 hours over a two-month experimental period attempting to jailbreak the model. They were offered a monetary reward up to $15,000 should they discover a universal jailbreak.”

The results are strong. At the cost of a 23.7% increase in computational costs, the classifiers cut the jailbreak success rate from 86% to 4.4%. The company is now issuing an open challenge, inviting red teamers to try to break the system with “universal jailbreaks” in a demo.
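To put those figures in perspective, the short calculation below works out the absolute and relative drop in jailbreak success. The three input numbers come from the article; the variable names are only illustrative.

```python
# Figures reported in the article.
baseline_rate = 0.86       # jailbreak success rate without the classifiers
guarded_rate = 0.044       # jailbreak success rate with the classifiers
compute_overhead = 0.237   # reported increase in computational cost

absolute_drop = baseline_rate - guarded_rate   # in rate units (0 to 1)
relative_drop = absolute_drop / baseline_rate  # share of successes removed
fold_reduction = baseline_rate / guarded_rate  # how many times lower

print(f"Absolute reduction: {absolute_drop * 100:.1f} percentage points")
print(f"Relative reduction: {relative_drop:.1%}")
print(f"Success rate is about {fold_reduction:.1f}x lower")
print(f"Compute overhead: {compute_overhead:.1%}")
```

In other words, the drop of 81.6 percentage points means roughly 95% of previously successful jailbreak attempts were blocked, in exchange for about a quarter more compute.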

“Challenging users to attempt to jailbreak our product serves an important safety purpose: we want to stress-test our system under real-world conditions, beyond the testing we did for our paper,” the company continues.

Jailbreaking an AI model isn’t easy, but attackers keep developing new techniques.

Last year, a report from the UK’s AI Safety Institute found that major language models are vulnerable to jailbreaking and that harmful content could still get past their safeguards.

Despite efforts to prevent this, simple attacks succeeded in producing toxic outputs in over 20% of cases, with some models providing harmful responses nearly all the time. The study also found that while some LLMs could solve basic hacking tasks, they struggled with more complex ones.
