Google Gemini pauses image generation of people until it fixes historically inaccurate depictions

Key notes

- Google pauses AI image generation of people due to historical inaccuracies.

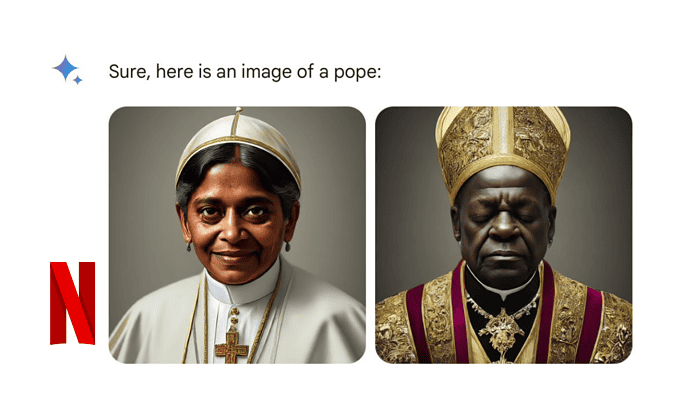

- Users received images of non-white figures for historical requests like the Founding Fathers.

- Google is working on improvements and will re-release an updated version soon.

- The pause only applies to generating images of people; other functionalities remain unaffected.

Google has temporarily paused the ability of its AI tool, Gemini, to generate images of people. This decision follows reports of inaccuracies in historical depictions produced by the tool.

Users requesting images of historical figures, such as the Founding Fathers, encountered results that did not reflect the commonly known ethnicities of those individuals. This discrepancy led to confusion and online discussions regarding the tool’s output.


In response, Google issued a statement acknowledging the issue and announcing a pause on image generation of people while it addresses the concerns.

“We are actively working to improve Gemini’s image generation feature,” Google said. “To facilitate this process, we are temporarily pausing the generation of images of people. We plan to re-release an improved version in the near future.”

This action follows the apology Google issued yesterday for the inaccurate historical depictions generated by Gemini. The company launched the image generation feature earlier this month, joining similar offerings from other AI companies. We previously published an entire post showing how Google has been acting woke.

Google remains committed to addressing the identified issues and plans to release an improved version of the tool soon. The current pause solely applies to the generation of images of people, with other functionalities of Gemini remaining operational.

This incident underscores the ongoing effort to identify and correct bias in AI systems, particularly where historical accuracy and responsible representation are concerned.
