AI going rogue on humans: will Microsoft's AI Red Team prevent this from happening?

Some fear that AI could go rogue on humans once it reaches artificial general intelligence (AGI), and it seems all the AI companies, including Microsoft, are making it their goal to reach AGI as soon as possible. OpenAI has been focusing on it over the past months, while others think that a human-aware AI is the key to AGI. But what if AI reaches AGI and then goes rogue?

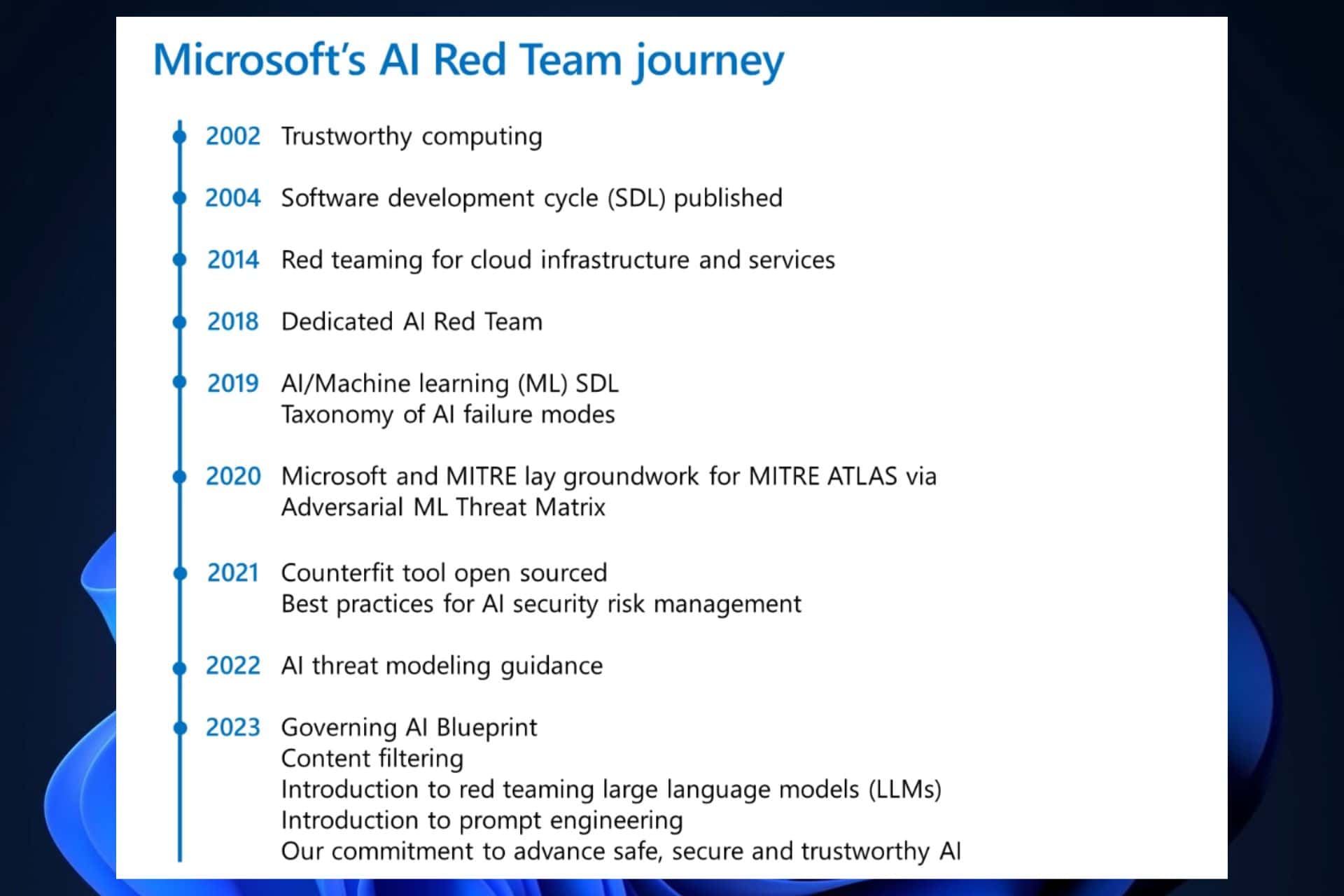

Microsoft appears to take this into consideration: the Redmond-based tech giant runs the Microsoft AI Red Team, which works toward a future with safer AI.

In its blog, Microsoft explains: "As AI systems became more prevalent, in 2018, Microsoft established the AI Red Team: a group of interdisciplinary experts dedicated to thinking like attackers and probing AI systems for failures. We're sharing best practices from our team so others can benefit from Microsoft's learnings. These best practices can help security teams proactively hunt for failures in AI systems, define a defense-in-depth approach, and create a plan to evolve and grow your security posture as generative AI systems evolve."

The Microsoft AI Red Team might not have a solution for AI going rogue, at least for now, but by probing AI systems for failures the way an attacker would, it aims to make them harder to exploit with malicious intentions. As generative AI advances month by month, with new models capable of carrying out tasks on their own, Microsoft's AI Red Team will help make AI implementations safer, according to the group's blog.

Safe and secure AI is the future of AI

In its roadmap, the AI Red Team wants to make sure that AI development centers on safe, secure, and trustworthy AI. At the same time, the team acknowledges that AI systems are probabilistic rather than deterministic: the same input can produce different outputs on different attempts. That unpredictability makes it a tough challenge to come up with a defense.

Even so, the AI Red Team aims to do everything it can to handle such situations.

According to the team, fixing failures found through AI red teaming requires a defense-in-depth approach, just as in traditional security, where a problem like phishing calls for a range of technical mitigations, from hardening the host to smartly identifying malicious URIs. For AI, those layers range from using classifiers to flag potentially harmful content, to using a metaprompt to guide the model's behavior, to limiting conversational drift in conversational scenarios.

Is AI going to go rogue on humans? If it reaches AGI, then yes, that is very much a possibility. However, by then, Microsoft and the other tech companies should be prepared to come up with a strong defense.

Read our disclosure page to find out how you can help MSPoweruser sustain the editorial team.